In the previous entry I ended with an abrupt half-joke where I said I got raytracing working. The idea was that I spent days struggling to get simple old technology working properly, but then casually mastered cutting-edge tech in a single sentence. Sadly, it’s not totally true. I got access to raytracing, but I think it’s a stretch to say it’s “working”.

When I left off, I presented you with an image that looked more or less like this:

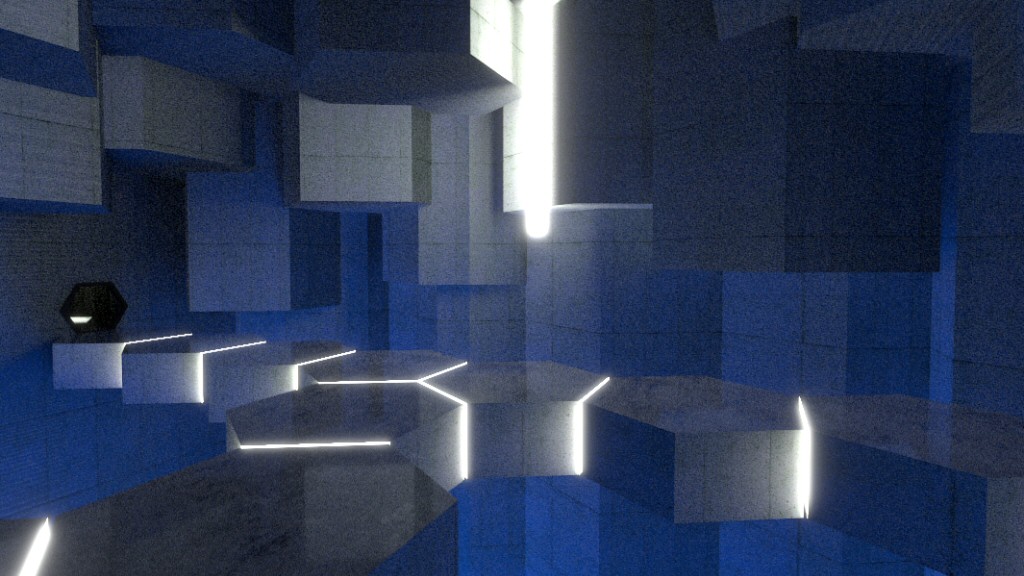

That’s what the program looks like when you leave it alone, but if you move the camera even slightly then it looks something like this:

To a certain extent, this is expected. This is what you get with raytracing / path tracing. Even on the cutting-edge magic hardware we have these days, you still don’t get more than a few thousand rays per frame. We get around this with two techniques:

- We take the little bit of data available and spread it out. I’m not sure of the exact algorithm used, but it’s probably something like “if you’re rendering a spot that hasn’t been hit with ANY rays, then use the nearest available one”. So those existing pixels of light would get smeared out to fill the gaps. This means that the lighting starts out “blurry”. The walls and textures remain crisp, but the patterns of light and dark are initially vague. This gives the images a kind of “dreamy” quality.

- We accumulate rays over multiple frames. Each frame adds a few more rays to the scene, gradually filling things in and sharpening up the lighting. This means the room is initially dark if the whole thing enters the frame abruptly. The room starts out dim, and then gradually lightens as more light rays are added. You’d think this would look wrong, but it ends up feeling like your eyes adjusting when you enter a dark room. This works even if the previous room and this new one are supposed to be the same level of brightness. You can run back and forth between the two rooms, and it will always feel like you’re moving from a brighter room to a dimmer one. It seems to be one of those tricks that your brain just ignores.
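Here's a minimal Python sketch of the two tricks as described above. It assumes a grid of brightness values where `None` marks a pixel that no ray reached. `fill_holes`, `accumulate` and `noisy_frame` are hypothetical names used for illustration, and this is a guess at the general idea, not what Quake 2 RTX or Unity actually do. The blend in `accumulate` is an exponential moving average. That's one plausible explanation for why a newly visible room starts dim and then brightens.

```python
import random

def fill_holes(frame):
    """Give every pixel that received no ray (None) the value of the nearest
    pixel that did. Brute-force search: only sensible for a toy grid."""
    h, w = len(frame), len(frame[0])
    hits = [(y, x) for y in range(h) for x in range(w) if frame[y][x] is not None]
    if not hits:
        return [[0.0] * w for _ in range(h)]  # no rays at all: black frame
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            if frame[y][x] is None:
                ny, nx = min(hits, key=lambda p: (p[0] - y) ** 2 + (p[1] - x) ** 2)
                row.append(frame[ny][nx])  # smear the nearest sample into the gap
            else:
                row.append(frame[y][x])
        out.append(row)
    return out

def accumulate(history, frame, alpha=0.1):
    """Exponential moving average: keep (1 - alpha) of the old image and mix
    in alpha of the new frame. History that starts out black brightens over
    many frames instead of appearing at full brightness."""
    return [[(1 - alpha) * old + alpha * new for old, new in zip(hrow, frow)]
            for hrow, frow in zip(history, frame)]

def noisy_frame(rng, h, w, hit_chance=0.25):
    """Hypothetical stand-in for one frame of raw path tracing: a few pixels
    get a random sample (mean 0.5), the rest get nothing."""
    return [[rng.random() if rng.random() < hit_chance else None for _ in range(w)]
            for _ in range(h)]
```

Call `history = accumulate(history, fill_holes(noisy_frame(rng, h, w)))` once per frame, with `history` starting as all zeros. The image begins near black and creeps toward the average brightness. A real renderer would also have to discard or reproject the history when the camera moves.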

As far as I can tell, these features are collectively called “denoising”. In the Quake 2 RTX demo, there’s a console command to turn denoising off and allow you to see the raw images with low ray counts, like my image above. The problem I’m having in Unity is that I can’t find either of these features. There are a few possibilities:

- Denoising hasn’t been implemented. I’m using a preview version of Unity for this project[1], and it’s possible that denoising is still on someone’s to-do list.

- The denoising in the Quake 2 demo is an implementation of a very NVIDIA-specific feature. Maybe the Unity team wants to avoid manufacturer-specific gimmicks like that and aim for generalized solutions. Therefore, this is as good as it’s going to get until NVIDIA and AMD embrace some sort of standard. That will probably take months or years.

- The denoising feature exists, but it’s not documented and so I have no way of knowing where to find it or how to use it.

Normally I’m hard on the Unity team for the state of their documentation[2], but in this case I’m obliged to cut them some slack. It’s called a “preview” version for a reason, and documentation is usually the last step. It’s annoying to have to fumble around in the dark like this, but that comes with the territory if you’re messing around with beta software.

Soon, or Never?

Link (YouTube)

While I understand the lack of documentation, I really wish I could figure out if this is a problem that Unity plans to solve in the future. Without denoising, this system is largely useless for realtime projects. Indeed, on the forums it seems like everyone else is doing slow renders of photorealistic scenes.

But Shamus! I use Unity, and I’ve seen a “denoise” feature in the past. Are you blind?!

Yes, there’s actually a feature CALLED “denoising” in the render options, but it doesn’t seem to do anything and I’m not even sure it’s related to raytracing. One of the things I’m struggling with is that the options aren’t very organized right now. Like, there are two competing systems in use right now: Raytracing, and path tracing. But in Unity you have to enable raytracing, and then under the raytracing dialog you get a checkbox for path tracing. That’s like making Coke a subset of Pepsi. The menus are full of options for old-school rendering, current-gen rendering, raytracing, and path tracing. Some options only impact one of these systems, and there’s no way to tell if the feature you’re looking at is relevant in the render path you’re currently using.

At one point I clipped outside the level and I saw the sky was a gradient color with a horizon. It was really confusing to have all that background detail while I was trying to examine the level from the outside, and I wanted a simple flat color. I found four different dialogs that had settings for the gradient sky. I turned them all off, and the feature is still active.

The point I’m getting at is that the interface needs a re-work to support all this new stuff, and until that happens we have random features scattered everywhere and doing anything requires a ton of trial and error.

Anyway, back to the problem at hand.

It’s a shame I can’t make this work a little better. It’s really annoying trying to view the world through this odd shimmering snow, and if I want to see what I’m doing I have to hold perfectly still for 4-7 seconds to allow the rays to build up.

One way to make this slightly less painful is to make it so that there’s a lot more light. To this end, I slapped a grid of lights on the ceiling texture. It looks terrible, but it also makes it so the image stabilizes in 2-3 seconds instead of 4-7. I also added a reflective floor, because what else are you gonna do when you have raytracing? I predict that mirrored floors will be all the rage once raytracing catches on. In the 90s, game designers went nuts with the new colored lighting and turned their levels into rainbow neon puke fests[3]. I expect we’ll get the same thing with overly-polished surfaces in the next couple of years.

If Only This Worked…

I’ve been waiting for this moment for a long time. Once we get proper raytracing working, everything gets WAY easier for those of us making procgen content. In traditional rendering, making interesting lighting ends up being a massive pain in the ass. Lights are expensive. If you have too many in the same room, then you’ll kill the framerate. Too few, and you end up with either pitch black areas, or large sections where the lighting is very flat. You have to make sure your real light sources[4] are reasonably close to your apparent light sources[5], and you need to position them so that you don’t get strange shadows[6] or visual artifacts[7].

But in a raytracing context, none of this is a problem. You don’t need to worry about having an extra light here or there, because lights are literally free. Actually, a bunch of people nitpicked the video I linked above, explaining to me that lights aren’t actually free. Lights still cost CPU + GPU cycles to use! You fool!

To which I say: It actually depends on how you set them up. Yes, if you add traditional light sources then there’s some additional overhead. But why bother with that? In my project, I don’t have any light sources. I just have some textures set to glow. The renderer doesn’t even need to know that I’m using this thing as a light source. Rays bounce off of it and hit a wall, and you get light. That’s not any more expensive than bouncing a ray off a dark wall.

Also, making light sources out of objects solves other problems. In the real world, lights generally have a penumbra. You get a spot on the table that’s in half-light because it’s exposed to the left half of the lightbulb, but the right half of the light bulb is blocked by an object on the table. In traditional rendering, room lights come from an infinitely small point in space. This means they cast unnaturally harsh shadows with perfectly crisp edges. For years we’ve been trying to fix this by taking our crisp shadows and deliberately blurring them a bit. That softens the shadow, but it’s expensive and can lead to nonsensical results. Like the blurring goes both ways, which means it looks like the very edge of a wall is slightly transparent because some light appears to pass through it.

When the light is coming from an object, this stops being a problem because it works just like the real world. Larger lights will cast softer shadows because they’ll have a larger penumbra. The light comes out of whatever shape you make, so a tube light will behave differently compared to a light bulb, which will give different results than a bonfire. You don’t need any special code to do this. You just make your object the right shape and texture and you’ll get light.
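To make the penumbra point concrete, here's a toy 2D Python sketch. It assumes a flat segment light 2 units up, a flat opaque occluder from x = -0.5 to 0.5 at height 1, and a floor at height 0. `visible_fraction` is a hypothetical helper, not anything from Unity. It counts how many evenly spaced points on the light a floor point can see. A point light gives all-or-nothing visibility, which is a crisp shadow edge. A wider light gives a gradual falloff.

```python
def visible_fraction(px, light_x0, light_x1, light_h,
                     occ_x0, occ_x1, occ_h, samples=1000):
    """2D toy: how much of a segment light (light_x0..light_x1 at height
    light_h) a floor point (px, 0) can see past an opaque segment
    (occ_x0..occ_x1 at height occ_h, with 0 < occ_h < light_h).
    With light_x0 == light_x1 and samples=1 this is a point light."""
    visible = 0
    for i in range(samples):
        # midpoint of the i-th equal slice of the light
        lx = light_x0 + (i + 0.5) / samples * (light_x1 - light_x0)
        # x position where the shadow ray passes the occluder's height
        cross_x = px + (lx - px) * (occ_h / light_h)
        if not (occ_x0 <= cross_x <= occ_x1):
            visible += 1
    return visible / samples
```

With a light 2 units wide, floor points at x = 0, 0.5, 1, 1.5 and 2 see 0%, 25%, 50%, 75% and 100% of it. A point light in the middle of that same span flips from fully shadowed to fully lit somewhere between x = 0.9 and 1.1.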

With tracing, rooms won’t be overpowered if you use too many lights. Just like in real life, turning on three lamps doesn’t turn the room into a blinding white-out. It just makes it a little brighter. Likewise, having too few won’t make the image flat because you’ll still have light reflected in from other places. And in either case, you can have all the lights you want without worrying about performance. You don’t have to turn off shadows for all the lights in the level and just have shadows enabled for the closest few, and then have a system for fading the shadows in and out as you change position. That’s a huge pain in the ass with lots of weird edge cases and fussy details to worry about[8]. With tracing, you just let the lighting happen. You get shadows everywhere, all the time, and you don’t even need to care about where it’s coming from. A room made entirely of glowing bricks isn’t any more expensive to render than a room made of regular bricks.

All of this makes everything so much simpler for procgen content.

As for this project: I don’t know that there’s anything left to do at this point. I got a BSP reader working to my satisfaction, and I’ve fiddled with Unity’s current ray / path tracing solution. Maybe I’ll come back to this in a few weeks / months when there’s some new information or a new version to try, but for now I think I’m done.

But! There’s more programming content coming Real Soon Now.

WARNING: This post was thrown together at the last minute and didn’t get my usual editing pass. There may be (and probably are) many grammatical and typographical errors. Proceed at your own risk. Additionally, I may have put this warning in the wrong place.

Footnotes:

[1] Unity is REALLY graceful about letting you have several different versions installed simultaneously, and it keeps track of which projects belong with which versions. It’s pretty great.

[2] Particularly this ludicrous habit of documenting important things inside of hour-long video presentations. Arg. My rage is boundless.

[3] The original Unreal was the most over the top about this. And to be fair, it really did look amazing at the time.

[4] The point in space that creates light.

[5] Example: A 3D model of a lamp

[6] You don’t want some little corner of the lamp to cast huge dramatic shadows all over the room.

[7] If you stick the light INSIDE the lamp, then it will be inside a hull of outward-facing polygons. Little bits of geometry will end up blocking the light in odd ways.

[8] Like, this light is one of the five closest, so we want to enable expensive shadows for it, right? No! It’s close spatially, but it’s in another hotel room and there’s no way for any of the emitted light to reach me. So this lamp is wasting processing cycles for no reason and should be turned off.


Twenty Sided


Oh, I thought it was because you’d be drunk, so all shadows looked softer.

flagging others for Shamus

The problem with texture/emissive lighting is that the ray tracer doesn’t know a “smart” location to send rays. Sending rays toward those smart locations is called importance sampling, or direct lighting, or sometimes even Next Event Estimation.

It’s really a flaw in the Unity engine. It needs to earmark your emissive surfaces and randomly sample points on them for direct lighting.

That said… light sources are expensive in ray tracing. A lot of work has been done in the movie houses to handle huge numbers of lights. Unity has a long way to go to get this working in real time, even with hardware-accelerated ray tracing on NVIDIA’s RTX cards.

Light sources are also expensive in normal rendering. “Regular” lighting, shadows, fog, ambient occlusion, mirrors, windows – all of those are a different rendering pass, or add some computations to your existing rendering passes. Unity might have work to do, but they had similar work in the pre-raytracing world.

Lights are very expensive in current-gen ‘real-time lighting’, because you have to put a camera at every light source, render a (low-res) frame, then combine all these when rendering the final image.

– Every light costs a camera (plus a bit)

Lights are even more expensive in classical ray-tracing, because you start at every light source, emit a bunch of photons, follow them through the scene… and none of them hit the camera. Ugh.

– Every light costs X rays. Rays are a lot cheaper than cameras, but you need a heck of a lot of them before they’re useful.

Lights have zero cost in “path-tracing” – or whatever we’re calling “backtrack the light ray from the camera until it hits sufficiently-many interesting things and call that the colour”.

– In fact, more lights can be cheaper because we can usually stop the moment a ray hits a light-emitting object.

A single path-traced ray costs exactly the same as a single ray-traced ray, as all you do is reverse time’s arrow.

It’s surprising how many things are much easier if you make time go backwards!

The problem is that each pixel on your screen needs a lot of rays, scattered slightly differently in order to make a decent image.

So we have a situation where the lights are free, but the pixels cost way way more than before.

This is the second time I’ve seen a comment in this post series saying that classical ray-tracing is about sending rays from the light sources, and path-tracing is about sending rays from the camera. I’m sorry, but this is wrong. I don’t want to nitpick, but terminology in this domain is already confusing enough.

Ray-tracing is a generic term that can be used to describe a whole family of methods, which might explain the confusion. But path-tracing is a very specific method[1], not just casting rays from the camera.

Actually, to be clear, nobody is tracing random light paths from the light sources hoping to hit the camera (which is mostly impossible because the camera is usually a single point).

Conventional, or classical, or old-school ray-tracing casts rays from the camera. On a hit, secondary rays may be used for reflection, refraction and shadows. For shadows, a ray is cast toward every light source (several rays if the light source is not a point and needs to be sampled) to find out whether there is an obstacle. It does not try to sample the lighting environment to get global illumination; it only does direct illumination. More lights mean more secondary rays and worse performance.

Path-tracing also casts rays from the camera. On a hit, it randomly chooses whether the path continues or stops there, based on the material properties[2]. If the path continues, another ray is cast in a random direction, and the process repeats until the path randomly stops. The contribution of all light sources along the path is computed and added to the emitting pixel. In the end, the algorithm takes the mean contribution of the paths for each pixel. The algorithm needs a lot of paths per pixel to converge.
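Here's a toy Python sketch of that loop. It assumes a scene with no geometry at all: each bounce simply hits a light with some fixed probability, and otherwise hits a grey wall. `trace_path`, `render_pixel` and `exact_pixel` are hypothetical names. The survival rule, where a path continues with probability equal to the wall's albedo, is one simple form of Russian roulette. Each path returns either the light's emission or zero, so a pixel rendered with only a few paths tends to be either black or fully lit.

```python
import random

def trace_path(rng, p_light, albedo, emission):
    """One path through a toy 'scene' with no geometry. Each bounce hits an
    emissive surface with probability p_light (the path ends and returns its
    emission); otherwise it hits a diffuse wall with reflectance `albedo`.
    Russian roulette: the path survives the wall with probability equal to
    albedo, so the usual albedo / survival-probability weight is exactly 1."""
    while True:
        if rng.random() < p_light:
            return emission        # hit a light
        if rng.random() >= albedo:
            return 0.0             # absorbed: this path contributes nothing

def render_pixel(rng, paths, p_light=0.1, albedo=0.7, emission=1.0):
    """Mean contribution of many independent paths for one pixel."""
    return sum(trace_path(rng, p_light, albedo, emission) for _ in range(paths)) / paths

def exact_pixel(p_light=0.1, albedo=0.7, emission=1.0):
    """Closed form for this toy: L = p*E + (1 - p)*albedo*L, solved for L."""
    return p_light * emission / (1 - (1 - p_light) * albedo)
```

With `p_light=0.1` and `albedo=0.7` the exact answer is 0.1 / 0.37 ≈ 0.27. `render_pixel` only gets close to it after many thousands of paths.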

This has several implications:

* There will be a lot of very short paths and a few long paths.

* If a path does not encounter a light source, its contribution is 0. That’s why most pixels in Shamus’ screenshot are black and a few are white: in this scene, paths are unlikely to hit a light source.

* Bigger lights make the algorithm converge faster, because paths are more likely to hit them. (The number of lights is irrelevant.)

* To improve convergence speed, it is useful to increase the odds of sending rays in the directions that contribute the most to the final image. The problem is that we don’t know what these directions are; that is why we use sampling in the first place. However, we can try to guess, by artificially increasing the odds of casting rays toward light sources or by using the characteristics of the material. This is called importance sampling.
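Here's a one-dimensional Python sketch of importance sampling. It uses a made-up "direction" range [0, 1) where a bright light covers the last 10%. Both estimators below average to the same total (1.0). The light-biased one still sends half of its samples in random directions, and its variance is roughly a tenth of the uniform one's, so it converges much faster. All names and numbers are illustrative assumptions.

```python
import random

LIGHT = (0.9, 1.0)   # hypothetical: the light covers 10% of the direction range
RADIANCE = 10.0      # so the true total incoming light is 0.1 * 10 = 1.0

def incoming(x):
    return RADIANCE if LIGHT[0] <= x <= LIGHT[1] else 0.0

def uniform_sample(rng):
    x = rng.random()              # every direction equally likely: pdf = 1
    return incoming(x) / 1.0

def light_biased_sample(rng, mix=0.5):
    """Aim at the light with probability `mix`, otherwise pick any direction.
    Dividing by the combined pdf of both strategies keeps the average correct."""
    width = LIGHT[1] - LIGHT[0]
    if rng.random() < mix:
        x = LIGHT[0] + rng.random() * width
    else:
        x = rng.random()
    pdf = (1 - mix) + (mix / width if LIGHT[0] <= x <= LIGHT[1] else 0.0)
    return incoming(x) / pdf

def mean_and_variance(samples):
    m = sum(samples) / len(samples)
    return m, sum((s - m) ** 2 for s in samples) / len(samples)
```

Keeping some samples random (`mix < 1`) matters. A strategy that only ever aims at the light would never see light arriving from anywhere else.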

That being said, some methods do cast rays from the light sources, but not in the hope that they hit the camera:

* Photon mapping casts light paths from the light sources and records the impacts. Then conventional ray-tracing is performed from the camera, and lighting is evaluated by looking at nearby impacts.

* Bidirectional path-tracing casts one path from the camera and one from a light source, then connects the two paths and computes the contribution of the lights along the combined path.

Path-tracing is particularly interesting because it is a very simple algorithm that evaluates the rendering equation correctly (i.e. it is unbiased). This means it supports shadows, indirect lighting, caustics, etc. Its simplicity allows efficient implementation, particularly on the GPU (though this is still non-trivial).

If you are curious about path tracing, there is a C++ path tracer in 99 lines of code out there [3]. The code is a bit dense and does not implement important optimizations, so it is quite slow, but it is great for learning. You can also play freely with Blender Cycles [4], the path tracer provided with Blender (just set the renderer to Cycles, create a simple scene, then set the viewport mode to render and boom! “real-time” path-tracing).

[1] See wikipedia: https://en.wikipedia.org/wiki/Path_tracing

[2] If this is done well, the result is unbiased. It means, among other things, that there is no artificial limit on the number of light bounces.

[3] http://www.kevinbeason.com/smallpt/

[4] https://docs.blender.org/manual/en/latest/render/cycles/index.html

That’s an excellent explanation, thank you very much!

Modern graphics cards can probably cast tens of millions of rays per frame, not a few thousand. The problem is that a path is built from several rays, and if it does not hit a surface that emits light, it is wasted (it won’t contribute to the final image). The whole difficulty of path-tracing is to bias the probabilities at each bounce to increase the likelihood of hitting a light source. That’s what is called importance sampling.

I would be surprised to see Unity implement path-tracing without some kind of importance sampling. The fact that Shamus saw a skybox outside the level makes me wonder whether Unity is sending most of the rays toward the sun. That would be a good thing in an outdoor scene, but it actually makes this indoor scene very slow to converge.

To be honest, this level seems like a hard case for path-tracing. Lighting is scattered all over the place and requires multiple bounces most of the time. The fact that light is emitted by a texture that doesn’t emit over most of its surface probably doesn’t help either. I guess better results could be obtained with better-defined light sources, which would let importance sampling be more effective (assuming importance sampling is implemented in Unity).

So for a while, real-time path tracing will probably need a fairly good idea of where the important light sources are in order to converge quickly. Also, complicated lighting will hurt performance (or, to be exact, how fast the image converges). This means that the day when one can slap a bunch of procedural content together and trust the renderer to deal with it without help has probably not arrived yet. Sorry.

About denoising, NVIDIA’s solution is not only proprietary but also really impractical. The idea is to use machine learning to learn how to reconstruct high-quality images from noisy ones. The problem is that, as far as I know, it needs to be trained for each game on big server farms, which makes it totally unusable for a toy project like this.

Is “important” just boarding towards the direction of a light source? That seems like it would fail for multi-bounce situations. Isn’t the scene also affected by the reflective properties of the surfaces? Consider a mirror, beside a white wall, with a red ball around a corner. I saw a demo of some war-shooter game with the scene reflected off puddles and a shiny car. That’s at least two bounces before reaching a light source.

Finding what is actually “important” is the whole difficulty of path-tracing.

Ideally, at a given point, we want to skew the probabilities so that they match where most light comes from. So if twice as much light comes from direction A as from direction B, you should be twice as likely to send a ray in direction A.
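That ideal case fits in a few lines of Python. The sketch assumes we somehow already know that direction A delivers 2 units of light and B delivers 1. Those numbers are hypothetical, and in practice this is exactly the information we don't have. If you sample in proportion to the contribution and divide by the probability, every single sample equals the true total. If you pick A and B with equal odds instead, the samples are 4 or 2, and they are only correct on average.

```python
import random

def sample_direction(rng, contrib, probs):
    """Pick a direction according to `probs`, then return its contribution
    divided by the probability of picking it (a one-sample unbiased estimate)."""
    r = rng.random()
    for name in contrib:
        if r < probs[name]:
            break
        r -= probs[name]
    # if rounding ever runs past the last entry, `name` is still the last direction
    return contrib[name] / probs[name]

contrib = {"A": 2.0, "B": 1.0}   # hypothetical: A delivers twice as much light as B
total = sum(contrib.values())    # the quantity we are trying to estimate: 3.0
uniform = {"A": 0.5, "B": 0.5}
proportional = {k: v / total for k, v in contrib.items()}  # A twice as likely as B
```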

Of course, the problem is that we don’t know where the light comes from: that’s what we are trying to compute. So we have to guess. Direct illumination is way more important than indirect lighting, and we really want to hit a light somewhere along the path, so increasing the probability of casting rays toward lights makes sense.

However, in some cases it might make the rendering slower. You probably don’t have direct light hitting the area below a table, so casting rays toward the light from below a table is going to sample a dark spot, which is exactly what we try to avoid. That’s why it is important to still cast rays in random directions.

There is another form of importance sampling based on the properties of the material. For instance, for a glossy material you should increase the odds of casting rays toward (roughly) the reflection direction, because that’s what will contribute the most to the final image. If your surface is a perfect mirror, you _must_ tweak the odds so that some rays go in the reflection direction, because it is an important path and you just can’t find it randomly.
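For the glossy case, one common textbook choice is a Phong-style lobe whose density falls off as cos^n of the angle from the mirror direction. The Python sketch below assumes that lobe, which isn't necessarily what any particular renderer uses. It works in a local frame where +z is the mirror-reflection direction, and it leaves out building that frame from the surface normal. Raising `shininess` squeezes the samples toward +z, and a perfect mirror is the limit of that.

```python
import math
import random

def sample_glossy_lobe(rng, shininess):
    """Draw a direction with pdf proportional to cos(theta)**shininess, where
    theta is the angle from the mirror direction (+z in this local frame).
    Returns (direction, pdf) with the pdf measured per unit solid angle."""
    u, v = rng.random(), rng.random()
    cos_t = u ** (1.0 / (shininess + 1))           # inverse CDF of the lobe
    sin_t = math.sqrt(max(0.0, 1.0 - cos_t * cos_t))
    phi = 2.0 * math.pi * v                        # uniform around the mirror axis
    direction = (sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t)
    pdf = (shininess + 1) / (2.0 * math.pi) * cos_t ** shininess
    return direction, pdf
```

As with any importance-sampled estimate, the light gathered along `direction` has to be divided by the returned `pdf`.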

Now, I lack the path-tracing experience to give a more detailed explanation, and I’m not sure how people implement importance sampling in practice. But it seems you need to find a good balance between aiming for the light and picking totally random directions.

Frick, I made a typo on my phone. “just boarding towards the direction of a light source” should have been “just biasing the rays towards the direction of a light source”

On a ray tracing forum, back when RTX cards were new, people were saying they were generally seeing the RTX 2080 do 1-4 Grays/s on “real” scenes (i.e. not specially constructed to get high numbers; for comparison, NVIDIA’s marketing was saying up to 10 Grays/s). How that translates to rays/frame obviously depends on what fps you’re trying to run at, but let’s call the target 50 fps just to make the math nice. That gives a budget of around 20-80 Mrays per frame, i.e. in the tens of millions of rays per frame.
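Here's the arithmetic spelled out in Python. The 1080p resolution used for the per-pixel figure is an assumption; the comment doesn't state one. For the real-scene figures it works out to roughly 10 to 40 rays per pixel per frame, and each path needs several rays.

```python
def rays_per_frame(rays_per_second, fps):
    return rays_per_second / fps

PIXELS_1080P = 1920 * 1080   # assumption: a 1080p frame, not stated in the comment

for grays in (1, 4, 10):     # forum figures for real scenes, then NVIDIA's marketing figure
    per_frame = rays_per_frame(grays * 1e9, 50)
    print(f"{grays} Grays/s at 50 fps: {per_frame / 1e6:.0f} Mrays/frame, "
          f"about {per_frame / PIXELS_1080P:.1f} rays per pixel")
```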

I think you’re conflating two things here. NVidia uses DLSS to increase frame rates by upscaling from a lower rendering resolution. This works particularly well with ray-traced lighting because smaller framebuffer = fewer rays needed. AFAIK, the adaptive filtering shader used by e.g. Quake 2 RTX to increase raytracing efficiency by preserving lighting data between frames is a separate system. You can run all of these RTX games with raytracing enabled, but DLSS turned off.

This adaptive filtering shader seems to be what Unity is lacking; the effect Shamus is describing, of the image stabilising over several seconds, is what happens if you disable that shader in Quake 2 RTX.

Loved reading about this project.

It overlapped with an interesting presentation from Exile-Con last fall (a convention for the ARPG Path of Exile, think Diablo 2) by their RendererGuy™ (the whole thing is worth a look, imo), where he explains the tricks they use to get away from point light sources, raytracing, etc. in their sometimes very resource-demanding game.

(They had another one on their use of procedural content, could be of interest to you, Shamus).

Neat – all their levels are made out of large (like 10m x 10m) tiles that get stitched together! :)

According to this article, ray-tracing rendering scales better with the number of polygons than pre-raytracing rendering. When raytracing starts getting more affordable and mainstream[1], indie games will be plagued by just as much high-poly photorealism nonsense as AAA games! Noooooooooooooo!

[1] Graphics cards, engines, tutorials – everything in place.

Just for the record, these screenshots all look like interesting-to-amazing art styles to play a game in.

Yeah, the speckled ones look like old film grain (Mass Effect, Left 4 Dead), and the sparsely-speckled one looks like Return of the Obra Dinn. :)

Film grain, motion blur, depth of field, and lens flare are all evil abominations that should continue to be seen as limitations of physical cameras, not things to emulate.

Film grain in Mass Effect was friggin sweet tho. I was upset when it wasn’t available in ME3, I tried to look for some universal shader that would add it to any game. Didn’t find any.

Motion blur and DoF are just as much effects of limitations in our eyes and can be done well too. The only good implementation I’ve seen was way back in Crysis tho.

“From the archives” section below this post had a link to this article from 2 years ago:

https://www.shamusyoung.com/twentysidedtale/?p=41474

“Most of us have concluded that the game is dead, so I have no idea why Valve has been reluctant to make it official. ”

Now we have an idea – because they had been working on Half-Life: Alyx :)

It seems that “Rainbow Neon Pukefest” isn’t actually a Glitterpunk band. This is a crime and a travesty.

Be the change you wish to see in the world!

Currently games (particularly Frostbite games) are already suffering from developers turning up their lighting systems to 10. It will be non-stop fights in discos for the next few years!

Alternately, recreate the fight scene in a room of mirrors, from the second Conan film. I think this was in a more recent film, too…

There was a mirror fight in the latest John Wick. I bet developers are itching to have one when the tech allows it.

Also those fights in recent John Wick / James Bond films with dark rooms and strobed colour lighting.

Cue the wave of “Robot Roller Derby Disco Dodgeball” clones!

In addition to doing denoising, I believe modern real-time ray tracing does sample accumulation in a texture cache. It doesn’t look like Unity is doing this (or it’s a secret how to turn it on), but that would give the effect you’re describing of building up light samples over the period of a handful of frames. The camera wouldn’t need to remain stationary, because the ray samples are stored per geometry, not per viewpoint. You still have to cast specular rays per frame to get accurate reflections, but it’s a lot better than refreshing the whole scene.
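Here's a minimal Python sketch of the idea. It assumes lighting samples can be keyed by a surface patch ID. `SurfaceCache` and `render_frame` are hypothetical, and real texture-space caches are far more involved. The running average lives on the surface rather than on the screen. That means patches that stay in view after the camera moves keep their accumulated samples, and only newly visible patches start from scratch. It only covers diffuse lighting, since specular reflections depend on the viewpoint.

```python
import random
from collections import defaultdict

class SurfaceCache:
    """Hypothetical world-space lighting cache: a running mean of diffuse
    lighting samples per surface patch, independent of the camera."""
    def __init__(self):
        self.total = defaultdict(float)
        self.count = defaultdict(int)

    def add_sample(self, patch, value):
        self.total[patch] += value
        self.count[patch] += 1

    def lighting(self, patch):
        n = self.count.get(patch, 0)
        return self.total[patch] / n if n else 0.0

def render_frame(cache, visible_patches, true_light, rng):
    """Shoot one new noisy sample per visible patch, fold it into the cache,
    then shade each pixel from the cache rather than from this frame alone."""
    for patch in visible_patches:
        noisy = true_light[patch] * 2.0 * rng.random()  # toy sample with mean true_light[patch]
        cache.add_sample(patch, noisy)
    return [cache.lighting(patch) for patch in visible_patches]
```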

Looking forward to the More Programming Content!

Oh yeah, that makes sense – it’s basically a cache of half of the computations, from lights to objects, but not from objects to the camera, right?

Great article.

Could you build your level as a BSP and import it into Quake 2 RTX?

“But! There’s more programming content coming Real Soon Now.”

Yay! Please do.

Just what I was looking for, a little light reading to break up my working from home day. Thank you!

How does fire render in raytracing? Do you emit glowing particles and hope one path hits? That does not sound good for denoising with rays from previous frames, as all the particles move constantly.

I don’t know all the techniques, but Blender does volume rendering by samples. So, when in a volumetrically shaded… volume, the ray will get sampled every so often. Sometimes this results in diffusion (as in dusty air) and sometimes (as in fire) it adds light to the ray.
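Here's a simplified Python sketch of the emission part. It assumes a ray marched in fixed steps through a medium whose absorption `sigma(t)` and emitted radiance `emission(t)` are given as functions of distance along the ray. This is deterministic ray marching, not Blender's stochastic volume sampling, and it ignores scattering (the dusty-air case). Each step adds its own glow, dimmed by everything in front of it. The returned transmittance says how much of whatever is behind the flame still shows through.

```python
import math

def march(sigma, emission, length, steps):
    """Emission-absorption ray march. sigma(t) is the absorption coefficient
    and emission(t) the emitted radiance at distance t along the ray; both
    are treated as constant within each step (sampled at its midpoint).
    Returns (glow reaching the camera, transmittance through the volume)."""
    dt = length / steps
    transmittance = 1.0   # fraction of light from the current depth that reaches the camera
    radiance = 0.0
    for i in range(steps):
        t = (i + 0.5) * dt
        absorbed = 1.0 - math.exp(-sigma(t) * dt)           # fraction this step blocks
        radiance += transmittance * absorbed * emission(t)  # its glow, dimmed by what's in front
        transmittance *= 1.0 - absorbed
    return radiance, transmittance
```

For a uniform flame the result matches the closed form `emission * (1 - exp(-sigma * length))`. Because nothing is cached between frames, a flame whose `sigma` and `emission` change every frame costs no more than a static one.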

When we finally get another officially-licensed TRON game, it’s going to look so good.