In my column this week, I give my take on the announced specs for the next-gen PlayStation. Below are a few additional thoughts that were too digressional[1] to be included in the column.

I find it interesting that Japanese company Sony has an American in charge of designing their flagship gaming hardware. Even more interesting is that the American in question is also the designer / co-programmer of Marble Madness way back in 1984. Cerny’s Wikipedia page is an interesting list of honors and accomplishments. The guy has had quite an eclectic career.

The other interesting note is that the proposed PS5 is continuing the trend of consoles and PCs converging. On the console side, the Xbox and PlayStation look increasingly like PCs in terms of hardware. On the PC side, Microsoft is trying to turn the Windows ecosystem into a closed, locked-down platform using the Windows 10 store.

These goals are understandable in isolation, but contradictory in nature.

- Companies want their machines to be based on standard consumer hardware, because those components are cheap thanks to economies of scale.

- Companies want total control over the user’s machine, because this is a good anti-piracy measure and controlling people makes it easier to extract money / information from them.

They want the price advantages of having open hardware but the control advantages of running a walled garden. The problem is that as hardware becomes more standardized, it’s easier for the community to wrest control away from the manufacturer. Jailbreaking your device becomes a matter of applying homebrew patches and requires less reverse-engineering and custom-built chips. The more open the hardware, the harder it is to protect the operating system from those danged meddlesome end-users.

Sony isn’t the only one that wants contradictory things. Personally…

- I’d love it if Microsoft gave up on their creepy Orwellian console and left the games business. At the same time…

- I want there to be lots of competing consoles because that’s generally better for end users.

Of course, the best way to reconcile these two things is to hope that Microsoft gets their act together and stops making a mess of things. Sadly, I don’t think the company is capable of doing that. They’ve had over a decade to work on this, and they don’t seem to be getting any better. They don’t just build obnoxious, inconvenient, privacy-invading systems, they build malfunctioning obnoxious, inconvenient, privacy-invading systems. Between GFWL and the Windows 10 Store, Microsoft has managed to inflict a lot of frustration on me, and I’ve barely used their products.

Still no PS2 support. I have a PS2[2] but I’d love it if I could have one less device in the cable nest. Also, it would be nice if we weren’t relying on decades-old devices for access to the greatest console library[3] in the history of the medium. Assuming the individual components are still manufactured somewhere, they ought to be pretty cheap by now. If they aren’t, then that’s all the more reason to make some more before the machines start dying off in significant numbers.

(This is based on the assumption that emulation isn’t good enough. I know the emulation scene has traditionally had a hard time getting a proper reproduction of PS2 behavior. It’s been a few years since I looked, but the last time I gave PS2 emulation a go there were still a lot of odd timing problems and rendering glitches. Then again, Sony has access to the full specs and don’t need to engage in any messy reverse-engineering, so maybe they’ll have more success emulating PS2 behavior on modern devices. I don’t know enough to predict that, so for now I’m assuming emulation is off the table and we need comparable hardware for access to those old games.)

Looking to the future, I have no idea what the giants will do in another ~7 years to try and get us to buy the PS6 and Xbox Whatever. What will motivate us to upgrade? Pay another $400 USD for graphics that are 0.001% more photorealistic? Heck, I’m not even sure what’s supposed to motivate us to make the jump to the PS5. Assuming Google Stadia is wrong and we’re going to continue to do our gaming on boxes, then we’re going to need a really good reason to buy another box.

But PS2 compatibility might be a pretty attractive feature. Sony could ransom our childhood[4] back to us through some sort of subscription service. On one hand I wouldn’t appreciate paying a monthly fee for access to a bunch of games I already own. Then again, this might be the only way these games can live on.

Sure, there are lots of PS2s in circulation now, but the number is going down, and there’s currently no way to reverse that trend. It might take a decade or so, but eventually the machines will become rare enough that it will be hard to introduce the next generation to these old games. Movies get occasional re-releases, but migrating games to the next generation isn’t nearly as easy.

Since writing this article, some information has leaked on the Microsoft side. Microsoft is claiming “ours is bigger”, but the official dick-measuring contest probably won’t start until next year.

Footnotes:

[1] I think that this should be a word. If referral is related to the process of checking references, and funereal is being like a funeral, then something digressional should be related to or seeming like a digression.

[2] A couple, in fact.

[3] As measured by number of released titles, although you could probably make a pretty good case it wins in quality as well.

[4] In my case, more like mid-30s-hood.

Twelve Years

Even allegedly smart people can make life-changing blunders that seem very, very obvious in retrospect.

Quakecon 2011 Keynote Annotated

An interesting but technically dense talk about gaming technology. I translate it for the non-coders.

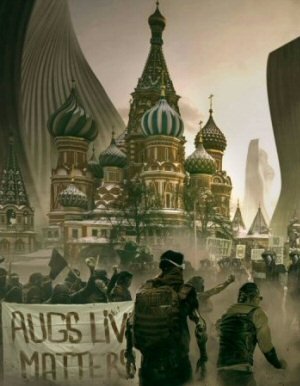

Deus Ex and The Treachery of Labels

Deus Ex Mankind Divided was a clumsy, tone-deaf allegory that thought it was clever, and it managed to annoy people of all political stripes.

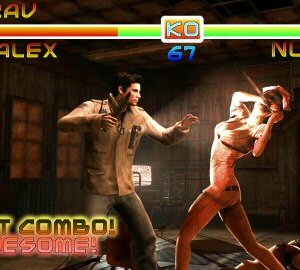

Silent Hill Turbo HD II

I was trying to make fun of how Silent Hill had lost its way but I ended up making fun of fighting games. Whatever.

Rage 2

The game was a dud, and I'm convinced a big part of that is due to the way the game leaned into its story. Its terrible, cringe-inducing story.

T w e n t y S i d e d

T w e n t y S i d e d

Microsoft and Amazon are both working on streaming game services and Apple has announced theirs as well.

Streaming games is likely to be the future.

I’d say it’s more that Microsoft and others believe that the future of gaming lies in streaming. They may well be right, though I think that high speed internet access will have to be dirt cheap and available everywhere before that can actually happen. But gaming companies have been wrong about the future of gaming before. When CD-ROMs were new, a whole lot of people (who probably should have known better) were convinced–absolutely convinced–that interactive movies were the future of gaming. That obviously didn’t happen. FMV games are vanishingly rare these days.

Your reference to CD-ROM games doesn’t really apply here.

No one is suggesting there’s going to be a brand-new genre of games that are streaming-only. It’s just going to be the same games as now, but delivered streaming, although obviously this is less palatable for single player games or simple puzzle games that can run on cheap basic hardware and so there’s no console cost saving in streaming them from a data centre.

Streaming services will never, as long as the laws of physics work the way we think they do now, be a viable alternative to local processing in a number of use cases, but the real issue is that it’s obviously a fad just like FMV games were, and MMOs were, and VR was supposed to be. They’re not gone exactly, but hindsight has shown us that these things were niche products at best.

So if Google Stadia can serve games to you with 10-15ms ping, you think that is going to make input lag unacceptable? Even if they optimise every aspect of the service to ensure the least input lag they possibly can?

Google might be able to serve a lot of people games with only 10-15ms of added latency, but I doubt they’re going to be building data centers in every state, nor do they seem to be keen on rolling out Google Fiber to every household in the world. So while it may be a perfectly reasonable substitute to owning your own box in LA, New York, or Chicago, it probably isn’t ever going to be so for someone in Billings, DeLand, or Nansana.

Assuming that hypothetical, which is highly unlikely in real world scenarios…for some games? Absolutely it’s unacceptable. Ensuring the display is configured correctly alone can make a world of difference for how well some games play, e.g. Devil May Cry. Right now games are increasingly targeting 60hz refresh but it’s clear that the older 85hz standard was better for certain kinds of games like flight sims and first person shooters, and displays that go well above 60hz max are again becoming popular for that reason. Once we start talking those kinds of performance problems, the solutions in a streaming environment are downright ghastly.
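To put rough numbers on the refresh-rate point, here is a minimal sketch that converts an added delay into "frames of lag" at a given refresh rate. It assumes the 10-15ms figure is pure added delay on top of whatever the display and controller already contribute, and the function names are made up for illustration.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Time budget for one displayed frame, in milliseconds."""
    return 1000.0 / refresh_hz


def added_latency_in_frames(latency_ms: float, refresh_hz: float) -> float:
    """How many frames' worth of delay a given added latency represents."""
    return latency_ms / frame_time_ms(refresh_hz)


if __name__ == "__main__":
    # Assumption: the only delay considered is the added network latency.
    for hz in (60, 85):
        budget = frame_time_ms(hz)
        for ping_ms in (10, 15):
            frames = added_latency_in_frames(ping_ms, hz)
            print(f"{hz} Hz: one frame = {budget:.2f} ms, "
                  f"{ping_ms} ms added = {frames:.2f} frames")
```

Under those assumptions, 10-15ms works out to a bit under one frame at 60hz, and to roughly one frame or more at 85hz, which is why the same added delay hurts more the higher the refresh rate you are targeting.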

To be sure the ineluctable latency issue is far from the whole of my concern with streaming systems.

Anyone who cares about 60hz refresh vs 85hz refresh for a first person shooter is likely to be on a PC and this streaming solution isn’t really targeting them. They’re also likely to be pro-level or at least enthusiast, and so would make up 1-2% of the potential market.

Also, if the game you want to play is only offered on a streaming service, then you’ll just have to deal with it.

First, you’ve effectively abandoned your original argument, and are accidentally giving good reasons why it wasn’t a very sound argument in the first place. Second, I don’t have to deal with it. I’m not obligated to give anyone my money if they won’t take it on terms acceptable to me. If they won’t sell me goods I want to buy, I simply won’t buy them and will do without. Other people? Well, as Gabe Newell once said, piracy is a service problem, and making a game people actually want to play exclusive to some subpar-at-best closed garden with no ownership is practically the definition of a service problem, and one that might end up getting tag-teamed by legislation on one side and Supreme Court rulings on the other.

There is a niche for games streaming, much like there is for leasing cars. Both markets look like fools with too much money and too little common sense for their own good, and the games streamer market is concentrated around people who are practically walking distance away from the nearest data center.

It super does matter if it cuts a huge chunk of the market out from underneath them…

“Also, if the game you want to play is only offered on a streaming service, then you’ll just have to deal with it.”

And ya know what, that’s fine too. Keep em all, I’m real tired of this crap already.

I have no doubt streaming games is a future, but I severely doubt it is the future.

I mean, look at the US alone. Outside the big cities freaking dial-up is still pretty dang common. And due to the poor anti-monopoly enforcement, you typically get one internet provider per area that charges whatever they wish for how little they happen to care to provide.

And that’s just some of the practical obstacles before you even start playing the games. Heck, I remember Game Tap. Being kicked out of your single player puzzle game because your brother just started downloading something, and the ping got too much? That was infuriating, even for the free version. If I’d paid for it? Well, frankly, I wouldn’t have kept doing that.

So… yeah. The old people, whose gaming consists of the odd puzzle game and such? I can see that happening. A way for them to get some more snazzy colors and effects in Candy Crush 40000, or whatever, without needing to upgrade their gear.

…But gamers? Can’t see it happening outside a novelty, or if there’s this one platform killer-app you feel you need to try. Not when you suddenly have to deal with ping and latency in ALL your games.

In the grim dark future of Candy Crush 40k. . .

Let’s ~~kill~~crush sum humiez, boyz! Waaagh!

Every time you create a combo, you get a WAAAGH. The number of ‘A’s in your WAAAGH corresponds to the points the combo nets you.

Compete to make the biggest single WAAAAAAGH you can!

…This game sounds like an awesome variation on Match-3! Quick, someone make it!

Wow, you’re kind of suggesting a future version of Newgrounds, where everything can be streamed instead of using flash.

Given how much time I personally spent playing flash games, I’d say there’s potential there.

Now that you mention it… Yeah, I could see that work. Like an indie-game-focused Steam, but financed via ads, or something like that.

Think we’re half to a full decade until the tech is that cheap and sturdy, however.

Was more thinking the current app store model writ a bit larger myself. A tiny client you download for your thing, but most of the hard number crunching happens server side. Might just be how Newgrounds or Albino Blacksheep reinvents themselves in a decade or two, though, once the tech is boiled down to ‘works in any browser.’

The US actually has terrible internet by first world standards, so it’s easy for Americans to be confused by this proposal for streaming games over the internet and think that it is going to fail.

I think people are looking at the wrong benchmark. The real question is: how much better is the internet today than it was 5 years ago? 10 years ago? Is it realistic to think that it will be good enough by 2025? 2030?

Outside of America, internet access and capability has rapidly improved in the last 10 years.

Just look at the videos uploaded to YouTube in 2009 vs today.

Absolutely, I agree with this. If by “the future” you mean “what these companies will try and fail to force their consumers to use”. No one can see the future and no one can truly steer the future. Not even with monopolies and spy networks.

To have actual success, you need to provide what the customers want. Unless a majority of customers want streaming games, no streaming service will succeed. Hoping and praying that this time the mythical streaming service customers will appear, will not result in streaming becoming “the future”.

I believe that a majority of customers will want a streaming games future, where they only pay $50-100 for the hardware initially, can play the same game on their phone, bargain-basement PC and TV, portability of saved games and all of the other features that Google have announced, and it costs maybe $10-15 per month.

It would not surprise me if the future of *console* gaming shifts to streaming. It will give the console publishers a lot of control over DRM. And it would reduce the cost of manufacturing consoles – swapping a lower up-front cost (for the hardware) for a greater monthly subscription (which console users are already used to).

Yes, this would likely leave the people outside of dense urban areas out of luck – but we’re a minority already. And it’s not like MS hasn’t already tried ‘always online’. The backlash in 2013 was great because then a large minority couldn’t be ‘always online’ reliably. There are few places in the US and darn near nowhere in Europe and Asia where that’s the case anymore.

If this happened, it’s likely it would force ‘AAA’ gaming into streaming (they’re not going to develop an offline version for PC) so that’s a little disconcerting.

Totally agree.

There is a vast gulf of difference between “always online” and “capable of streaming 1080p60 video in real time and with low enough latency for remote twitch controls”.

That statement was made in the context that “always online” in 2013 was a bit of a stretch, but that it isn’t a stretch now at all for Europe and Asia and the majority of the US.

It will be precisely the same for streaming games – it might be a stretch for many places today, but 6 years from now (same time gap as 2013 to 2019) it won’t be a stretch at all.

That’s why streaming games is the future – and when I say that I don’t mean “tomorrow”, I mean 5 years from now – like how Blockbuster doesn’t exist as a major brand and Netflix and others are now killing off cable TV.

You obviously don’t live here. The US, because of a bunch of short sighted greedmongers, deliberately sabotaged their infrastructure so that Comcast and the like could continue their monopolies.

The US is 3 DECADES behind everyone else, and none of the corporations responsible for fixing it are going to want to pony up the dough to close the gap.

It’s still not a viable future until internet access is treated like a utility and the whole nation is brought up to a reasonable standard.

To pretend otherwise is foolishness.

1. We’re not three decades behind everyone else. Three decades ago there was no internet.

2. ‘Slower than Europe or Asia’ does not mean ‘too slow to do anything’.

I have a modem from the late 80s. There was internet, nothing like today. However we are pretty damn close to what the internet was about a decade ago. My first DOCSIS 3 modem, I set it up to be able to set my own speed cap. At first I just unlocked it to what that modem was capable of, got around 60-80Mbps during day, upward to 300Mbps at night. Comcast figured it out, gave me a slap on the wrist and told me not to do it again. I still unlocked it at night but left their cap on during the day, never got a complaint from them again. Fast forward to today, you can purchase 60-80Mbps for residential use, higher for business use (last place I worked clocked in at 200Mbps). The modems while looking nicer and having better LAN side connections are very similar on the WAN side, slight update to the DOCSIS spec but generally the same hardware capabilities. All they have really done is relax the speed cap.

The upgrade to DOCSIS 3, which would be around 2007-2008 is the last major update to our internet infrastructure we have seen. That’s pretty old by modern standards.

The technology, if capable of at least 50 Mbps, isn’t really the problem.

It’s the distribution and how widespread the access is, and then secondarily how well it is provisioned to deal with saturation.

I suppose that, technically, that’s true but from a practical standpoint we’re already there for basically everyone who isn’t on a rural ISP.

Well, to start with, this is for consoles – 1080p/*30FPS* is considered acceptable by console players today. That’s what? 44ish Mbps? And 1080p/60 video is what, 66 Mbps? I could get a 60Mbps service if I lived in the nearby small town of 15,000 people. 100Mbps or more is pretty normal in cities today.
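The Mbps figures in this comment are guesses, and real stream bitrates depend heavily on the codec and quality settings. As a rough sanity check, this sketch computes the uncompressed bitrate of a 1080p stream and the compression ratio a given stream bitrate would imply. It assumes 24 bits per pixel with no chroma subsampling, and the function names are hypothetical.

```python
def raw_bitrate_mbps(width: int, height: int, fps: int,
                     bits_per_pixel: int = 24) -> float:
    """Uncompressed video bitrate in megabits per second (1 Mb = 1,000,000 bits)."""
    return width * height * bits_per_pixel * fps / 1_000_000


def implied_compression_ratio(raw_mbps: float, stream_mbps: float) -> float:
    """How much smaller than raw the stream must be to fit in stream_mbps."""
    return raw_mbps / stream_mbps


if __name__ == "__main__":
    # The 44 and 66 Mbps values are the guesses from the comment, not measurements.
    for fps, guessed_mbps in ((30, 44), (60, 66)):
        raw = raw_bitrate_mbps(1920, 1080, fps)
        ratio = implied_compression_ratio(raw, guessed_mbps)
        print(f"1080p/{fps}: raw = {raw:.0f} Mbps, "
              f"{guessed_mbps} Mbps stream implies about {ratio:.0f}:1 compression")
```

The takeaway is that nobody streams raw video: uncompressed 1080p/30 is on the order of 1.5 Gbps under these assumptions, so the bandwidth you actually need is set almost entirely by how hard the encoder compresses, which in turn trades off against image quality and encoding delay.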

As for latency – console players are already used to having high latency between having to game on their tv combined with being forced to use the console manufacturer’s dedicated server network. It’s one of the reasons ‘auto-aim’ is standard for them.

So, it might not be quite ready-for-primetime *today*, but in a couple years? Or when the next console generation drops in another 10? Yeah, it’ll be pretty standard. It’s why Google is dropping Stadia now – they genuinely expect it to be a viable option to ‘building a gaming rig’ within less than a decade.

This seems similar to Amazon Prime Air to me, the drone-delivery system. It was first announced in October 2013 and at the time everyone seemed pretty incredulous about it (I certainly was).

If that announcement hadn’t been made then, but was made last year, I don’t think people would have batted an eyelid about it – yes, it’s a little far-out (after all it’s still not available on a commercial scale – although trials are under way), but not really that crazy given the pace of development in drone technology as well as autonomous navigation and the pervasiveness of online ordering and delivery.

It seems some people today are reacting to Stadia and streaming services with the same sort of incredulity that met Prime Air back in 2013, but in 3-4 years time I don’t think such an announcement would seem out of the ordinary at all, but rather fairly pedestrian.

OnLive were way ahead of their time. I think Stadia and its ilk are striking at just the right time – just when the technology is starting to become feasible and internet access wide-spread enough to a good enough standard to be (eventually) a viable profitable service.

Transgress > Transgressive Y / Transgressional N

Congress > Congressive N / Congressional Y

Digress > Digressive Y / Digressional ..?

Gotta love English. I once got roundly mocked at school for an on-the-fly conjugation of ‘humble’ to ‘humblicity’.

The only place I’ve ever seen the word Congressional used is in the name of the Congressional Budget Office, purveyors of non-partisan budget analysis and projections since 1970-something.

Oh, I’m almost certain it’s an Americanism. But it’s all English nonetheless.

Like “catholic”, “congress” was originally a more generic word that’s now become associated with a single institution–in the English language, that is.

You’ve never heard of congressional hearings? Although if you’re wise and avoid politics that would make sense.

I have, I suppose, though they aren’t usually referred to that way. They’re usually referred to by the name of the committee holding the hearings.

Humblicity has perfect cromulation.

(And google spell checker doesn’t even blink at either. Gotta love it.)

Transgress: Verb.

Digress: Verb.

Congress: Noun.

There’s a consistency in this case. Although English is a goddamn mess.

Part of the reason the first and second playstations were so good was the number of interesting lower-budget games from smaller developers.

My excitement about raytracing comes mostly from thinking about what indie devs will be able to do with it, since a game with graphics like the old Quakes/Unreals is easier to develop. A technique that easily improves that kind of graphics might be really useful for smaller devs.

Things that allow one to make more with less frequently have their most interesting uses in the hands of those with fewer resources.

So a raytracing console combined with policies that foster indie games might bring back a bit of that old glory to the PS5.

This!

The problem with the current pipeline that gets us to 95% of the same quality as raytracing is that it requires a lot of additional content. All of those environment maps and shadow volumes and reticulated splines require a lot of additional artist-hours that end up costing a lot of money.

The challenge with PC titles during the transition period is that you’ll still have to produce content for both pipelines, since there are a mix of graphics cards in the target demographic. If on the other hand you can be guaranteed that every PS5 will have raytracing hardware you don’t have to spend time and money on the traditional pipeline. This should drive down project costs and hopefully opens up the possibility of Morrowind / KoToR sized games to be made again.

I’m not sure I agree with that. The really expensive part of the art budget is surely animation. Also the most exponentially expensive part, because slight improvements in animation quality make everything that came before look bad in a way that slight improvements in, say, texture quality don’t.

I think simpler animations look better with simpler graphics, the same way some low-poly models often look better with stylised textures than with realistic ones.

Also a lot of animations for common actions can be obtained cheaply/free, and both the Unreal and Unity engines have retargeting for different models.

Smaller budget animations still won’t be as good as AAA ones, but they can be good enough.

Plus if the devs don’t have to spend as many resources on textures/maps/models, etc., they can redirect those freed resources to other aspects like animation.

A question for those with more programming knowledge, so ray tracing from my limited understanding will allow devs to replace lots of lines of code and makeshift hacks for lighting with one single universal program. Will this free things up because of all of that junk being replaced with elegant code or no difference because that code will require lots and lots of CPU time?

It’s a valid concern but then again what prompted us to upgrade to the current PS4/Xbone? I mean there’s really only the Shadow of Middle-Earth series that *needs* to be this gen, maybe with say Horizon: Zero Dawn or RDR II it makes it easier to have their super complex enemies/bounty system but there were probably workarounds

*shrugs*

The FIFA and Madden fans will upgrade for more realistic sweat and more current rosters and I’ll follow suit as an idiot when a back catalogue is built up

The upgrade prompt comes from the fact that the console developers only release games on the “current” consoles, and there are exclusives and “exclusives” held ransom, same as it always has. I intend to get a Switch because Nintendo has enough 1st party exclusives I want to play that I’m willing to pay for it when I can, but otherwise there hasn’t been a good reason to use a console over PC ever since you could buy a graphics card for the PC for less than the console (assuming you used Windows). Which was a good 5-10 years ago.

Yeah, that seems like the obvious answer. “What will motivate us to upgrade?” Publishers are just going to stop producing games for the PS4 at some point, so if you want to play new games on console, you have to buy one of the new consoles. For most people that’s really the only motivation that matters, right?

I guess in theory, if enough people decided not to buy a new console, then publishers would keep releasing games for the current generation? But the companies that sell the consoles are also publishing a lot of the games, and they’re obviously going to do whatever they can to get people to buy their new consoles. And the big companies that sell the most console games always seem so keen on making games that only run on newer, bigger machines. How badly would sales of the PS5 have to be before the industry gave up and just kept making games that run on PS4?

The PC is probably the better option for the user comfortable with regularly upgrading video cards and navigating all the storefront/authentication services (Steam, Uplay, Origin, GOG, etc.) and dealing with generally more buggy new releases. But I wouldn’t discount the convenience of consoles for people who don’t want to deal with all that and just want to install a game and play (even if modern console games still have to download massive files and patches these days) with a single piece of hardware that works with your existing TV and can also double as your Blu-Ray/DVD/video streaming box.

Like, unless you’re committed to being on the cutting edge of gaming graphics and system performance, or engage in audio or video/graphics production of some kind, the average person’s computing needs are probably met by a Chromebook or their smartphone. Then they get a console to play games and otherwise serve as their living room entertainment centre and they’re satisfied for the next 5 years or whenever the next console comes out.

My understanding is that the difference for coders would be marginal. Most of the benefit would be in not needing to do all the extra art bits that games have been doing to emulate real lighting. Not even sure how much benefit there’d be for AAA games looking to do the best possible graphics they can. Likely too they’d need to do that extra work anyway for those customers without the fancy new raytracing hardware.

Either way the code I don’t think will do much for the CPU time. Both sides are mostly done on the GPU side.

Not really. Lighting and texturing shaders are only tens to hundreds of lines of code. You can make a shadow map with about five lines and read it back with another five (twenty if you’re getting really fancy)

The really complicated part in realtime rendering is working out what you don’t need to draw, and that’s only made more complex with each light bounce. That’s why I think Quake 2 was chosen for the most fully featured raytracing renderer to date – the BSP map structure makes it easy to work out which bits to draw.

Shouldn’t raytracing tell you what you need to draw? If a ray hits a surface, draw it. Done.

Not if you’re doing interactive realtime 3D. You’re going to want to cull down to a list of surfaces that will (probably) be relevant to the player to test against, because testing ray intersections gets really expensive. For global illumination you’re going to have to do ray marching to find out if, for example, there’s an area of shadow between the player and the surface. If some of those surfaces (or shadows) are animated / player controlled things get really complicated.
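As a toy illustration of why culling matters here, the sketch below tests rays against spheres by brute force and shows how trimming the candidate list first cuts the number of intersection tests. It is a deliberately simplified stand-in: real renderers use structures like BSP trees or bounding volume hierarchies rather than a plain distance check, and every name in it is hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sphere:
    center: Vec3
    radius: float


def ray_hits_sphere(origin: Vec3, direction: Vec3, sphere: Sphere) -> bool:
    """True if the ray origin + t*direction (t >= 0) touches the sphere.

    Assumes `direction` is unit length, which reduces the quadratic to
    t^2 + 2*b*t + c = 0.
    """
    oc = tuple(o - c for o, c in zip(origin, sphere.center))
    b = sum(d * x for d, x in zip(direction, oc))
    c = sum(x * x for x in oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0:
        return False  # the ray's line misses the sphere entirely
    # A hit counts only if the far intersection is in front of the origin.
    return -b + math.sqrt(disc) >= 0


def cull_by_distance(spheres: List[Sphere], camera: Vec3,
                     max_distance: float) -> List[Sphere]:
    """Keep only spheres whose nearest point is within max_distance of the camera."""
    return [s for s in spheres
            if math.dist(s.center, camera) - s.radius <= max_distance]


if __name__ == "__main__":
    camera = (0.0, 0.0, 0.0)
    # A long row of spheres marching away from the camera (made-up scene).
    scene = [Sphere((0.0, 0.0, 5.0 * i), 1.0) for i in range(1, 1001)]
    nearby = cull_by_distance(scene, camera, max_distance=100.0)
    rays_per_frame = 1920 * 1080  # one primary ray per pixel, no bounces
    print("tests without culling:", rays_per_frame * len(scene))
    print("tests with culling:   ", rays_per_frame * len(nearby))
```

Even in this simple case the cost is rays multiplied by candidate surfaces, and every extra bounce adds another round of rays, so shrinking the candidate list is where most of the effort goes. The catch is the one described above: cull too aggressively and things that should be visible in a reflection pop in and out.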

For example, Battlefield 5 is pretty aggressive about culling geometry in its raytraced reflections, such that you can frequently see objects popping in and out.

“I mean there’s really only the Shadow of Middle-Earth series that *needs* to be this gen, maybe with say Horizon: Zero Dawn or RDR II it makes it easier to have their super complex enemies/bounty system but there were probably workarounds”

As someone who’s been a game dev for 11 years, I can tell you the current gen has enabled a lot of smaller developers to make a lot of higher quality work a lot cheaper and easier.

Having an order of magnitude more RAM meant significantly less time budgeting resources and setting up memory budgets and juggling what can and can’t make it into the game.

We still hit the limits, but instead of struggling to find a spare MB, most of the time you can rustle up 100MB unless you’re working with the biggest companies that have managed to cram every little thing they can in.

On the current generation getting our games to run at 1080p, 60fps on PS4 was achievable but hard, next gen that should be a baseline every single company should be able to hit without spending any time on it.

Less time spent on performance, means more time spent making the games fun.

The fanciest PS3 games looked as good as a good PS4 game, but the standard PS4 game looks far better than the standard PS3 game. And so it will be with the next gen.

As for the hardware raytracing, I don’t know what the API looks like but it should basically be fewer lines of less buggy code rather than everyone implementing their own and spending lots of time debugging it.

The main thing you’ll see though is because it’ll perform significantly better, all the big engines will just support it so every dev making a Unity or Unreal game will just tick a box and have fancy raytracing enabled without any fuss.

I REALLY wish they’d do PS2 backwards compatibility. I still have both my PS3 and PS2 standing around at home in addition to my PS4, but for PS3 I think pretty much anything I care about has gotten a remaster or re-release or something. There’s still tons of games available only for PS2 though, so it’d be nice if (when the console is released) I could replace three clunky boxes with just one.

Looking at the PlayStation Store seems to suggest Sony are happier re-selling you PS2 games than letting you play the copies you already own. It’s a slightly double-edged sword in that the re-releases you can download on PS4 come with trophies and actually do run in full HD, which is good, but you’re paying for something that already exists and relying on publishers to give a crap about bringing the titles to the platform, which is.. less than good. These versions don’t exactly receive a remastering treatment outside of bumping the native resolution, but it’d probably still take a little while to add the hooks for the trophy unlocks and get it up and running on the new hardware, so there’s some investment required.

I haven’t tried many of them myself, Destroy All Humans 1 & 2 were my only experiences. The first ran pretty great and was a treat to go back to, the second ran horrifically (and was just kind of a bad game) but I’m wondering if the original had similar framerate issues since I never played the second one back in the PS2 era. There’s also some confusion in that there are essentially two different PS2 catalogues on the PS Store, some titles run in full HD with trophies and some are just straight ports that run in software emulation, and it’s sometimes not immediately apparent which variety you’re looking at.

By all accounts, Sony has a fully functional software emulator for PS2 titles and it’s what is used by the more “budget range” re-releases of PS2 games that don’t feature trophies. It was included in the second wave of PS3s after they removed hardware emulation but before the slimline model came out and ditched the whole concept. It would be a hell of a big deal if Sony just essentially “unlocked” this functionality for PS5, but it’s arguable they’d be leaving money on the table by doing so (all those re-releases…), and it’s unclear whether there’d be enough goodwill / sales generated by including backwards compatibility where you can just insert the disc and play. I mean, fingers crossed.

Personally I’m hoping they let us play PSone discs again, even those were still compatible on the last versions of PS3. I think they left it off from PS4 because they didn’t bother with licensing CD technology for it, can’t even play a regular album. Sony must have found some loophole in the Blu Ray Disc Association’s regulations considering all players are meant to support CD playback. I guess as a founding member they can do what they want.

I really have stopped caring about backwards compatibility on consoles. I just go with emulation. Cemu gives me full Wii U access (Zelda-BotW at 60 Hz and 1080p looks and plays incredibly well), I have a PS2 emulator that works good enough, and on modern hardware performance is just not a problem any more. Yeah the PS3 emulation isn’t really there yet, but that console has so few exclusive games it barely matters.

With how few exclusive titles that are truly worth playing there are (about five per console if I’m being generous), how obnoxious updating and DRM procedures on consoles are, buying a console really makes no sense right now, certainly not an XBone or Playstation.

As for the PS5: Sony is trying to sell us an SSD as a brilliant innovation. Dear Sony, I’ve been using SSDs on all my PCs for a decade! And I’ll eat a broom if the drive they put into the PS5 will be anything like a high-end NVMe with 2TB+.

Yeah, trying to awe with an SSD is… It speaks to the perceived console market, actually. They probably expect a large portion of the audience to not realize the absurdity of putting a standard hard drive in the new console. It’s possible that at the time of the PS3 SSDs were more expensive, but that doesn’t mean they get a ton of brownie points now.

SSDs were only just starting to come on the market back in like 2005 when the PS3 was released, and even at the time of PS4’s unveiling they were still wildly expensive for anything decent sized. I remember buying a terabyte SSD for nearly £200 in 2013, you won’t find components that expensive in mass produced console hardware.

I think this is a massively exciting development for the next generation. Games are designed for the largest possible audience and that’s console users. Having much faster read/write speeds will have a big impact on the kind of experiences we’ll see and be a huge quality of life improvement over what we have now, where loading into a game even on PS4 Pro can still take a couple of minutes and just getting to the console’s dashboard on startup is infuriatingly slow.

Shamus: Fanboy or Hater?

I think we all know the truth:

Fan-Hater.

Well, fans are the worst.

With consoles, it seems to me that they don’t have to offer significant hardware improvements to get people to buy. They just have to stop releasing games for the old system.

I’m surprised you didn’t bring up the implications this all has for PSVR. A lot of people latched onto Cerny’s statement about 8K, which is probably upscaling, but I imagine the PS5’s specs are to provide a better, more competitive VR environment first and foremost. What I personally am hoping is that this results in a system capable of better performance. I don’t care about 4K, I care about 60fps, and so many (particularly Western) developers insist on pushing that resolution while locking at 30fps. Meanwhile, Resident Evil 2 and Devil May Cry 5 are looking gorgeous and running at 60fps.

Largely because whoever is building Capcom’s engines is a far greater talent. MT Framework was one of the best engines of last generation, and RE Engine is pulling out some major surprises on PS4 Original.

Nevertheless, reading about Microsoft’s plans has me groaning. You’d think they would have learned not to launch with “the casual and the hardcore” platform, as even with the Xbox 360 no one wanted that divide. I was hoping we were done with that sort of thing, but it would seem not. Similarly, Xbox One was also more powerful than PS4, and the Xbox One X moreso. Look at the marketshare. It didn’t matter.

Microsoft is clearly hoping the smaller studios they’ve recently absorbed can create new IP capable of combating Sony’s exclusives, all chasing the same tone/style of “look at us, we’re so prestigious and meaningful” story-telling. Of those acquisitions, Ninja Theory may be in the best position to provide. Want a competitor to God of War? Boy howdy are they the ones to make it. inXile was a bit of a shocker, with Obsidian being less so, but given both studios are known for narrative titles it’s easy to see why Microsoft would want to combat Sony’s hold on Death Stranding and Naughty Dog.

The problem is, whatever executive team was in charge of ruining Lionhead Studios and completely demoralizing Hideki Kamiya and canceling Scalebound, they need to be gone. You need people handling these studio acquisitions with patience, and my biggest worry is that they’re going to be pressured and rushed into releasing games ready for the new Xbox’s first year (which I’m predicting will be 2020).

Sony put out the feelers first, and I’d say they’re already getting a positive, excited response. I also imagine they’re waiting for Microsoft to unveil their new Xbox at this year’s E3 before they start finalizing the PS5’s behavior. This was their strategy regarding DRM policies, it turns out, with the PS4. They saw the negative reaction the Xbox One had and decided to remove all of that on their current system, all while pushing for Digital so that physical DRM would be more and more meaningless.

Which brings me to my concern: Cerny’s phrasing makes it sound like the SSD is specifically optimized for the PS5. If this is the case, does that mean you have to purchase Sony-branded PS5 Solid-State Drives? Will off-the-shelf SSDs not be suitable? Right now you can upgrade your PS4 with any old hard drive, up to 2TB. But Cerny spends time making it sound like this isn’t just any Solid-State Drive, and therefore it has me concerned we’ll be right back where we were with the Xbox 360 hard drive issues. SSDs are already expensive, and the PS4 maxes out at 2TB, which isn’t enough to sustain all the potential game purchases one can make throughout a console’s life cycle. Given the next generation has a hard on for 4K, game sizes are bound to only get bigger. Even if you buy physical, you still need to install the game’s data onto the hard drive. So what’s the default hard drive size, what’s the max permitted, and how much is it going to cost?

That’s my greatest concern.

I do, however, hope there will also be PS3 backwards compatibility, but largely because my PS3 seems to have bit the dust and I would really like to play some of those games again…

Microsoft can be patient. They were near-endlessly patient with Chris Roberts during the development of Freelancer, for all the good that did them. They had to boot him before they finally got a shippable (if not necessarily finished) game. Patience is overrated. What Microsoft needs to do is keep their developers focused and on-task–they really don’t or shouldn’t want their own Andromeda or Anthem–while at the same time not interfering too much in the creative process. It’s a tricky proposition, I admit.

I know nothing of Ninja Theory. What games have they made? I’m familiar with inXile and Obsidian, but I’m not sure what Microsoft hope to get from them. Both studios are capable of making competent RPGs, but I suspect that they were acquired mostly because they were for sale, cheap. I don’t think that either Bard’s Tale IV or Tyranny were big successes and Bard’s Tale at least was or is regarded as weird and buggy.

Ninja Theory were behind the recent indie success Hellblade: Senua’s Sacrifice, which was an interesting mix of emotional psychological drama and hack & slash gameplay set amongst Norse mythology. They’ve got a fair bit of pedigree combining above average storytelling with deep(ish) combat, and their games (barring the Devil May Cry reboot) have been largely popular.

It was a bit of a controversial move when Microsoft acquired them considering Ninja Theory were held up as the way forward for “AA” level independent game studios. Hellblade was largely self-financed and a really carefully managed production, one that was very transparent from concept to completion about its development process. Now that the evil empire has absorbed them, a few people, myself included, are a little worried about what’s going to happen and whether that ethos will go away. We’ll probably get something like Enslaved: Odyssey to the West again, an overproduced thing going for mass appeal that tries too hard to ape other games, instead of playing to the company’s strengths.

The huge advantage that InXile and Obsidian have is that the principals can walk away, start their own studio, and crowdfund millions of dollars based on “We’re going to do another thing”.

Those individuals retain that property even if they push out shovelware for Microsoft for a decade. If Brian Fargo says that he quit Microsoft to do his own thing again, he’s got at least one full normal game of runway, if not a Star Citizen of money available on his word.

Yes, I did just use ‘Star Citizen’ as a unit of money.

Well, yes, but what does Microsoft get? That’s what I’m trying to figure out here. Both inXile and Obsidian have track records that are, shall we say, mixed at best.

Really! After the Torment: Tides of Numenera debacle, some people may be more leery giving Fargo money.

True, I should have specified. I actually agree that it’s a fine line to cross, as management does need to make sure the creatives are kept on track. The problem with Microsoft was their efforts to force companies into making games that fit a broad and “popular” mold, rather than letting the devs make something they were good at. Heck, the problem with the multiplayer Fable game was how the execs themselves couldn’t make up their minds what they wanted that game to be, constantly forcing Lionhead to change it.

Publishers and executives aren’t always inherently bad. They can do well and recognize real talent. The problem is that, right now, Microsoft has a bad track record.

Nope. Of the two, PS4 has the more powerful GPU.

Really? I could have sworn when the two released Xbox One was perceived as having the edge over PS4.

Xbox One X is most certainly the most powerful console currently on the market, at least, but at the price they placed it, marketshare hasn’t really boosted much. Maybe I need to watch more Digital Foundry, though (in the end, the specs are so similar that it doesn’t really matter to most players anyway).

The PS4 was definitely perceived as more powerful, and that was a disadvantage to Microsoft. That’s why the Xbox One xboX X was so powerful, to try and shift some of that narrative back to Microsoft.

So being someone who only plays on PC I have to question how many people who are the primary market for consoles actually pay attention to specs? Like maybe they acknowledge the “mine is bigger” argument on some level but I’ve always gotten the feeling when the conversation came up that the two primary factors for console buyers were price and exclusives. I was under the impression the majority of people who are primarily console gamers do so largely to not have to deal with specs.

Price, exclusives and what console do my friends use.

It’s going to vary from person to person. Specs are just one element of determining which platform you get, and for some folks they make all the difference. While the console wars aren’t as prevalent as they used to be, there are still people taking note of what has more power. The problem is, the difference in power itself is very minimal and when you look at the systems side-by-side most people won’t know the difference.

As such, it then comes down to console features, exclusives, and price. Personally, being a Nintendo fan, it’s clear that I care more about the games themselves than the specs of the system. However, a lot of hardcore gamers still want those realistic graphics with hi-res textures. There’s a weird divide in console gamers that would take graphical hits if it meant being able to play a game at 60 FPS, preferring performance over resolution. There are others that feel the opposite, but they usually don’t play action games like, say, Devil May Cry 5. At the same time, you’ll find a lot of fans of said action games also absolutely hate Unreal Engine 3 due to becoming so prevalent and yet worse looking and worse performing than Capcom’s own MT Framework, which made Resident Evil Revelations and Monster Hunter look shockingly stunning on a Nintendo 3DS and had Devil May Cry 4, Lost Planet, and Resident Evil 6 running better than any Unreal engine game on Xbox 360 and PS3 hardware (while still looking absolutely beautiful). It’s no surprise that Capcom was able to get RE2 and DMC5 running 60fps with gorgeous graphics on even the original PS4 hardware rather than the Pro (though it certainly kicks the fans on, lemme tell you).

But that’s where things continue to get muddled. I feel like the limited specs of consoles communicate moreso what engines and developers are actual engineers and who else relies on powerful hardware to get things done. id Tech tend to do really well for Western developers, and I largely view that as being part of Carmack’s legacy. I’d argue that Unreal Engine was never that well optimized but is popular for being really easy to use.

I’m curious if DICE could put together a decent engine if they made a more all-purpose one rather than a specifically FPS one like Frostbite that has had nothing but disaster stories for the past several years. Anyway, that’s a major digression from the original question, but to link back to why I digressed in the first place, Nintendo as a first-party developer always has lower specs, but almost every first-party game runs at 60fps and looks gorgeous. Breath of the Wild is probably one of the only games that is stuck around 30fps, but I’d chalk that up to it originally being a WiiU game that didn’t have enough time to be optimized for Switch while also being their first game of that breadth. Even so, I’d take it above other open-world titles due to being able to climb anywhere. Horizon: Zero Dawn was prettier, but I hated the fact that I was surrounded by artificial walls that would have been perfectly climbable in Breath of the Wild.

So, really, it all comes down to the games. Nintendo demonstrates that you can use lower specs to create amazing experiences that feel more next-gen than the actual next-gen titles. At the same time, a lot of people are on board with VR, so it is in Sony’s interests to try and push their tech while managing an acceptable price point so they can have VR experiences more comparable with what you can get on PC. Microsoft… who knows? We’ll have to see their plan at E3.

Do we offer spelling corrections here?

should probably be “risk”

And this makes me laugh:

You should know better, Shamus.

I forget what this psychological trick is called, but the idea is if you preemptively give someone credit for something, they’re more likely to do it.

I wouldn’t do it here on the blog where everyone knows me, but in the drive-by world of gaming sites I thought it was worth a shot.

How did it work?

This column got less engagement than anything I’ve written in months. Maybe I should have been attempting to stir controversy rather than avoid it.

Do you mean less engagement on the Escapist? Couldn’t it also be because the audience there doesn’t generally care for more technical topics such as this one?

It’s possible that people just aren’t ready to engage with the idea of a new console generation before the big players have something concrete to put on the table.

There was more appetite for rumour and speculation back in the days of analogue media, where there’d be months-long information droughts between industry events, and the technological leap between generations was more substantive. But now we live in an age where our every expectation is micro-managed on the hour by an unimaginably elaborate public relations apparatus. I think a certain amount of apathy has set in, even among teh hardcorez.

I just don’t think there’s much to engage with yet. It’s a very low-key announcement.

“The upcoming system doesn’t have an official name yet, but everyone is reasonably assuming it will eventually be named the PlayStation 5.”

They’ll call it the Playstation Pentium.

(Still think of the first Mission Impossible movie where Luther gushes over asking for the new prototype 686 processors)

I think the “Microsoft is trying to turn PC gaming into a closed platform” part is severely uninformed.

Don’t get me wrong, it’s completely in line with how Microsoft behaved in the 90s and early 2000s, but now they’re pretty committed to supporting open-source development.

They sponsor Vulkan, WebGL (and everything else Khronos does), and WebAssembly, their latest browser Edge is open-source (and will soon switch to Chromium, Google’s own open-source browser, as a back-end), they recently released a ton of hardware patents for Linux users, and that’s without getting into development tools.

I haven’t used UWP apps, mind you, but I’m very skeptical of anyone claiming Microsoft is aiming to create a walled garden without serious evidence.

https://www.microsoft.com/en-us/windows/s-mode

Still not a walled garden, at least not in the technical sense. S-mode can be turned off, and it doesn’t try to set up a proprietary Microsoft API that developers can’t get the tools for without a license (e.g., like the iPhone does).

Also, there are a few benefits to limiting new users to installing apps through a pre-installed app store. It allows developers to create a more polished experience for computer illiterates, and it’s infinitely safer for companies, who know their employees can only install sandboxed software without having to create a whitelist of authorized apps.

I mean, you can always say that Microsoft is creating S-mode as a ploy to get people more dependent on their platform… but really, that’s just not where the market is anymore (let alone the company culture). For one thing, almost all new apps are developed for the web these days, and there’s not much Microsoft can do to control that. For another, there’s too big a legacy of non-standard software from 10+ years ago that will never be ported to UWP for Microsoft to pull the rug out from under everyone and go “Muahahah, from now on, you can only use S-mode everywhere and forever!”

It’s not a walled garden YET, but that’s definitely the direction Microsoft is trying to push things.

Windows S is basically Microsoft’s attempt to get a piece of that sweet, sweet Chromebook action. I don’t think it’s intended as a mass-market OS.

I wonder why flash drive games never even appeared, let alone became a thing. I feel like it could satisfy everyone – it should be somewhat simple to create some kind of hardware lock as a DRM solution, it’s a physical possession that gives consumers the feeling of “I own that”, with USB 3.0 the interface is fast enough that you could arguably do without installation, and you can style drives in a million ways for special editioning. Maybe I should create a startup, eh?

My guess is the same reason why cartridges died out: the hardware is just too expensive compared to optical discs.

A 32GB USB 3.0 Kingston Flash drive costs about 6 USD on Amazon. I guess that’s a lot compared to a single Blu-ray disc, but that’s consumer cost. A company would get it a lot cheaper. And I just wonder why no one even tried anything like that, I’d do a small-scale trial run at least.

And a 100-pack of blank burnable 25GB Blu-rays costs $44, or less than 50 cents per disc.

Sure, a company buying in bulk could get flash drives cheaper, but they could also get Blu-rays even cheaper. Suppose that the cost for Blu-rays would be something like 25 cents per disc and the cost for flash drives would be something like $3 per drive. This year, Red Dead Redemption 2 sold more than 23 million physical copies. Using the above estimates, using flash drives instead of discs would have reduced the publisher’s revenue from that game by more than 60 million dollars. That’s nothing to sneeze at.
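For anyone who wants to poke at that back-of-the-envelope estimate, here is a quick sketch of the arithmetic. The per-unit costs (25 cents per disc, $3 per drive) and the 23 million copies are the guesses from the comment above, not real manufacturing figures, and the function name is made up for illustration.

```python
def extra_media_cost(copies, disc_cost, drive_cost):
    """Extra manufacturing cost of shipping on flash drives instead of discs."""
    return copies * (drive_cost - disc_cost)

# Assumed figures taken from the estimate above, not real publisher data.
copies = 23_000_000
extra = extra_media_cost(copies, disc_cost=0.25, drive_cost=3.00)
print(f"Extra cost: ${extra:,.0f}")  # Extra cost: $63,250,000
```

Plugging in different per-unit guesses shows how sensitive the total is: at that sales volume every extra dollar per unit is another 23 million.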

You miss the point. It’s not about cost, it’s about ‘performance’. High performance SD cards cost a lot more, and you’d NEED high performance for all the random read/write operations a game or software requires. We’re not talking straight up MB/s speeds here, same as with the IOPS figure for SSDs.

This is exactly the problem Sony had with the Vita. The memcards were insanely expensive in large part because they had to be high performance, assuming you care about your games running smoothly and without constant hitches as the slow as hell card tries to keep up with the console’s demands.
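The point about raw MB/s versus IOPS can be sketched with a little arithmetic. The two cards below are hypothetical, with numbers invented purely to illustrate the gap; they are not measurements of any real product.

```python
def random_read_throughput_mb_s(iops, block_size_kb=4):
    """Effective MB/s when every read is a small random block."""
    return iops * block_size_kb / 1024

# Hypothetical devices: same headline sequential speed, very different IOPS.
cheap_card = {"sequential_mb_s": 90, "iops": 500}
fast_card = {"sequential_mb_s": 90, "iops": 20_000}

for name, card in (("cheap", cheap_card), ("fast", fast_card)):
    effective = random_read_throughput_mb_s(card["iops"])
    print(f"{name}: {card['sequential_mb_s']} MB/s sequential, "
          f"{effective:.1f} MB/s on 4 KB random reads")
```

Under these assumed numbers, both cards advertise the same sequential speed, but the cheap one delivers only about 2 MB/s when a game is pulling lots of small assets from random locations.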

Mark Cerny is really interesting as a developer. It’s not that everything he touches turns to gold or anything, he’s not my favorite director (does anybody care about Knack, baby?), but the devs at Naughty Dog and Insomniac specifically have nothing but kind words for the man as he helped nurture their designers and IPs. It’s part of why they’re so eager to work with Sony. Insomniac has had a major presence at GDC this year, with a whole lot of conferences about Spider-Man. During the one about creative challenges, the game’s creative lead Bryan Intihar talked about how Mark Cerny was one of their big contacts for Sony and helped mentor him into the role. He contributed to the design of the first Ratchet & Clank in a similar way.

As far as reasons to upgrade, it’s always gonna be games(, baby). It doesn’t matter how technically flawed or strong a console is. Not really. It’s all about bringing you the best games you can only, or most conveniently, play on that system.

Precisely this. I didn’t upgrade from PS2 to PS3 or from PS3 to PS4 because I was excited for the (at launch) marginally better graphics, but because I wanted to play the games that would no longer release on my then-current console. Same here, if I have to upgrade to PS5 to play the next God of War or whatever I’ll have no choice.

Microsoft can go on about how fancy their next console is (god those puff pieces from both MS and Sony are so embarrassing, trying to sell their off-the-shelf laptop APUs as “custom” hardware) all they like.

When it comes down to it, the important difference between the PS5 and XXXbox NeXXXt will be that one of them will run Bloodborne and the other won’t.

I’d much rather there weren’t any exclusives, but as long as Sony keeps funding the best, shiniest single player experiences and MS fiddles about with completely anodyne stuff like Crackdown 3, I guess I’ll have to live with it.

HAHAHAHAHAHAHAHAHAHA!

That this would be exciting to anyone is . . . kind of sad, really. It should piss people off.

While the consoles have decent hardware, it’s all locked down. Which is bullshit. The idea that you really can’t just open up an XBOne or PS4 and put in a very basic, damn near PC standard, piece of equipment is ridiculous. If there’s one item of hardware that should be user swappable, it’s your storage device.

I mean, you’re not getting that much of a discount on the hardware – and that discount is expected to be eaten up by the premium clawed back on the games themselves and the monthly console service-fee added on – for it to really be fair to trade free-upgradeability for lower cost.

At this moment, it’s still unclear whether the PS5 storage solution will be upgradable with standard components. Even if not, Sony has allowed additional storage even for systems with built-in, unswappable storage.

I dropped out of the console race when the PS4 came out and got onto Steam instead. Higher spec graphics leave me cold. The PS5 leaves me cold. It’s just a low end PC and I just might already have a much better PC with SSD and all. The Sony exclusives are admittedly good but not crucial enough.

However … I was nearly lured into getting a Switch.

Consoles have always been “just a low end PC”. I never understood why they existed at all. Exclusives are the only thing they offer, and that’s something they offer to publishers, not consumers.

Part of it was probably that even in the PS3 generation almost everything got ported everywhere, so the situation became too stark to be ignored.

Back in the 80’s and 90’s PC hardware could not offer the same gaming experience offered by consoles, since that hardware was dedicated to gaming. Since the 2000’s you’re pretty correct that consoles are low-end computers.

But they work because the majority of the public don’t understand, and don’t want to understand, computers. At least in the 2000’s this was true. It’s much less true this decade and will probably be even less so next decade, substantially contributed to by the fact that the consoles themselves are becoming more like PCs, since they connect to the internet and get updates and such – nothing like the Sega and Nintendo of old where you turn them on and can jump into any game you want in 10 seconds.

I honestly didn’t think we were going to even see another console generation, due to smart phones and streaming games pioneered by OnLive, but looks like there’s at least 1 more generation to go – OnLive did end up failing.

In the ’80’s maybe – certainly PC’s outperformed consoles in the 1990’s. Doom wasn’t going to run on the PS1 or SNES. This was also the time when dedicated GPU’s for PC’s became mainstream.

That’s when we could ‘save everywhere’ to start with – consoles stuck with checkpoint saves for another decade. It wasn’t until around 2010 that having more than one user-controlled save slot became common. Heck, the N64 was still using ROM cartridges.

https://www.lukiegames.com/Doom-Super-Nintendo-SNES.html?msclkid=491f1442abe91afeeb114883e211315d&utm_source=bing&utm_medium=cpc&utm_campaign=Shopping%20Games&utm_term=4577060748929841&utm_content=SNES%20Games

https://www.ebay.com/p/Doom-Sony-PlayStation-1-1995/79224814

Sales of Doom for both SNES and PS 1.

“That’s when we could ‘save everywhere’ to start with – consoles stuck with checkpoint saves for another decade.”

That’s because consoles didn’t have the same storage capabilities that PCs had. They had strengths in other areas, such as dedicated sound and graphics processors that PCs didn’t have. The original Xbox was released in 2001 and was literally an Intel Celeron processor running a cut-down version of Windows – that’s when consoles became low-end computers.

A lot of it also had to do with developers targeting a known, specific fixed specification piece of hardware, and therefore being able to wring out every ounce of processing ability that was possible for their game, so they could do all sorts of visual tricks to simulate 3D and parallax etc (for example). Games for PCs didn’t have the same luxury – you couldn’t make a core part of your game rely on functionality that may not be present on all of your customers’ machines.

Except my PC – and PC’s in general – did have those things. Remember companies like Sound Blaster? Created in 1990?

Are you joking? Did you see Final Fantasy IX? It was made for PSX. Doom (awesome game of course) is nothing compared to what this console was running.

“substantially contributed to by the fact that the consoles themselves are becoming more like PCs, since they connect to the internet and get updates and such – nothing like the Sega and Nintendo of old where you turn them on and can jump into any game you want in 10 seconds.”

True. Tablets and smartphones have taken over that capability, and I see the kids gaming on those all the time. I even do a fair bit of my gaming on my ipad myself these days (with a controller). Mostly limited by the available titles, really. Wow, now I’m getting fired up about the Switch again.

I don’t see a problem with “digressional”, but “referral” isn’t related to the process of checking references. Referral is just the noun form of the verb “refer”. It’s what you receive when you get referred (to something or someone else).

While it is true that the incremental improvements in graphical quality/fidelity/realism/etc are hitting diminishing returns, there is a lot of room to grow still in terms of scene complexity. For example, in Vermintide 2, the console versions spawn enemies in smaller numbers and instead increase their health to keep the overall difficulty roughly comparable, simply because the consoles cannot handle the large hordes that appear in the PC version. Also, stuff like rendering distances, level-of-detail, and managing the risk of pop-in of scenery objects, characters etc are still areas that have lots of room to grow.
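As a rough illustration of that trade-off, here is a minimal sketch of capping a horde while keeping its total health pool the same. It assumes difficulty can be approximated by total enemy health, which is a simplification; the function and its numbers are hypothetical and not how Vermintide 2 actually implements it.

```python
def scale_horde(count, health_per_enemy, max_count):
    """Cap the horde size and raise per-enemy health so total health is unchanged."""
    if count <= max_count:
        return count, health_per_enemy
    total_health = count * health_per_enemy
    return max_count, total_health / max_count

# Hypothetical example: a 100-enemy horde on hardware that can only handle 40.
print(scale_horde(100, 10, 40))  # (40, 25.0)
```

The obvious cost is that fighting 40 tanky enemies feels different from wading through 100 fragile ones, which is why more headroom for scene complexity matters for gameplay and not just for looks.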

This brings us back to this part of the article:

Raytracing has one peculiar advantage over rasterization rendering – it scales better with really large numbers of polygons, and with large numbers of computing cores (in theory, you could make a chip with 1 core for each pixel, and maybe in a few decades GPUs might actually be built like that). Basically, there is a point of scene complexity where raytracing will naturally exhibit better performance than rasterization, and once we have crossed that point, rasterization might eventually disappear altogether. I think AMD and NVidia are preparing for exactly that, and trying to make the road to the eventual transition as smooth as possible.
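To make the "crossover point" idea concrete, here is a toy cost model. It assumes rasterization work grows linearly with the triangle count while ray tracing does one ray per pixel through a tree whose depth grows with the logarithm of the triangle count. Those are textbook simplifications; the unit costs are invented, and real renderers use culling, level-of-detail, and many other tricks that this ignores.

```python
import math

def raster_cost(triangles, cost_per_triangle=1.0):
    """Toy model: rasterization touches every triangle once."""
    return triangles * cost_per_triangle

def raytrace_cost(triangles, pixels, cost_per_step=1.0):
    """Toy model: one ray per pixel, each walking a tree of depth ~log2(triangles)."""
    return pixels * math.log2(triangles) * cost_per_step

def crossover(pixels, start=2):
    """Smallest power-of-two triangle count where ray tracing becomes cheaper."""
    n = start
    while raytrace_cost(n, pixels) >= raster_cost(n):
        n *= 2
    return n

# 1080p frame under the made-up unit costs above.
print(crossover(1920 * 1080))
```

The absolute number that comes out is meaningless because the constants are made up, but the shape is the point: as long as one curve is linear and the other logarithmic in scene size, there is always some scene complexity past which the logarithmic one wins.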

So the summary of that is really that raytracing isn’t specifically for allowing better graphics, but to allow for better gameplay through more objects being in the scene at once at the same or better level of detail that we currently have. Curious.

It’s unclear to me how ‘more objects rendered at once’ and ‘better detail than we currently have’ aren’t ‘better graphics.’

Regardless, the tl;dr I got from that is ‘actual ray tracing scales better than the melange of hacks that are currently used to fake it.’ Also, that right now we’re pretty close to the inflection point, and the continued dominance of light hackery is as much a matter of momentum as anything else.

Exact same visual fidelity, but drawing 10,000 objects instead of 100, is not really “better graphics” first and foremost.

You have a giant misconception that Sony cares about the massive library of PS2 games and bringing them to you. Even as a prime generational target for a PS2 throwback, I don’t really look forward to replaying those games with the graphics of their generation.

FFX is helped immensely by the remaster, and really only the games that are worth remastering are worth putting the money into. They’ll probably do something like the PS1/NES/SNES mini consoles that have the “best” games on them, but I doubt they can really cover even a smidgeon of what was out on the PS2.

I dunno, it just feels like you’re overstating the nostalgia that people will have for the PS2.

Not really. The PS2 has some of the most legendary games in the industry, and even if you are talking graphics, many of these games have very stylized art, which makes them age less than “photorealistic” graphics do.

Sony is currently about where Microsoft was at the beginning of Gen 8, or where Sony themselves were at the beginning of Gen 7 – riding high on a wave of success that went straight to their fool heads. The other party took the drubbing, learned lessons, got humbled, and changed up. Right now Microsoft is the one shotgunning strategies, even some dubious ones like buying up hollowed out mediocrity factories like Ninja Theory, InXile and Obsidian to round out what must otherwise be a very anemic lineup. They’re making gestures that suggest the idea of the walled garden has either run its course or is at serious risk, and they seem to be smart enough to realize that pissing off Valve hard enough to get them off their asses and finally get somewhere with SteamOS and Proton is going to carry a cost they’re probably not prepared to pay. Sony seems to be actively antagonizing devs, especially Japanese devs, with policies that are hand-tailored to get them running somewhere else.

Whenever you look outside you realise that graphics have so much further to go before we get to photorealism.

And it’s still rare to get games with proper crowds outside of Hitman.

I think PS5 games will still be distinguishable from PS4 games.

I really wish they would just add PS2 emulation like some of the early PS3s had. For whatever reason my parents bought me an original Xbox when I was a kid, which was great in its own way with Halo and KOTOR, but I’ve missed out on so many PS2 classics and I’d rather not fuss with buying a PS2 or upgrading my hardware to emulate it. Yeah, yeah, some games like Shadow of the Colossus get amazing remasters, but Silent Hill 2 is still stuck in the past with only a shitty 7th gen remake that ruined it.

*Sony exec meeting*

– Gentlemen, we have a hard choice here: we can give them PS2 retrocompatibility for free… or force them to buy the same game for the third or fourth time on our online store!

– But, sir, aren’t gamers gonna be pissed if we charge them for something that they think should be free?

– Maybe, but are they gonna be pissed enough to buy an XBOX HideossurnamemygodMSisfuckingaround ?

*checks sales numbers for PS4 vs XBOX ONEBUTNOTHEFIRSTONEITSANOTHERONE*

– Ok, charge them at will!

In the article you’re dismissive of raytracing as the latest “5% more photorealistic” technology, but even if it is that, it’s still a big deal. Yes the industry spent a long time building up tools that could smoke and mirrors their way to 95% as good as raytracing, but all those tools take a lot of work to use. One of the big advantages of raytracing is that it’s a turnkey solution: no more 12 hour compiles every time you move one element of the scene and mess with the prebaked lighting. I’m sure we’ll find some new and interesting problems once we start seriously using raytracing, but on balance it promises to be a lot less fiddly than the existing methods for making nice lighting, and while that’s not directly visible to the consumer, it frees up developer resources to work on other things.

Regarding game streaming, I have to say that US people have a very weird idea of what internet connectivity outside of the US looks like.

Sure, there are some northern European and smaller Asian countries which pump up the averages, and even in a shitty country like mine (Croatia) you can get optical fiber if you’re lucky enough to live in a few very specific locations, but I’d say that a lot of people in the EU still only have access to 20-40 megabit connections, and that’s not even opening the topic of gigantic markets like India, which have internet connections that require separate Lite versions of stuff like Facebook and Messenger.

What Google could be aiming for with Stadia is getting desktop titles on mobile – they do make Android and have some stake in this, 4G connections are faster than wired connections in a lot of places, and you’re not really going to notice your game dropping from 1080p to 480p on your 6 inch phone screen (RIP Galaxy Fold) as much as you would on your 65 inch TV (on which you should be aiming for 4K anyway, and, well, good luck with that).
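The screen-size point can be checked with a bit of arithmetic. The Python sketch below computes pixel density (pixels per inch) from a resolution and a diagonal size. The 854x480 figure for "480p" and the 16:9 shape of both screens are assumptions made for illustration, and the calculation ignores viewing distance, which matters a lot in practice.

```python
import math

def pixels_per_inch(width_px, height_px, diagonal_inches):
    # Pixel density along the diagonal: diagonal length in pixels / inches.
    return math.hypot(width_px, height_px) / diagonal_inches

# Assumed resolutions: 854x480 for "480p", 1920x1080 for 1080p.
phone_480p = pixels_per_inch(854, 480, 6)
tv_1080p = pixels_per_inch(1920, 1080, 65)

if __name__ == "__main__":
    print(f"480p on a 6 inch phone: {phone_480p:.0f} ppi")
    print(f"1080p on a 65 inch TV:  {tv_1080p:.0f} ppi")
```

Under these assumptions the phone at 480p still packs several times more pixels per inch than the TV does at 1080p, which fits the idea that a resolution drop is easier to hide on a small screen.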

What others are aiming for – no idea, but I’d say that thinking that streamed gaming could become a mainstream thing before 2025 (at best) is a bit naive.