Let’s talk about OpenGL. No wait. Don’t hit the back button yet! Let’s talk about OpenGL in a way that non-coders can hopefully follow. I’ll even sprinkle some screenshots of my most recent OpenGL project through the article to break up the scary walls of text. It’ll be easy and maybe even fun[1].

OpenGL stands for Open Graphics Library. It was originally devised in 1991. When it comes to talking to your graphics card, OpenGL is one of only two ways to get the job done. If you want to render some polygons, you have to use either DirectX or OpenGL[2]. Everything else – Unreal Engine, Unity, or any other game engine – has to go through either DirectX or OpenGL if it wants to make some graphics[3].

OpenGL pre-dates graphics cards. At least, it pre-dates cards as we understand them today. There were industrial-grade cards in 1991, used by render farms and CAD stations. I don’t know enough about that hardware to know how OpenGL worked at the time, so let’s just ignore all of that stuff while I make broad, hand-wavy gestures. The point is that the world of consumer-grade graphics acceleration sort of grew up around OpenGL, and over time it adapted to give us programmers a way to talk to all that fancy graphics hardware. OpenGL also pre-dates the widespread adoption of C++ as a programming language. Also, it was devised long before multi-threading was part of normal development. This means that OpenGL has a lot of things about it that just don’t make a lot of sense to game developers in 2015. It’s a system that uses crusty old C code, it doesn’t play well with multi-threaded approaches, and it’s filled with legacy systems that nobody is supposed to use anymore.

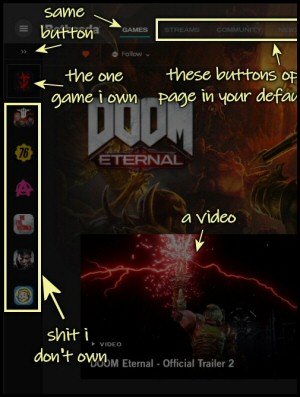

This is my game “World of Stone Pillars in a Blue Void With No GamePlay”. Coming soon to Kickstarter!

The full story of the rise and fall of OpenGL is much too long to recount here. This is the fullest version of events I’ve been able to find, tucked into the middle of a very long thread on StackExchange. Imagine a world where the only coherent account of the rise of the Ford Motor Company was from a single user in an r/automobiles subreddit. That’s what this is like. I’m not even going to try to outline it here. It’s a mad story that involves several hardware companies, Microsoft, a committee, and a lot of bad decisions on the part of everyone. The most important player in that drama was the committee: the Architecture Review Board, or ARB.

The point is: OpenGL has changed quite a bit over the years. As graphics technology changed, the ARB would bolt some new functionality onto the Frankenstein monster of OpenGL and it would shamble onward. That’s fine, inasmuch as adding functionality is what they’re supposed to be doing. But now, 23 years after the release of OpenGL, things are a mess. There are now five ways to do everything, and the four most obvious and straightforward ways are bad and wrong and slow and people will yell at you if they catch you doing them.

This is a shame, because like most programmers I’m really attracted to “obvious and straightforward” solutions. In the old days, you could sit down and write a dozen or so lines of code that would put some polygons up on the screen.

The old way:

- Set up your OpenGL context so you can draw stuff.

- Position the camera.

- Specify a few vertices to make a triangle.

- If you’re feeling creative, you could maybe color them or put a texture map in there, but whatever.
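In the old days, “position the camera” meant a call like gluPerspective, and the library quietly built the projection matrix for you. Under the new way, that matrix is your problem: you compute it yourself and hand it to your vertex shader. Here’s a minimal sketch of that math, written in Python only so it runs without any graphics setup. It uses the formula documented for gluPerspective; the function names are made up for illustration.

```python
import math

# Illustrative sketch: hypothetical helper names, no OpenGL calls involved.


def perspective(fovy_deg, aspect, near, far):
    """4x4 projection matrix (row-major nested lists) built from the same
    formula the legacy gluPerspective call documents."""
    f = 1.0 / math.tan(math.radians(fovy_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def project(matrix, point):
    """Transform an eye-space (x, y, z) point, then divide by w.
    Anything landing inside [-1, 1] on all three axes is on screen."""
    v = (point[0], point[1], point[2], 1.0)
    x, y, z, w = (sum(row[i] * v[i] for i in range(4)) for row in matrix)
    return (x / w, y / w, z / w)


# A 90-degree camera looking down -z, drawing everything from 1 to 100 units away.
proj = perspective(90.0, 1.0, 1.0, 100.0)
print(project(proj, (0.0, 0.0, -1.0)))    # near plane: z comes out at about -1
print(project(proj, (0.0, 0.0, -100.0)))  # far plane: z comes out at about +1
```

One thing to watch if you port this: desktop OpenGL’s glUniformMatrix4fv expects column-major data unless you pass GL_TRUE for its transpose argument, while this sketch stores rows.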

The new way:

- Set up your OpenGL context so you can draw stuff.

- You’ll need a vertex shader. That’s another whole program written in GLSL, which looks a bit like C++ but is actually its own language.

- You’ll also need a fragment shader. Yes, another program.

- You’ll need to compile both shaders. That is, your program runs some code to turn some other code into a program. It’s very meta.

- You’ll need to build an interface so your game can talk to the shaders you just wrote.

- You’ll need to gather up your vertices and pack them into a vertex buffer, along with any data they might need.

- Explain the format of your vertex data to OpenGL and store that data on the GPU[4].

- Position the camera.

- Draw those triangles you put together a couple of steps ago.

- If you’re feeling creative, you can re-write your shaders and their interface to support some color or texture. Hope you planned ahead!
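Stripped of the API calls, “pack them into a vertex buffer” and “explain the format” come down to laying out raw bytes and then describing where each attribute sits inside them. A rough Python sketch, assuming an interleaved layout of position plus color, three little-endian 32-bit floats each; the names are hypothetical and nothing here talks to a GPU.

```python
import struct

# Illustrative sketch: no OpenGL calls are made. Layout assumption: each vertex
# is position (3 floats) followed by color (3 floats), little-endian 32-bit.
VERTEX_FORMAT = "<3f3f"
STRIDE = struct.calcsize(VERTEX_FORMAT)  # bytes from the start of one vertex to the next
POSITION_OFFSET = 0                      # position is the first thing in each vertex
COLOR_OFFSET = struct.calcsize("<3f")    # color starts right after the 3 position floats

triangle = [
    ((-0.5, -0.5, 0.0), (1.0, 0.0, 0.0)),  # bottom left, red
    ((0.5, -0.5, 0.0), (0.0, 1.0, 0.0)),   # bottom right, green
    ((0.0, 0.5, 0.0), (0.0, 0.0, 1.0)),    # top, blue
]


def pack_vertices(vertices):
    """Flatten (position, color) pairs into one contiguous block of bytes."""
    buf = bytearray()
    for position, color in vertices:
        buf += struct.pack(VERTEX_FORMAT, *position, *color)
    return bytes(buf)


data = pack_vertices(triangle)
print(STRIDE, COLOR_OFFSET, len(data))  # 24 12 72
```

The byte blob is the sort of thing glBufferData copies into GPU memory, and the stride and offsets are what glVertexAttribPointer needs for each attribute. In C you’d usually get the same numbers from sizeof and offsetof on a vertex struct.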

This list kind of undersells the drastic spike in work required to get something to appear on screen. Every one of these new steps is more difficult in both a conceptual (What is happening here and why do I need to do this?) and a programmatic (How do I do this?) sense. If you have any experience with modern gaming engines you’re probably used to ignoring all of this. You’ve most likely got a game engine between you and the fussy details. These steps are difficult to wrap your head around, but they only need to be done once, and most people never have to do them at all.

Colored lighting! Let’s party like it’s 1999!

Note that I’m not really faulting OpenGL for this rise in complexity. The way things are done today is unavoidably difficult. You’ve got your multi-core computer there running a game, and that computer is talking to another fabulously sophisticated computer (the GPU) with hundreds or thousands of cores. Each computer has its own memory and its own native processor language, and as a programmer it’s your job to get these two systems to talk to each other long enough and well enough to make some polygons happen.

So that’s what this series is going to be about: How OpenGL used to work, how it works now, and what I think we’ve lost in the transition.

Footnotes:

[1] I’ve found that people’s definitions of “fun” are surprisingly flexible!

[2] Well, a third option would be to render WITHOUT using the graphics card. This will – no exaggeration – be thousands of times slower. So it’s been years since the last time I saw a software-rendered game.

[3] Assuming you’re working on a desktop computer. Things are a little different in console world.

[4] The graphics card. But you knew that.

Top 64 Videogames

Lists of 'best games ever' are dumb and annoying. But like a self-loathing hipster I made one anyway.

Philosophy of Moderation

The comments on most sites are a sewer of hate, because we're moderating with the wrong goals in mind.

Bethesda’s Launcher is Everything You Expect

From the company that brought us Fallout 76 comes a storefront / Steam competitor. It's a work of perfect awfulness. This is a monument to un-usability and anti-features.

Control

A wild game filled with wild ideas that features fun puzzles and mind-blowing environments. It has a great atmosphere, and one REALLY annoying flaw with its gameplay.

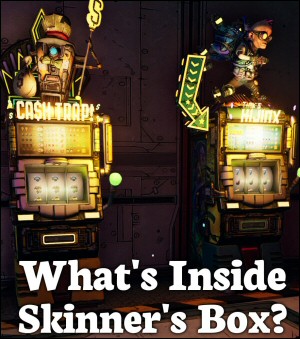

TitleWhat’s Inside Skinner’s Box?

What is a skinner box, how does it interact with neurotransmitters, and what does it have to do with shooting people in the face for rare loot?

Twenty Sided


Ah, one of your programming posts. A great way to start the day!

(Oh, and since we’re partying like it’s 1999…

*Ahem* “First!”)

I always find these sorts of posts and series fascinating (and I’ve been lurking here for YEARS). I know nothing about computer graphics but I’m a physics grad student and so I “program” in C++ every day (imagine a monkey banging rocks on a keyboard, that’s about my knowledge level). Anyway, I’m always glued to these posts because they help me to get a handle on all the under-the-hood stuff that goes on and I must understand ALL OF THE THINGS in life. So please keep them coming!

You say you don’t know graphics but know physics. Well, my friend, graphics and physics go hand in hand. If ya ever took a shot at the graphics stuff, especially pixel shaders, you would feel right at home. The bulk of my notes for graphics programming are physics equations broken down into components that can be executed in parts (for example, components that are the same across all points on the surface go on the CPU, and components that change go in the vertex or pixel shader, depending on how they change).
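As a concrete (and simplified) instance of that split, here is Lambertian diffuse lighting, which is a swapped-in example rather than anything from the commenter’s notes. The light direction is the same for every point on the surface, so it is normalized once on the CPU; the dot product with the surface normal varies from point to point, so that part is what a vertex or pixel shader would evaluate. Written as plain Python for readability; the names are hypothetical.

```python
import math

# Illustrative sketch of splitting a lighting equation by how often its terms change.


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Constant across the whole surface: worked out once on the CPU
# and handed to the shader as a uniform.
LIGHT_DIR = normalize((1.0, 1.0, 0.0))  # unit vector pointing toward the light
LIGHT_COLOR = (1.0, 0.9, 0.8)


def shade_point(surface_normal, albedo):
    """Per-point part: Lambert's cosine law, clamped so back-facing points get no light.
    In a real renderer this is the bit that runs in the vertex or pixel shader."""
    n = normalize(surface_normal)
    intensity = max(dot(n, LIGHT_DIR), 0.0)
    return tuple(a * c * intensity for a, c in zip(albedo, LIGHT_COLOR))


print(shade_point((0.0, 1.0, 0.0), (1.0, 1.0, 1.0)))   # lit at 45 degrees: about 0.707 of full
print(shade_point((0.0, -1.0, 0.0), (1.0, 1.0, 1.0)))  # facing away: black
```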

The four most obvious and straightforward ways are also the best documented, and all of that documentation says that it is the canonical answer and that any previous methods are now obsolete.

This means that when you just want to push some polys onto the screen, you have to very carefully vet your sources for when they were published/posted.

This is why I haven’t written an engine. But I still want to. I want to make all these little low-level things work and then abstract them far far away in the bowels of an engine.

For extra funsies, my current (home) coding machine is a chromebook with an NVIDIA-built system-on-a-chip that combines an ARM processor (what you see in phones and other lightweight computing devices) with a Kepler-based graphics card that uses the standard OpenGL interface instead of OpenGL ES (although that is also supported because it’s basically a subset of OpenGL but still core pipeline [citation needed]).

On the upside, it looks like most current hardware will eventually get drivers supporting Vulkan, and that seems like way less of a mess.

It still has the problem of putting a lot of steps between A and B, but at least it was designed that way from the beginning to reduce the abstraction distance from the hardware.

Fortunately, OpenGL v3 has a “KILL THE LEGACY” switch you can turn on which ensures it will immediately explode on you if you try to do any legacy stuff.

OpenGL ES v2.0, 3.0 and 3.1 don’t even have the legacy pipeline, so those tutorials are safer. OGLES v3.0 is a pure subset of OpenGL v4.x.

Unfortunately, none of the well-known cross-platform OpenGL wrapper libraries actually do everything that even OpenGL ES v2.0 does, let alone desktop OpenGL 4.x.

So the moment you try to do anything ‘fun’, you’re back to raw glXXXX() calls.

I like that switch. That’s my new second favourite switch. (My all-time number one favourite switch is typically known as “Battle Short,” and I think every device ever manufactured should have one.)

I have seen a literal switch with that position. You needed to open the panel to access it and it removed all automatic safeguards from a sensitive component of a warship.

No one has ever been authorized to touch that switch except for maintenance.

The problem with that switch is that it only kills 3/5 of the ways to do things (i.e. it leaves at least one wrong way).

Oh, and the right way isn’t a part of OpenGL 4.5.

And the right way involves ignoring all these things OpenGL gives you like multiple texture objects, and instead you should make a massive TEXTURE_2D_ARRAY and stick all of your textures in that, because switching textures is horribly slow.
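The idea behind that advice, in miniature: every time consecutive draws use a different texture you pay for a bind, whereas a single GL_TEXTURE_2D_ARRAY is bound once and each draw just says which layer it wants. This rough Python model only counts binds (it doesn’t measure real cost), and the texture and mesh names are made up. Every layer of a 2D array texture has to share the same width and height, which the sketch checks.

```python
# Illustrative model only: counts texture binds, makes no OpenGL calls.


def count_binds(draws):
    """Count texture switches when drawing (texture_name, mesh) pairs in order."""
    binds, current = 0, None
    for texture, _mesh in draws:
        if texture != current:
            binds += 1
            current = texture
    return binds


def assign_layers(texture_sizes):
    """Give each texture a layer index in one array texture.
    texture_sizes maps name -> (width, height); all layers must match."""
    if len(set(texture_sizes.values())) > 1:
        raise ValueError("every layer of a 2D array texture must have the same size")
    return {name: layer for layer, name in enumerate(sorted(texture_sizes))}


draws = [("stone", "pillar"), ("grass", "ground"), ("stone", "wall"), ("sky", "dome")]
sizes = {"stone": (256, 256), "grass": (256, 256), "sky": (256, 256)}

print(count_binds(draws))  # 4 binds with separate textures
layers = assign_layers(sizes)
# With the array: one bind up front, and each draw carries a layer index instead.
print([(layers[tex], mesh) for tex, mesh in draws])
```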

I’m starting to remember why I never took a game programming job after getting my graphics CS degree. Turns out being a corporate drone and talking to databases all day is better for one’s sanity.

Are you going to mention how nobody actually uses raw OpenGL, and that even if you’re not using a graphics engine you’ve pretty much got to use some third-party libraries to do things like set up contexts and define the OpenGL function calls correctly? And that there’s no standard library for this, so newcomers are stuck trying to piece together code from the 10 different tutorials that each do a part of what they need, but each use a different one of these libraries?

OpenGL is also an excellent example of where exceptions would come in really handy. When OpenGL fails, you get very little meaningful feedback. Sometimes you wind up having to check for an error after every OpenGL call to debug the code, which is a mess. And then the error code you get tells you almost nothing.
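A common workaround for that lack of feedback is to wrap calls so that glGetError is drained after each one and turned into an exception. The sketch below shows the shape of that in Python. `get_error` is injected rather than being the real glGetError, so it runs without a GL context, and the `checked`/`GLError` names are hypothetical; the numeric codes are the standard OpenGL error enums. It loops because OpenGL can have more than one error flag set at once, and glGetError reports and clears only one per call.

```python
# Illustrative sketch: hypothetical wrapper; the real glGetError is replaced by an injected function.
GL_NO_ERROR = 0
GL_ERROR_NAMES = {
    0x0500: "GL_INVALID_ENUM",
    0x0501: "GL_INVALID_VALUE",
    0x0502: "GL_INVALID_OPERATION",
    0x0503: "GL_STACK_OVERFLOW",
    0x0504: "GL_STACK_UNDERFLOW",
    0x0505: "GL_OUT_OF_MEMORY",
    0x0506: "GL_INVALID_FRAMEBUFFER_OPERATION",
}


class GLError(Exception):
    """Raised when a wrapped call leaves OpenGL error flags set."""


def checked(gl_call, get_error, max_errors=16):
    def wrapper(*args):
        result = gl_call(*args)
        errors = []
        for _ in range(max_errors):  # bounded, in case get_error never reports "no error"
            code = get_error()
            if code == GL_NO_ERROR:
                break
            errors.append(GL_ERROR_NAMES.get(code, hex(code)))
        if errors:
            raise GLError(f"{gl_call.__name__}{args}: {', '.join(errors)}")
        return result
    return wrapper


# Stand-ins for a real binding, so the example runs anywhere.
pending = [0x0501]  # pretend the driver flagged GL_INVALID_VALUE


def fake_get_error():
    return pending.pop(0) if pending else GL_NO_ERROR


def glFakeBufferData(size):
    return None


buffer_data = checked(glFakeBufferData, fake_get_error)
try:
    buffer_data(-1)
except GLError as err:
    print(err)  # glFakeBufferData(-1,): GL_INVALID_VALUE
```

If your context supports it, glDebugMessageCallback (core since OpenGL 4.3) can give far more descriptive messages than these bare codes.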

Oh, and there’s the fun of GLSL having multiple, mutually incompatible versions.

*raises hand* I use native OpenGL. All I had to do was write a bunch of vector and matrix functions, my own model loader, code to work out normals, code for generating meshes of arbitrary size, my own texture loader, code for handling uniforms, and figure out how to configure framebuffers, and then I was all set to display my first triangle!

That’s not even what I’m talking about. I’m talking about stuff like GLEW that hides the even more arcane stuff needed just to make OpenGL calls properly.

http://glew.sourceforge.net/

Ah, but good luck finding out which of the two dozen libraries a) has actually been updated since 2004, b) doesn’t take over your entire engine and c) doesn’t secretly miss some vital feature that will cripple your attempt to use it and make you start over.

GLEW, for example, doesn’t do OpenGL ES bindings. Which is a damn shame, because GLEW is usually a neat library to relatively cleanly and portably handle all those messy extensions. But then you want to extend your code to also work on mobile devices, and suddenly you’re back having to navigate around those yourself again.

Ah, good point. I’m only doing it as a hobby, using Cocoa/OSX, which will give you a context and sync your code to display refresh pretty easily if you ask nicely.

I do think, though, that it’s easier to end up bouncing off basic graphics programming if the novice makes the mistake of thinking of it as “3d graphics engine” rather than “method of transforming triangles, optionally in order of depth” (as I did).

The other side of the coin is that if you look at, say, the Unity forums, people often seem to react to somebody implementing, say, normal mapping as if it’s the second coming, because they’ve never had to really think about how their software actually works.
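For anyone wondering what normal mapping boils down to: each texel stores a direction squeezed into color values, and the shader unpacks it and rotates it into the surface’s frame before lighting. A minimal sketch, assuming the common convention that each component was stored as n * 0.5 + 0.5 in an 8-bit texture, and that the tangent, bitangent and normal (the “TBN” basis) are already unit length and perpendicular. The function names are hypothetical.

```python
import math

# Illustrative sketch of the normal-map decode step; not any particular engine's code.


def decode_texel(r, g, b):
    """Map 8-bit color values (0..255) back to a unit tangent-space normal."""
    n = [c / 255.0 * 2.0 - 1.0 for c in (r, g, b)]
    length = math.sqrt(sum(c * c for c in n))
    return [c / length for c in n]


def to_world(n_tangent, tangent, bitangent, normal):
    """Rotate a tangent-space normal into world space using the TBN basis."""
    x, y, z = n_tangent
    return [x * t + y * bt + z * nn for t, bt, nn in zip(tangent, bitangent, normal)]


# A floor whose surface normal points up (+y).
T, B, N = (1.0, 0.0, 0.0), (0.0, 0.0, -1.0), (0.0, 1.0, 0.0)

flat = to_world(decode_texel(128, 128, 255), T, B, N)    # "no bump" texel: almost exactly N
tilted = to_world(decode_texel(218, 128, 218), T, B, N)  # leans toward +x
print(flat, tilted)
```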

The third side of the coin (which is a Triganic Pu in this analogy) is that knowing about the guts of the graphics API makes reading gaming forums tooth-grindingly irritating whenever some blowhard talks about a game being “poorly optimised” :-E

Unrelated to the post, I really like the Cities: Skylines background image! Great choice. :)

I think he swapped out the pony one too soon. :(

I’m not kidding when I say that I’ve just spent the last 2 hours before you posted this trying to work with the black box that is OpenGL.

I took object loading code and object rendering code from another project and was building a rendering engine. I’ve got my context set up correctly (that part really is just a plug-in object now that holds all the messy stuff like compiling shaders). But when it comes to rendering, all I get is a black screen. Since you can’t put a breakpoint in OpenGL, you have no idea what you’ve forgotten to do.

Have I not loaded my vertices into the buffer correctly? Is my shader not quite right, maths wise? Is my camera facing the wrong way in this near empty scene? Is one of the values in one of the matrices wrong? It’s most likely a copy-paste error with reusing code from an old project, but finding where the mistake is will take me hours.

But OpenGL just prints out a blank black screen, and it sucks trying to find where things have gone wrong. OpenGL seems all too quiet about taking gibberish values; some warning messages would be really useful in determining what has gone wrong.

See my above post. You have to manually check for errors in OpenGL.

And I do, but it tends not to complain much as long as the values are valid. Most problems I run into are mainly because of values that don’t make sense, but are valid for rendering. That’s the point where you’ve made a mistake “somewhere” but the blank feedback tells you nothing.

The first step in debugging OpenGL is to clear the main framebuffer to a different colour, as in glClearColor(); glClear(). After that, you get to be really good at interpreting arbitrary messes of colour that you throw up all over your viewport as a way of expressing some part of your program’s internal state :)
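In the same spirit, another cheap check for the black-screen problem is to push a few vertices through your projection matrix on the CPU and see where they land. After projection, OpenGL only keeps a vertex if its clip coordinates satisfy -w <= x, y, z <= w; with a standard perspective matrix, a negative w means the vertex ended up behind the camera. A rough Python sketch; the matrix is a hand-written perspective projection (90-degree field of view, aspect 1, near 1, far 100) and the helper names are hypothetical.

```python
# Illustrative sketch: CPU-side sanity check, no OpenGL calls made.


def to_clip(matrix, vertex):
    """Multiply a 4x4 row-major matrix by (x, y, z, 1)."""
    v = (*vertex, 1.0)
    return tuple(sum(row[i] * v[i] for i in range(4)) for row in matrix)


def inside_clip_volume(clip):
    """True if the vertex survives clipping: -w <= x, y, z <= w, with w > 0."""
    x, y, z, w = clip
    return w > 0 and all(-w <= c <= w for c in (x, y, z))


# Perspective projection: 90-degree FOV, aspect 1, near 1, far 100, camera looking down -z.
PROJ = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, -101.0 / 99.0, -200.0 / 99.0],
    [0.0, 0.0, -1.0, 0.0],
]

for vertex in [(0.0, 0.0, -5.0), (0.0, 0.0, 5.0), (0.0, 0.0, -500.0)]:
    clip = to_clip(PROJ, vertex)
    print(vertex, "visible" if inside_clip_volume(clip) else "clipped", "w =", clip[3])
```

The second vertex is behind the camera and the third is past the far plane, which are two of the usual “camera facing the wrong way” and “one of the matrix values is wrong” suspects.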

Clicking links inside tooltips doesn’t seem to work, since the tooltip closes when I mousedown, trying to click a link just makes the tooltip go away. (I’m seeing this behavior in Chrome, at least)

I am seeing the same thing in Chrome. Swapped over to IE and same behaviour. Clicking on the link closes the tooltip before launching the new page.

Also … YAYYYYYY!!! more coding posts!

I’ve uploaded a “fix”. We’ll see how it works.

Works in FireFox.

Works for me, now. Thanks!

@Shamus: This isn’t as useful to you now that you have a working fix, but this “bug” had already prompted me to rewrite the footnote JavaScript with jQuery with the goal of making it more robust.

The result is at http://pastebin.com/XyZLWgSK – you may do with it as you wish.

“Let's talk about OpenGL. No wait. Don't hit the back button yet!”

I wonder: is this actually a thing? I mean, I assume that most of the pageviews these days come from Spoiler Warning and its fans, but is there really a much lower page view count on programming posts? I mean, I like SW and such too, sure, but I’m a non-coder here since way before SW ever started (fuck I’m getting old) and I love your programming posts! They’re probably my favorite category of posts after the game deconstruction rants :)!

Previously Shamus has said it’s actually the opposite, I believe. The video content gets the lowest hits, while his long text posts on things like programming minutia get the most hits.

I’m thinking that first sentence is mostly just self-deprecating humor; not really an expectation that a significant number of blog followers are going to be scared off.

Correct on all counts.

Here’s another account of the history of consumer 3D, by the guy who invented DirectX:

http://www.alexstjohn.com/WP/2013/07/22/the-evolution-of-direct3d/

The interesting thing is that, by his account, consumer-PC-level HW acceleration and OGL might not have existed at all (or would have been heavily delayed) if it hadn’t been for DX. Way back then, OGL vs DX was literally nothing more than an MS-internal politics spat.

The guy has a bunch of stories from his MS days, which paint a really interesting picture of how MS works internally and just how crazy things were back then.

His story with Talisman is almost ridiculous, could very well be a movie.

Oh man, this guy. One of the best DMs I’ve ever had. Didn’t know he had a blog of this caliber, though.

Alex St. John’s post is kind of a bizarro world. There are many errors in the article. Some of his criticisms for OpenGL apply even more strongly to Direct3D (capability bits? Direct3D was full of capability bits).

Direct3D won in the Windows world because it was much simpler to develop device drivers for and Microsoft’s support for OpenGL was rather screwed up. I haven’t paid close attention in years, but in the 90s they went through 3 different driver models for OpenGL and ended up deprecating or retracting two of them (3D-DDI and MCD), leaving them with the original model (ICD) which really was too difficult for most chip manufacturers (essentially, each OpenGL call was simply handed down to the driver and the chip manufacturer had to implement all of OpenGL themselves).

left-handed coordinate system? Is that guy mad?

No one should be able to make it through high school without knowing that this way lies chaos. The entire body of vector mathematics uses right-handed systems, as do physics, engineering etc…

This means that if you’re using DirectX, you cannot just look up rotation matrices or vector products in any of the standard math books, instead you either need to work it out from scratch yourself or confine yourself to that comparably tiny world of DirectX.

Madness, I say!
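To make the handedness complaint concrete: the cross-product formula is identical in both conventions, but the same numbers describe a mirrored scene, since a left-handed system is a right-handed one with one axis flipped. A minimal Python sketch, assuming the usual conversion of negating z (which axis you flip is a convention choice). It shows that after mirroring, the cross product of the converted edges points the opposite way from the converted normal, which is why triangle winding has to be reversed as well.

```python
# Illustrative sketch; assumption: conversion between conventions is done by negating z.


def cross(a, b):
    """Standard cross product; the formula itself doesn't care about handedness."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def flip_z(v):
    """Convert a point or direction between right- and left-handed coordinates by mirroring z."""
    return (v[0], v[1], -v[2])


edge1, edge2 = (1.0, 0.0, 1.0), (0.0, 1.0, 1.0)
normal = cross(edge1, edge2)                    # face normal in the original system
mirrored = cross(flip_z(edge1), flip_z(edge2))  # recomputed from the converted edges
print(flip_z(normal), mirrored)  # same line, opposite directions

# Reversing the winding (swapping the edge order) brings the two back into agreement.
print(cross(flip_z(edge2), flip_z(edge1)) == flip_z(normal))
```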

Or you use right hand with DX. It’s been an option since…

Oh, I don’t know.

Over a decade? More?

Unity also uses a left-handed coordinate system, which makes it exceedingly troublesome to pass matrices to other programs, for example.

Great article. It’s strange how weirdly reluctant video game culture (as with many other ‘nerd’ cultures) is to document its own history.

Also, the link to the Outcast article in the second footnote doesn’t seem to work. When I click it just closes the footnote. Maybe it’s just a Chrome thing.

It also appears to be a Firefox thing. Click, alt-click, center-click… all just close the note.

I’m using FireFox and the same is happening to me.

It’s interesting how widely used OpenGL still is. If you’re a PC gamer it’s like “man, who even uses that anymore? It’s all DirectX!”

Except for… *deep breath* all Adobe applications (Photoshop, Premiere, After Effects etc.) most CAD programs, all Playstation platforms (PS3, PS4, Vita…), all Nintendo platforms (Wii, Wii U, DS, 3DS…), Mac, Linux, iOS, Android… okay, those are all I can think of. Right now.

Meanwhile I look at stuff that uses DX and isn’t written in C# and think “Why would you do that? It’s slower and less portable.”

It’s the market, basically.

If you use DirectX then you can target Windows and the XBox.

If you use OpenGL (ES) then you can target Windows, OSX, Linux, Android, iOS, embedded ARM…

– in theory the XBox as well via ANGLE.

So if you ever intend to run your program on anything other than Windows & XBox, you use OpenGL.

However, as Shamus will no doubt explain, it’s not that simple in either library as you have to be very careful about the features used. Not all hardware does everything.

Sometimes the fallback is “run slow”, sometimes it’s “crash” and most commonly it’s “behave weirdly”.

Also, you have to consider that Windows IS a large share of the computer market (especially for gaming, though that may be slowly changing), so targeting it alone may not be so bad of a decision, especially if you only plan to target one platform.

Sure, but even then if you plan on making use of the newest features, you’ll usually end up only supporting the newest version of Windows.

…Web browsers…

That’s mostly congruent with the target audiences. OpenGL was made for and used by professional applications way before DirectX was a thing, and worked across platforms (Because serious people were using Unix). And DirectX was made for games on Windows, and for some reason MS hasn’t seen fit to port it to anything else…

DX is for crazy effects and stuff, while OpenGL’s origin is mostly just in getting loads of polygons on your screen, where the number of polygons is not determined by what your graphics card can render in 1/30th of a second but by whatever the user is constructing.

Those are quite different scenarios, and although the use cases have started overlapping quite a bit, it makes sense that some of those differences are still visible.

The ins and outs of doing even basic graphics programming still makes me hate it. Every time I think of getting back into writing a game, something related to low level graphics burns me. I suppose I should just switch to using an engine, but some of the things I want to do won’t be supported there. There’s no substitute for getting down and dirty. But did it have to be SO dirty!!

What do you want to do that an engine won’t allow you to? The modern engines (like Unity) are pretty versatile if you don’t need to be doing things like manually handling vertices. And even if you do want to do that, I think it’s possible, just a bit cumbersome.

To do cube world things efficiently, you need a lot of low level optimizations. To do the huge distances I wanted, I have to play games with the z buffer. I don’t think I can get to that level of performance with an engine.
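The comment doesn’t say which depth-buffer tricks are involved, so this only shows the underlying problem: with a standard perspective projection, depth precision is concentrated near the near plane and thins out roughly with the square of the distance. A rough Python sketch, assuming a 24-bit depth buffer and OpenGL’s default 0..1 depth range; the function names are hypothetical.

```python
# Illustrative sketch: standard OpenGL-style perspective projection, default
# 0..1 depth range, and an assumed 24-bit depth buffer. Names are hypothetical.


def window_depth(distance, near, far):
    """Depth-buffer value in [0, 1] for a point `distance` units in front of the camera."""
    ndc_z = (far + near) / (far - near) - 2.0 * far * near / ((far - near) * distance)
    return (ndc_z + 1.0) / 2.0


def world_units_per_step(distance, near, far, bits=24):
    """Roughly how far apart two surfaces at `distance` must be before the depth
    buffer can tell which one is in front (one quantization step, in world units)."""
    step = 1.0 / (2 ** bits - 1)
    slope = far * near / ((far - near) * distance ** 2)  # d(window depth) / d(distance)
    return step / slope


for d in (1.0, 100.0, 1000.0, 5000.0):
    print(d, world_units_per_step(d, near=0.1, far=10000.0))
```

With these numbers, surfaces 5,000 units out need to be about 15 units apart to sort reliably, and moving the near plane from 0.1 to 1.0 improves that about tenfold. That is why huge view distances tend to need tricks such as pushing the near plane out, reversed-Z, or splitting the scene into several depth ranges; which of these the commenter had in mind is unknown.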

Is this of any interest to you?

https://twitter.com/nothings/status/583169299470159872

Hey Shamus. Love your approachable coding posts. It allows me to pretend I know what I’m talking about when talking about games to other people!

On another note, though, there’s a weird bug with your image annotations. Apostrophes are substituted by “&-#-8-2-1-7-;” (ignore the hyphens) on a Mac’s Safari instead of being substituted like normal. Nothing major, it is still perfectly readable, but, you know… it is certainly something that shouldn’t happen. Also, this only happens on the floating annotation (is that the right term? The annotation that shows up if you linger the pointer on top of the image, not the one you sometimes attach to a box under the image).

Cheers!

I get that same apostrophe replacement in the alt-text/title-text on a Windows PC in Chrome.

Checking the source code, it looks like an overly-clever encoder (probably in the guts of WordPress somewhere) is turning the &’s in the HTML unicode entities into & amp; (extraneous space inserted to prevent this turning into yet another ampersand) entities themselves, thus messing up the intended character.

OK, I got myself confused by comparing “View Source” against the chrome dev tools.

If you view the source, the ASCII ampersands in the unicode entity escape codes have definitely been replaced by the corresponding unicode entity & amp; (extra space added to defeat whichever bit of WordPress is automatically doing that replacement). This is what’s messing up the alt-text.
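The mechanism described above is easy to reproduce with Python’s standard html module: escaping text that already contains an entity turns its & into &amp;, so the browser’s single decoding pass leaves a literal &#8217; on screen. This only illustrates double-encoding in general; it isn’t a claim about which part of WordPress does it.

```python
import html

# Illustrative sketch of double-encoding; not WordPress's actual code path.
typed = "Shamus\u2019s caption"    # curly apostrophe (U+2019), as typed
stored = "Shamus&#8217;s caption"  # the same text as a numeric HTML entity
double = html.escape(stored)       # a second, over-eager encoding pass hits the "&"

print(double)                          # Shamus&amp;#8217;s caption
print(html.unescape(double))           # Shamus&#8217;s caption  (what the tooltip displayed)
print(html.unescape(stored) == typed)  # True: decoded once, it's the right character
```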

Oh my, I am going to enjoy this.

I have tried to do OpenGL recently, but when I reached the “before we can start, write your own shader” step, I thought “Heh, no” and gave up.

Maybe at the end of this I will at least see some method to the madness.

Yeah, that’s where I gave up too. Well that and time constraints.

It’s all very off-putting to someone new to 3D programming. I got a little further along with a WebGL tutorial… but then it came time to actually do more than draw plain colored triangles. I realized it really wasn’t worth the time or effort to use it for that specific project.

Is Vulkan going to be mentioned in this series? I understand the need for it, but how and why it fixes things (or whether it does or not) is a bit beyond me.

AFAIK Vulkan is basically ideas from Mantle and OpenGL Next.

Vulkan is a new low level API that game engines/rendering engines can build upon.

DirectX 12 is Microsoft’s answer to Mantle (and therefore Vulkan).

OpenGL Next will probably be dropped and focus set on Vulkan instead.

OpenGL 4.x and older will still remain for legacy support and for high level stuff.

DirectX 11 will still remain for legacy support and for high level stuff.

OpenGL will still be updated with new features.

DirectX 11.2 is being released together with DirectX 12, basically bringing feature parity to DX11 vs DX12.

DX12 is a slightly silly choice of name. It should have been called something else, as it is not simply the next version of DirectX the way the name might imply.

In all likelihood there will be a DX 11.3 and DX 11.4 and so on.

If you think of DX 11 and DX 12 as two different generations then that should help understand it more.

So in the future there will be: DX 11 and DX 12 and OpenGL and Vulkan living side by side.

Mantle will probably be phased out in favor of Vulkan.

Also, AMD (and DICE that created Mantle for the Frostbite engine) gave permission for the Khronos Group (the guys behind Vulkan) to use whatever they wanted from Mantle.

Eventually DX 11 and OpenGL will be replaced by DX12 and Vulkan, which means developers will need to use an engine or middleware library.

All the big name engines will support DX12 and Vulkan obviously, as will probably SDL and similar middleware.

The important thing here though is that DX12 and Vulkan allow the dumping of a lot of old legacy cruft, which “should” simplify things.

OpenGL Next is Vulkan. The former was just the working name. Have a look at the Vulkan/glNext talk at GDC: http://www.gdcvault.com/play/1022018/

And yeah, Vulkan is based quite heavily (from the general concepts, at least) on Mantle, since AMD came along and said to Khronos “Here, you can have Mantle, no strings attached”.

Funnily, Microsoft also seems to base DirectX12 on Mantle: https://twitter.com/renderpipeline/status/581086347450007553 , but adding their signature reboots. :P

Vulkan is Mantle, so it’s a spec that doesn’t have 20 years of cruft (yet) because it’s a clean sheet.

The problem is I don’t see nVidia following it so I suspect they will pull out of the OpenGL board and push their own proprietary format.

I’m not sure how that sentence reads so let me be clear, AMD isn’t doing this out of the kindness of their hearts but the shallowness of their wallet. If the spec becomes ..er… an open spec then someone else can throw money into maintaining it.

Hrm, now I’m not sure how THAT line reads so lemme add this too. I love AMD and I think I can see where they are trying to go with fusion and their goofy core vs FPU vs GPU count thingy. I hope they have enough nickels floating around to get their hardware vision out there; it’ll be interesting to see how it works if they can get the software support behind it.

I guess nVidia will try to subvert Vulkan, as using it would give AMD an advantage. However, if (IF!) Vulkan is sufficiently easier to use for developers and gets more performance out of nVidia boards, too, then users will prefer to use Vulkan on nVidia cards, and then nVidia would hurt their ever-so-precious benchmark results by not supporting it, so they would have to keep supporting it. That gives AMD a market advantage, but everyone else would be better off since games run faster and development becomes easier, and AMD would get a deserved bonus for having brought this about.

… if Vulkan is not that much better, well, then nVidia probably has the power to kill it by just not supporting it, thereby forcing developers to either support two frameworks or stay with DirectX. In the professional market, though, OpenGL is the thing. So nVidia (which makes good money on the fact that people still think their Quadro chips are better than the FirePro ones) would shoot themselves in the foot, big time, by not supporting the new official OpenGL iteration.

=> I’d expect them to support Vulkan only in their Quadro drivers and try to subvert it in the consumer market.

I hope that fails.

The first half of your post kinda reminds me of AMD and Intel, and x86-64 and Itanic (…I mean Itanium), actually.

Huh.

History is weird.

Shamus, have you ever spent …oh dear… three hours writing and rewriting a stupid post only to realize that everything could fit into a numbered list?

Yeah…me neither.

Especially not while I’m at work.

1) AMD and nVidia, publish a frick’n hardware spec. I don’t care if they are the same between the two of you as long as the front end is consistent between hardware generations. You both have ARM licenses so you should know how this works, the competitive advantage is in the magical black box fairy land behind the gate and not in the front end.

2) Once step one is done and we can set your GPUs as a target in the (prefix)C(suffix) compilers of our choice, OpenGL can go back to worrying about the embedded environment, where changes happen at a pace they can keep up with, such as continental drift, glaciation or the flipping of Earth’s magnetic poles.

3) ??? for some reason. I don’t remember where it started and I don’t really care.

4) Yes, having multiple code branches is a huge pain in the ass, but I don’t think it’s any worse than what vendors have to do right now to ship cross-platform titles. Oh no! I have five x86 targets (PS4 XBone Intel nVidia AMD) rather than the three we were dealing with before.

5) If you can target the hardware then you don’t have to worry about the OS as much. Yes I know that the interface part is a SNAFU but I’m going to conveniently ignore that. Mac and Linux ports for everyone. Weee.

6) There is no rule six. I know where this one comes from. Yes I stretched things out so I could type this which is why…

7) Yes, having multiple code branches is a huge pain in the ass, but I think it’s a better solution than the chuck-it-over-the-wall system we have now.

I just realized that since AMD GPUs are in the Xbox One and the PS4, Sony and Microsoft could potentially push an update to support DisplayPort Adaptive-Sync.

The same goes for the PS4 and Xbox One getting Vulkan. I previously assumed they would get Mantle, but as the direction now seems to be Vulkan, I’ll assume AMD is going full steam on that.

Oh yeah and SteamOS/SteamBox/Linux will have Vulkan as well.

Surely you can still find indie games doing 2D graphics at a software level? That can’t require all that much power. Hey, I’ve written a bit of a proto-proof-of-concept-2d-game in VB.Net, and I don’t *think* I’m using DirectX (it’s possible the VB.Net graphics routines talk to DirectX in the background without me knowing, but I don’t think so).

I don’t even *have* a graphics card (well, not a real one; just a software version). I can’t run recent AAA games, no, but I can run anything 7ish or more years old, or most 2D/indie stuff. Trine 2 runs fine with all the graphics settings turned down, for example. I had a bit more trouble with Giana Sisters; I don’t think it’s well optimised. It’s *playable*, but a bit jerky.

Is using DirectX / OpenGL to talk to a software-only graphics card likely to be faster than any other method, or is the benefit only realised if you have a real GPU?

Yes, you have a graphics card. It might be embedded (i.e., part of your motherboard), but you do have one. And Windows/VB is talking to it; it just hides it all from you.

2D graphics done purely on the CPU can still get pretty slow. I know that Java’s basic Swing graphics are unsuited to games.

All modern x86/64 CPUs have graphics card hardware built into them, either Intel HD or AMD APU series.

It’s been like that for several years.

They’re pretty powerful these days, though a recent discrete card will of course blow it out of the water.

For example, a current (5th generation) Intel Core i5-5200U has an Intel HD 5500 graphics ‘card’ inside it that has 24 GPU cores (compared to the >100 in a mid-level discrete AMD/nVidia card).

Not all. AMD’s FX line and I think most/some of Intel’s chips (primarily i7s) don’t come with built-in GPUs. These are high-end CPUs, though, so if you’re getting one of those you’re probably getting a discrete card as well. The performance of integrated graphics is actually really good right now. I think about a year ago AMD made a push for 1080p 30fps gaming (low, but not unbearably so) on all of its APUs, with the higher-end ones exceeding that by a good margin.

Yeah, but these don’t try to fake it in software – if you try to plug in a monitor, it just won’t work.

Not like the old days, where motherboards with “integrated graphics” had little more than a framebuffer and all the real work was done in software.

I tried doing software graphics with Java’s AWT for a tactical RPG. It was… adequate on my modern but low-end desktop graphics card (typically over 100 fps, I think), and under 60 fps on my netbook with its low-end graphics card (still playable, though). When I added normal map lighting so I could look forward to using Sprite Lamp, it dropped like a rock. If memory serves, it went down into the 20s on my desktop and to about 4 fps on my laptop. I think a lot of that was because Java doesn’t automatically hardware-accelerate stuff that changes every frame, or some problem like that; I don’t remember exactly.

So, if you’re doing really basic stuff for mid-to-high-end computers, Java can do software rendering for games. If you want to do lighting or anything, you basically have to do what I did: grab an engine or (in my case) LWJGL and start moving your drawing to OpenGL. Even using ancient OpenGL v1 (and maybe 2?) techniques, my FPS shot up. I haven’t tried it on the netbook yet, because its built-in chip is so bad it doesn’t even support fragment shaders; I’m hoping to work out how to use the extension for them, which it may or may not support.
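To show why software normal-map lighting gets expensive, here is a minimal sketch in plain Java of per-pixel lighting done on the CPU. It is not the commenter’s code and not an AWT or LWJGL API; the class and method names are hypothetical. It assumes the common normal-map encoding (each RGB channel maps 0..255 to -1..1), a single non-zero directional light, and a simple Lambert diffuse term. Every pixel needs a normal decode, a square root, and a dot product on every frame, so a 320×240 buffer means 76,800 of those per frame on one CPU thread.

```java
import java.util.Arrays;

// Hypothetical sketch (not the commenter's code): per-pixel normal-map
// lighting done entirely on the CPU, i.e. "software rendering".
public final class SoftwareNormalMapLighting {

    // Lights an ARGB buffer with one directional light (lx, ly, lz), assumed non-zero.
    // Assumes the common normal-map encoding: each RGB channel maps [0, 255] to [-1, 1].
    public static int[] light(int[] albedo, int[] normalMap, float lx, float ly, float lz) {
        // Normalize the light direction once per call, not once per pixel.
        float len = (float) Math.sqrt(lx * lx + ly * ly + lz * lz);
        lx /= len;
        ly /= len;
        lz /= len;

        int[] out = new int[albedo.length];
        for (int i = 0; i < albedo.length; i++) {
            // Decode this pixel's surface normal from the normal map.
            int n = normalMap[i];
            float nx = ((n >> 16) & 0xFF) / 127.5f - 1f;
            float ny = ((n >> 8) & 0xFF) / 127.5f - 1f;
            float nz = (n & 0xFF) / 127.5f - 1f;
            float nLen = (float) Math.sqrt(nx * nx + ny * ny + nz * nz);

            // Lambert diffuse term: cosine of the normal/light angle, clamped at 0.
            float diffuse = (nLen == 0f) ? 0f
                    : Math.max(0f, (nx * lx + ny * ly + nz * lz) / nLen);

            // Scale the color channels by the diffuse term; keep alpha unchanged.
            int c = albedo[i];
            int a = c >>> 24;
            int r = Math.min(255, Math.round(((c >> 16) & 0xFF) * diffuse));
            int g = Math.min(255, Math.round(((c >> 8) & 0xFF) * diffuse));
            int b = Math.min(255, Math.round((c & 0xFF) * diffuse));
            out[i] = (a << 24) | (r << 16) | (g << 8) | b;
        }
        return out;
    }

    public static void main(String[] args) {
        int w = 320, h = 240; // 76,800 pixels of work per frame
        int[] albedo = new int[w * h];
        int[] normals = new int[w * h];
        Arrays.fill(albedo, 0xFFC08040);  // opaque orange texel
        Arrays.fill(normals, 0xFF8080FF); // flat normal facing the viewer
        int[] lit = light(albedo, normals, 0f, 0f, 1f);
        System.out.printf("%d pixels lit, first = %08X%n", lit.length, lit[0]);
    }
}
```

A GPU fragment shader does this same per-pixel math, but across many pixels in parallel on dedicated hardware, which is roughly why moving the drawing to OpenGL can make the frame rate jump.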

Yesssssss! More programming articles! And it’s about OpenGL, which I’ve been sort of trying to use! And this one even discusses the apparent jump in complexity that, along with having a different range of compatible cards, makes me hesitate to learn the New Ways.

I always love your programming posts, Shamus. They’re always informative about things I have no clue about, yet they don’t make me feel stupid. You have a real gift for making these kinds of things interesting. Thank you.

Where/what are the three images in the post from?… Top and bottom are from the same game/program, I assume, but which one?