We’re here to work on shaders, but we can’t very well just start writing code without blathering on for a thousand words about random things. I mean, I can’t. How you program is your business.

Code re-use

I’ve written all this before. In Project Octant I made a block world.

I will say that to a certain extent “code re-use” is overrated. Not to say that it’s not incredibly important. It’s just not the end-all-be-all of software engineering. Sure, it’s nice to be able to make use of code you wrote in the past. But it’s also really nice to be able to write a more perfect version of something. Every time you attack a problem you understand it a little better, and every solution is just a little lighter and cleaner than the last. Yes, you can gradually improve something you’ve already written, but there’s nothing like a re-write to let you really fix structural problems.

I’ve discovered that re-writes aren’t nearly as expensive as they seem. They’re mentally daunting and often boring because it feels like busywork, but if you wrote the previous code and you’ve still got the structure rolling around inside your noggin, then re-writing isn’t that much worse than re-typing.

Of course, all of this is only true to a point. If I was working on some behemoth like the Crysis engine I wouldn’t be able to get away with re-writing. The job is too big, too interconnected, and it’s so complicated I’d never have the whole thing in my head at once anyway.

Still, I have to say that re-writes have a lot of good points. This new version of the cube-based world is shorter, cleaner, and clearer than my last attempt. And I’d be lying if I said that didn’t feel good. I guess re-writes are kind of decadent: The work of someone who is able to polish their code instead of being obliged to ship something useful.

And now…

A Brief History of Shadows

Let’s talk about why I’m doing this.

Back in Ye Olden Thymes, when buxom barmaids served flagons of ale to world-weary pilgrims as they traveled from kingdom to kingdom, we had games with really terrible graphics. I mean, they didn’t even have shadows. It was a stark, ugly existence where everything was out of scale and the pixels were so big you couldn’t tell the giblets from the bullets.


I’ll be using Minecraft here to demonstrate what I’m talking about. Just to be clear, all of this is photoshopped. This is easier than digging up and playing all the old games and finding screenshots to illustrate what I’m talking about[1].

The problem with not having shadows is that everything seems kind of floaty and it’s difficult to judge scale. Eventually we discovered that if you draw a simple black circle under a character it roughly approximates having a shadow.


By putting a shadow directly under his feet we can suddenly see that the little guy is actually slightly off the ground in mid-jump, and not larger and further in the distance, which is what your eye might assume. This trick seemed to work well, even when the light source wasn’t directly overhead. Our eyes are just used to the idea that we’ve always got shadows around the base of things. Tables, chairs, people’s legs: It’s usually darker underneath stuff. I mean, duh.

But the little blob shadow has its drawbacks. It’s kind of cartoony and you’re missing out on some really interesting gameplay. (Shadows falling across doorways in a stealth game is a good example.)

There were a lot of ways to half-ass this. Here is one of many:


Between frames you take something that should be casting shadows and draw it into a texture. Draw it from the point of view of the light, pure black.


Then stick it on a panel, and position the panel so that it’s projected away from the light.
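Here is a minimal sketch of that "position the panel" step, assuming a single point light and a flat floor at a fixed height. The function names and the tuple-based vectors are made up for illustration; this is not code from any particular engine. Each corner of the silhouette is pushed along the line from the light through that corner until it hits the floor, and those landing points are where the panel corners would go.

```python
def project_onto_ground(light, point, ground_y=0.0):
    """Project `point` away from a point light onto the plane y = ground_y.

    Assumes the light sits above the point and the point is at or above
    the ground plane (both are illustrative simplifications).
    """
    lx, ly, lz = light
    px, py, pz = point
    if ly <= py:
        raise ValueError("light must be above the point to cast a shadow on the ground")
    # Parameter along the ray light -> point at which it reaches the ground plane.
    t = (ly - ground_y) / (ly - py)
    return (lx + t * (px - lx), ground_y, lz + t * (pz - lz))


def shadow_panel_corners(light, silhouette_corners, ground_y=0.0):
    """Project every corner of the silhouette quad; the results are the panel corners."""
    return [project_onto_ground(light, c, ground_y) for c in silhouette_corners]


# A corner halfway between the light and the floor lands twice as far out.
corner = project_onto_ground((0.0, 10.0, 0.0), (1.0, 5.0, 0.0))
```

The closer a corner gets to the height of the light, the larger `t` becomes, which is the same runaway shadow length that shows up in the list of drawbacks.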


The bad things about this system:

- How many little textures do you need to draw? You need (number of lights) × (number of things to cast shadows). That scales poorly, particularly when it means a lot of fiddling around to get those panels positioned properly.

- The larger the shadow, the more texture memory you need. This sucks, because there’s no upper limit on how long a shadow can be. If we’re casting a shadow at an extreme angle[2], then we can either devour tons of memory, chop off the shadow at some arbitrary cutoff point, or lower the resolution of the texture, making the edges more pixelated.

- No matter how much texture memory you use, the edges of the shadow are always a little ugly.

- This technique is really only useful for stand-alone objects. You would get very poor results (bordering on disastrous) if you tried to use it to have the interior walls of a building cast shadows.

- The shadows “stack”. So if I’m standing in the shadow of a large object, I will cast an even darker shadow on top of it.

- The shadows really only appear on fixed surfaces like floors and walls. One character isn’t going to cast a shadow on another, and the item on the table will sometimes cast this odd shadow under the table.
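To put rough numbers on the first two complaints, here is a back-of-the-envelope sketch. Everything in it is an assumption for illustration: square single-channel textures, one texture per light and caster pair, and a shadow on flat ground whose length is the object height divided by the tangent of the light's elevation angle.

```python
import math


def shadow_texture_count(num_lights, num_casters):
    """One silhouette texture per (light, caster) pair."""
    return num_lights * num_casters


def shadow_texture_bytes(num_lights, num_casters, size, bytes_per_texel=1):
    """Total memory if every silhouette is a size x size texture (assumed layout)."""
    return shadow_texture_count(num_lights, num_casters) * size * size * bytes_per_texel


def shadow_length(object_height, light_elevation_deg):
    """Length of a shadow on flat ground for a light at the given elevation angle."""
    return object_height / math.tan(math.radians(light_elevation_deg))


textures = shadow_texture_count(4, 20)        # 4 lights, 20 casters
memory = shadow_texture_bytes(4, 20, 256)     # 256x256, one byte per texel
noon_ish = shadow_length(2.0, 45.0)           # as long as the object is tall
sunset = shadow_length(2.0, 5.0)              # more than ten times longer
```

The length heads toward infinity as the elevation angle approaches zero, so any fixed texture size has to give somewhere: memory, a cutoff, or blockier edges.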

There are ways to mitigate these problems, but only at the expense of more processing. And no matter what you do, it always feels like a bit of a hack. I experimented with shadows like this way back in the early aughts, and I hated the entire system. For all this fiddling around, there are still situations where they look more ugly and incorrect than those cheap and easy blob shadows of yesteryear.

This is just one shadow solution of many. And to be fair, I think this was the worst one. It’s certainly the worst one that I tried.

For static stuff we could bake shadows in. The Quake games had shadows that were created by the level editor. They looked amazing for the time period. The drawback was that the shadows couldn’t move. If I put a shadow on some bit of moving wall or platform, then it would carry the same lighting with it whenever it moved. If I had a lift shaft that was brightly lit at the bottom and dark at the top, then it would look horrible no matter what. Either the platform would be lit at the bottom position and then seem to glow when it was at the top, or it could be lit at the top position and be jarringly dark when it got to the bottom.


This is a secret wall in Quake. The level is lit with the panel fitted seamlessly into the wall, where lights can’t reach that edge. So when the panel slides open, the edge remains black.

John Carmack called this the “Hanna-Barbera effect”. All animators suffered from this to some degree. Even Disney. But HB – who were pretty aggressive and inventive with their cost-cutting – were particularly bad about it. The moving scene details would be flatter and simpler than the more lavishly drawn static backgrounds, which made them stand out. You could tell ahead of time that the precipice of rock was going to fall away when someone stepped on it, because the rock didn’t match the rest of the scene in terms of lighting and color.


What is going on with this wooden floor? Is it supposed to be polished? (It’s not a reflection.) Or are those shadows? (They’re too bright.) Or are the colored patterns supposed to be marks on the floor? (They don’t look like it.)

Anyway. The rake in the foreground is drawn flat-color while the background is drawn using fancier shading. This makes the rake stand out, so it’s obvious it will be a moving object in the upcoming scene.

So you’ve got all these different shadow and lighting techniques. One is good for small stuff that moves. Another is good for static walls. Another is great for objects that need to receive light but don’t need to cast shadows. So you’d have all these different lighting systems, and they didn’t really interact intuitively.


I know it’s popular to dump on this game, but I still really dig the first half or so.

One of the breakthroughs of Doom3 was the unified lighting model: It was designed to do away with these various hacks and tricks. There wasn’t one technique for walls and another for furniture (with a special side-case of usable items) and another for people. There was one pipeline for everything.

This has an interesting effect on complexity. The unified lighting system was at least as complicated as what came before, but all of that complexity, once managed, could be shoved into a box and you could forget all about it. You didn’t have special case-checking right in the middle of the game code, and the artists just had to concern themselves with a few basic rules[3] and not have to be aware of the more technical systems underneath. Art was as challenging as ever and the code was as complex as ever, but the two were no longer mixed together in a big soup of compromises, exceptions, and special-case uses.

I really like this, and I think it’s a good place to start messing around with advanced shaders. The techniques and theory are pretty well documented, so I shouldn’t need to invent anything new.

Onward.

Footnotes:

[1] And then I resort to that later anyways.

[2] Like at sunset, for example.

[3] Limits on the number of lights shining on a particular surface, for example.

How to Forum

Dear people of the internet: Please stop doing these horrible idiotic things when you talk to each other.

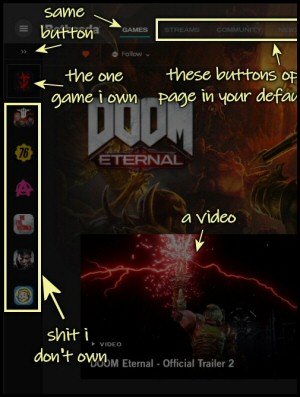

Bethesda’s Launcher is Everything You Expect

From the company that brought us Fallout 76 comes a storefront / Steam competitor. It's a work of perfect awfulness. This is a monument to un-usability and anti-features.

Charging More for a Worse Product

No, game prices don't "need" to go up. That's not how supply and demand works. Instead, the publishers need to be smarter about where they spend their money.

The Strange Evolution of OpenGL

Sometimes software is engineered. Sometimes it grows organically. And sometimes it's thrown together seemingly at random over two decades.

Another PC Golden Age?

Is it real? Is PC gaming returning to its former glory? Sort of. It's complicated.

T w e n t y S i d e d

T w e n t y S i d e d

Ah, the good ol’ days. I have distinct memories of playing Unreal on a machine with a Voodoo2 card and falling off of cliffs because the scenery was so amazing. I had a save game from on top of the waterfall outside the prison ship and anybody I could wrangle got to see me fall off the edge as I excitedly pointed out how good the water looked.

The smoke pouring off the rear tires in Grand Prix Legends…I always had horrible starts in that game, watching the cars in front of me rocket away down the straights at the Nurburgring.

*sigh* Increasing the number of polys by an order of magnitude in game X+1 just isn’t the same.

It is interesting to note that Unreal Tournament had poorer quality. They removed things like detail textures. You can actually load up Unreal levels inside Unreal Tournament, and it’s really interesting to see the difference in quality, which I assume is due to multiplayer limits. Just look at a wall really close up in both sometime. I think things like fog were also affected. I do miss those games though.

On topic, another game I liked with fairly simple 3D techniques was Battlefield 1942, which also had prerendered shadows right on the terrain. You could see this on some levels if you loaded up a mod that allowed you to fly jet planes. I did that one time on the Wake Island level and flew really fast out into the ocean. The game repeated the level in all directions, minus the objects, houses, and trees: just the terrain for looks, as you were never meant to be able to fly that close to it. Anyhow, I was able to fly over the duplicate and see the shadows on the terrain without the buildings.

And they rarely draw shadows for the player character in a First Person game, which definitely decreases the amount of high quality shadows required as compared to Third Person games.

Keep the movables far away and the corridors dark.

Ooo…shadows. I’ve looked at how to do shadows with OpenGL shaders a few times in the past but most of the time the explanations have gone over my head. I’m looking forward to your description then.

Two words: ray tracing.

Okay, a few more words. Real time ray tracing has been achieved as a proof of concept some time ago already, and I am still eagerly awaiting its break-through. I am no expert in graphics programming in general or ray tracing in particular, but what I have learned so far, is that compared to the traditional “scanline” rendering method, ray tracing

– Can produce 100% authentic looking lighting and shadows directly, making many of the current special graphics features obsolete, for example stuff like “ambient occlusion”.

– Scales better with parallelization.

– Scales better with increasing complexity (i.e. number of triangles in a scene)

The last two points seem most important to me because that has been the trend indeed – ever more parallel computation in the GPU, and ever more complex landscapes, objects, character models etc. As far as I understand it, there is a sweet spot beyond which ray tracing automatically has the better performance due to the sheer pressure of numbers, and we can expect to reach this point in our lifetimes.

Or maybe we have surpassed it already? I am not up to date on the developments in this area.

Doesn’t ray tracing scale negatively with resolution? If each pixel is its own ray?

And if you do reflection and/or ambient lighting, each ray has to ‘bounce’ multiple times in order to calculate the true colour of the pixel?

As far as I am aware, ray tracing scales linearly with the number of pixels. Due to parallelization, a higher resolution could be offset with more GPU cores working in parallel.

It’s worth remembering that fill rate is a common bottleneck for traditional rendering too. The cost scaling with resolution is really not unique to raytracing.

Are you sure about it making ambient occlusion obsolete?

Of all things I would assume ambient lighting models can not easily be reproduced by ray tracing, since they model infinite scattering behaviour.

AO is easy to do with ray-tracing (It’s the default Blender AO method). You can do environmental lighting with ray-tracing as well. Though, as you say, it does require multiple hops, it’s usually only two or so before the margins get thin enough to not matter. You certainly don’t need to do an infinite number of calculations.

As with anything mathematical, there are two approaches, the analytical, and the numerical. The analytical produces an exact answer using carefully constructed formulas. It’s not necessarily the correct answer, but it’s fast. The numerical approach is basically brute force. It’s slow and memory intensive, but can also deal with much more complicated problems.

Ray tracing is numerical. Current gen graphics are analytical. Eventually we’ll have enough processing to make real-time ray-tracing feasible… but it’s never going to be faster than analytic shading renderers. You don’t use ray tracing in games for the same reason that you don’t run FEA on ball bearings. There’s an established analytic model that works well enough already.

Quite a lot of shaders trace rays, like steep parallax mapping shaders, for example. That works nicely on the GPU where all of the rays are hitting the same thing, but doing it with rays bouncing around the entire scene is very expensive and much harder to do while getting the benefits of a GPU’s parallelism.

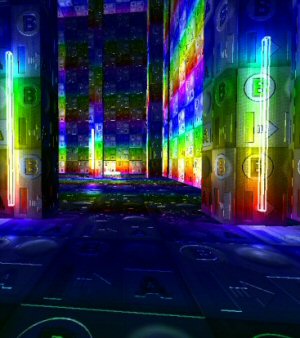

On the other hand, I wrote a shader which traces rays through two depth maps, using depth from the camera and depth from the light source to give god rays, volumetric lighting and shadows, which runs surprisingly well. I reckon it’d actually be quite easy to implement some sort of ambient occlusion and faux-global illumination in the same system. The sort of reflections you get out of a classical ray tracer? Not so much.

It looks surprisingly good too! What’s the system load like?

Thanks! The performance is “good enough” but you wouldn’t want to try to do all of your lighting that way. In my (extremely amateurish) renderer, drawing a pretty large area of terrain and with a GPU water simulation, I’m getting about 30fps. There’s a lot of other post processing, including reasonably good quality ambient occlusion. This is running in OS X, which doesn’t exactly help performance :-/. The whole thing is very fragment bound though – I’m using a framebuffer one quarter of the dimensions of the main buffer. Not surprisingly it scales with the square of the screen area and linearly with number of samples along each ray. I might save a bit of performance if I combine it with SSAO rather than doing each separately.

So, in conclusion, this sort of thing only really works at interactive rates because you can render transparent glowing effects at surprisingly low resolutions and still have them look okay :-)

If you’re mathematically inclined, there are some recent ways to speed up the raytracing for this effect: http://graphics.tudelft.nl/Publications-new/2014/KSE14/KSE14.pdf

http://groups.csail.mit.edu/graphics/mmvs/

I tried to implement a simple version of this but got caught up with the scattering equation: http://www.cse.chalmers.se/~uffe/multi_scatter.pdf

I just hacked together a fragment shader so the “volumetric light” could be used in the normal transparent objects render pass. Looks ok – we weren’t going for realism though: https://patrickreececv.files.wordpress.com/2014/06/screen2.jpg

Thanks, that’s really interesting although you very nearly broke my brain… I do wonder exactly how much graphics memory that method uses, though, since they’re basically making a 2D texture for each scanline. It does look as though the technique can keep up with re-drawing all of those textures when the light moves, which makes it a lot more convincing. I suspect I’m too dumb to implement it without a good long think, though!

I did try the approach of rasterising shadow volumes, but it was much more trouble than it’s worth – it’s slow, working out whether or not the camera is inside the volume is a pain, and it doesn’t even look all that good with lots of aliasing artefacts jumping around; also trying to do it with a cascaded shadow map is very tiresome.

Yeah, what little I’ve heard and understand of ray tracing (which is, to be fair, not a whole lot) is that ray tracing can produce some really good results that look fantastic, but say bye-bye to a nice smooth framerate.

My own knowledge is limited, but if I understand correctly, part of the problem is that ray tracing needs general-purpose processor cores, and your computer isn’t going to have more than 32 or so (probably 4 or 8). Meanwhile, modern GPUs have mind-boggling numbers of cores, but they have really strict limitations that make ray tracing difficult.

We’ve been hearing promises that real-time ray tracing just as good as triangle rasterization is just around the corner for more than a decade now, and it’s never been true. While ray tracing tech advances, the fake ways advance too.

By way of example, Pixar’s rendering engine tries to avoid ray tracing because it’s so slow. It can do it, and does in some cases, but even Pixar with their massive render farm is using the cheating methods. (Although different methods than you’d use for real-time graphics.)

Remember Larrabee? 128 generic core chips? Perfect for ray tracing, but technically never happened.

Weirdly, mobiles are getting real-time raytracing before desktops: https://www.youtube.com/watch?v=QTuf3ed0Jo4

Apparently the GPU hardware has some differences making it more feasible for handheld devices.

“Or maybe we have surpassed it already? I am not up to date on the developments in this area.”

Voxlap uses real-time raytracing. Which means that Ace of Spades does as well, but you can’t tell because they scaled up the voxels to Minecraft-block size. Most people ignore the engine because it’s just a quiet open-source hobby project released by Ken Silverman and isn’t multiplatform and doesn’t even have any marketing. But it’s fast voxel-based real-time raytracing already used in a commercial game.

Two more words: path tracing. It’s ray tracing’s bigger cousin, and it is perfectly happy on the GPU. The problem is that we need GPUs orders of magnitude faster and with more cores than we have today. Give it another 10 – 20 years and we will probably see something like this emerge.

EDIT: Here is a fun demo. https://www.youtube.com/watch?v=pXZ33YoKu9w the noise is still a huge issue.

Thanks for the links. This is the coolest thing done with a Monte-Carlo algorithm I have ever seen. :D

Huh.

About five years ago, in college, my radiant heat transfer class basically used this method to simulate focused sunlight entering a cylindrical solar furnace, to find the temperature profile within the chamber. It was a complicated cylinder, where emissions and reflections depended on temperature, but it was still just one surface.

Since I was the only one in the class that knew C++, I had the distinguished honor of only having to wait 30 seconds for my simulation to converge to an acceptable error level. The rest of the class used (unoptimized) MATLAB code, which took on the order of half an hour.

It’s really amazing that anyone is thinking about doing this in real time five years later.

So…another 20 years after that and I’ll have a laptop-GPU powerful enough for it? :P

I bolted a path tracer onto my ray tracer a couple of years ago.

You can see it here

The good thing about doing this was that once the ray tracer had been built, the only thing that needed to be done to get a path tracer working too was to reverse the algorithm. Fire rays into the scene, and then rather than work out lighting for a single event, KEEP tracing it through random bounces until it hits the light source.

There has to be some depth limit, otherwise you risk overflowing, and it works FAR better in a completely enclosed scene.

The linked image is a mock-up of a Cornell box – 6 planes make up the ceiling and the walls, nothing can escape. The colour bleed is most obvious on the matte sphere itself. You don’t get anything fancy like fuzzy shadows or colour bleed with vanilla ray tracing.

Slow though. Very slow. It took a few hours to run. It was CPU only, I still think occasionally about porting it to the GPU.

Wish I’d put up some additional pictures.

Edit- and that Brigade video puts me to absolute shame. I have to remind myself that I do this for fun, and try not to be jealous.

See, to me it would be interesting if someone used this tech to reproduce impressionist paintings. I know we can’t render 1080p at 30fps (or even 24). Maybe we can render sub-720p, then scale up and essentially run a maximum filter (as in Photoshop) over the whole image. I think that would compensate for the noise and create a neat look. You would also have to clamp the number of samples you take, so that the image doesn’t just brighten for no reason once you stop moving. I know I’m oversimplifying things. The guy can only run his latest demo on two Titans, but we can dream.

What really broke Doom 3’s lighting, and this isn’t immediately obvious but once you’ve noticed it or had it pointed out, you can’t help but see it in almost all scenes and screenshots: there’s no real ambient lighting.

Sure, there are things that can cast glows onto other things (the blue plasma gun being the principal one), but light never reflects off of anything. So you can have pitch-black areas right next to brightly-lit areas, which does not happen in reality.

Yeah, people don’t seem to realize it, but ambient lighting is way more important for spatial congruity than direct shadows are. That’s why the “blob on the ground” works so well, because it’s quick and dirty Ambient Occlusion.

*nod*

The best-lit scenes are those where an artist places light sources and decides how shadows should fall. A good artist can make a scene look way better than an automatic lighting and shadow routine could.

The only thing that can do better is ray tracing (and similar) but that is so processing intensive.

I guess it’s the uncanny valley issue.

Which means that faking it may look better than trying to simulate it.

(you can only simulate it as emulating it is too computationally intensive).

“It was a stark, ugly existence where everything was out of scale and the pixels were so big you couldn't tell the giblets from the bullets.”

Originally, when the 6502 microprocessor had just come out and non-recorded graphics became possible, you had to make compromises just to display something on screen that was representative of something.

But recently I’ve come to realize that we’ve sacrificed something incredibly important. I’d been aware since at least the late 90s that games of my youngest days compensated for their lack of graphical fidelity by making you use your imagination. As a kindergartener who was constantly being told by the Muppets that Your Imagination Is The Best Thing Ever, 1980s video games were a completely intuitive extension of that. It was obvious.

But more recently I’ve come to realize that it’s not strictly a matter of graphical fidelity, or iconography. It’s what I call Abstract Perspective.

Super Mario games are side-view perspective. Tank is top-down perspective. Pac-Man and The Legend of Zelda are not viewed from a single perspective, but multiple perspectives at once. Consistent, representational perspective where each kind of thing is viewed in the same way and doesn’t change, but the perspective may be different for different things.

For example, the Pac-Man maze is assumed to be top-down or some kind of isometric (like the later Pac-Mania), but Pac-Man himself is viewed side-on. The power pellets and fruit can’t possibly be the same scale as everything else. The ghosts are actually moving backwards, or possibly facing south, when they move up/north. But it all feels fine, and doesn’t look awkward, because everything is assumed to be an abstraction.

The way Link and most monsters move in The Legend of Zelda implies a top-down perspective, but he is actually viewed side-on or somewhere between top and side. All of the trees are side-on. The dungeon rooms are top-down. The keys and other items are not remotely to any kind of scale. Some of the bosses look side-on (the multi-headed dragon), but others look more top-down (the floating eyes). Piles of rupees look exactly the same as individual rupees and negative rupees. Dialogue floats in mid-air, or possibly on the ground.

Early video games have much in common with cubism and M.C. Escher’s work. I think it’s tragic that current “retro style” usually means chunky pixels but a uniform perspective while overlooking the fact that proper imitation of the style requires an abstract perspective. But even more tragically, this style can be imitated with high fidelity, but no one seems to even be attempting it.

The Spoiler Warning episode where you complain that they resized the chests and you can tell because the smaller chest has a proportionately small lock is an example where it is dissonant because the rest of the game is mostly to-scale or when things are scaled differently, it is less easy to notice (like with rocks). I say that someone should make a game where the art direction deliberately, carefully screws with perspective, scale, coloring, and even texturing.

Team Fortress 2 is almost an example of this, but the “cartooniness” justifies the impossibly large hands and such. I want something that screams “THIS IS A VIDEO GAME AND YOU NEED TO INTERPRET WHAT YOU SEE TO UNDERSTAND WHAT IS ACTUALLY HAPPENING. FOR EXAMPLE I KNOW APPLES ARE NOT THE SIZE OF YOUR CHEST AND SOMETHING THAT LARGE COULD NOT BE EATEN WITH ONE BITE. LIKE I JUST SAID YOU ARE PLAYING A VIDEOGAME AND VIDEOGAMES ARE ABSTRACT SO DEAL WITH IT.”.

Like if they made Skyrim with the same art budget but deliberately tried to make it look like Ultima I-V. And had one palette of no more than 256 colors for texturing everything. And if anyone even said the word “desaturated” they would be drawn and quartered.

Heck, even making Skyrim with the graphic/art style of Team Fortress 2 would have been a huge improvement. Probably could have saved a metric buttload of money, to put towards making the engine, interface, and quests better. :)

Example: the more the Civilization games have represented units, the less acceptable unexpected battle results have become. In the first game, when a tank met head on with a knight, these were just square icons meeting head on. Annoying to see your tank destroyed, but ok. Plus, on the abstraction side, you could always reason that the square icon may have represented a lot of knights, all facing off against a single tank.

The more representative the art becomes, the less palatable this handwaving becomes.

Shadows

Looking around the room right now I see a lot of shadows with a 50% opacity (or there about), the edges of the shadows are diffuse/smooth.

The shadows themselves are very solid and could easily be recreated using projected shadows (like in the example photo in Shamus’s initial post).

The room here has (if we ignore the light from the monitors) the light from the hallway, the light through the curtains (from the window), the light from a lamp at the table edge near the wall pointed upwards (for an indirect lighting effect), and the ceiling light.

The dominant light is the ceiling light, the other lightsources do not really contribute much to the shadows cast, they may be responsible for diffusing the edge of the shadow though.

In most cases there is one dominant light source, creating a projected shadow based on that and diffusing the edges of the shadow should look nice in most cases.

But what about multiple light sources? In that case you end up with several weaker shadows (usually) pointing in all directions which do not look all that impressive in my opinion (I’m talking in real life here).

Single projected shadow with diffuse edges plus some form of ambient occlusion should give you something that looks good.

If the level/areas are designed well (and the plot/story told well maybe with time of day jumps for variation) you can strategically place the primary light source to make it varied and striking.

Processing-wise this is also very cheap (as opposed to multiple volumetric shadows), and some of the shadows can be pre-baked into the room or done during loading of the level. (Myself, I prefer stuff getting calculated at load time.)

EDIT:

Long shadows are tricky, but if the light source is low and the object casting the shadow is small then fading out the shadow the further away it is from the object casting it may be acceptable.

If the object casting the shadow is very large (skyscraper?) then you could fake it, I guess, by just storing the shadow as a sized-down version of the object (so it hardly uses texture memory) and then scaling it up when drawing it over the terrain/ground. If you can blur the edges of the projected shadow then nobody should notice that the shadow texture is low resolution (since it’s that huge, the overshadowed terrain itself should help mask that). You could probably even reuse it: multiple skyscrapers could use the same shadow, with the edges blurred and scaled up, then multiple copies pasted over the terrain.

Actually, now that you mention it, I am confused.

The way Shamus described it, why would the shadow take up more texture memory for long projected shadows? Aren’t you only storing the silhouette of the object doing the casting, then projecting/deforming it onto the landscape? Where’s the memory load come from?

He’ll probably point it out.

But my guess is that he re-sizes the stencil (right term?) before storing it in texture memory, which is kind of backwards in my opinion, you may be able to get nicer edges if you blur them this way, but I don’t think most people would notice.

In fact a stored shadow texture could be smaller than the object it’s made from, especially if it’s to be blurred a lot or will have low opacity.

BTW! A shader that quickly and roughly blurs the edge of a “black” texture should not be that difficult, then just alpha blend the shadow on the terrain or wall etc.
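A rough sketch of that blur-then-blend idea, done on the CPU in Python rather than in an actual shader. The function names, the 3x3 box kernel and the 0.6 maximum opacity are all assumptions made up for illustration; nothing here comes from the post itself.

```python
def box_blur(mask):
    """Average each cell with its 3x3 neighbourhood (edges are clamped)."""
    h, w = len(mask), len(mask[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    sy = min(max(y + dy, 0), h - 1)
                    sx = min(max(x + dx, 0), w - 1)
                    total += mask[sy][sx]
            out[y][x] = total / 9.0
    return out


def blend_shadow(ground, coverage, max_opacity=0.6):
    """Darken a ground brightness (0..1) by shadow coverage (0..1)."""
    alpha = coverage * max_opacity
    return ground * (1.0 - alpha)


# A tiny hard-edged "black" shadow mask: 1 = in shadow, 0 = lit.
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
soft = box_blur(mask)
shaded = [[blend_shadow(1.0, c) for c in row] for row in soft]
```

After the blur the mask no longer has a hard 0-to-1 step at the edge, so the blended result fades in instead of showing blocky texels. A real shader would do the same averaging per fragment with texture lookups.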

Damn you Shamus, now I kind of feel like messing with (and learning) OpenGL myself and I’ve got all these other projects I really should finish first…damnit!

Stand beside a lamp, and hold your hands up in the shape of a box, outlining a shadow cast by the lamp shining on a nearby object. Try to get it so half the shadow is on a desk, and half is far away on the wall. Now imagine that your little finger-rectangle is only 10×7 pixels (for simplicity). Those pixels are the amount of memory you’re spending on making that one shadow. Now look at the close-up part of the shadow, versus the part which is cast far away, on the wall. The close-up shadow has pixels which are about a centimeter wide, and the pixels on the wall are about 10 cm wide. So, you either spend more pixels (memory) making the far-away shadow look less blocky, or you hope the player doesn’t notice the ugly shadows.
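The arithmetic behind the lamp example can be sketched like this. It assumes a simple spotlight-style projection where the shadow map covers a fixed field of view, so the patch each pixel covers grows linearly with distance from the light. The function names, the 90 degree field of view and the distances are assumptions picked to reproduce the 1 cm versus 10 cm figures.

```python
import math


def texel_width(distance, fov_degrees, texels_across):
    """Width of one shadow-map pixel where it lands, `distance` from the light."""
    spread = 2.0 * distance * math.tan(math.radians(fov_degrees) / 2.0)
    return spread / texels_across


def memory_factor(near_distance, far_distance):
    """How many times more pixels are needed for the far shadow to look
    as fine as the near one (resolution scales on both axes)."""
    return (far_distance / near_distance) ** 2


desk = texel_width(0.05, 90.0, 10)   # surface 5 cm from the light
wall = texel_width(0.5, 90.0, 10)    # surface 50 cm from the light
print(desk, wall, memory_factor(0.05, 0.5))
```

With these numbers the desk pixels come out around 1 cm, the wall pixels around 10 cm, and matching the desk quality on the wall costs roughly 100 times the memory, which is the trade-off being described.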

Nope, you just blur the edge/details.

Also if half the shadow is on one object and half is on another then that is actually two shadow projections (one for each plane) so you could use different amounts of blur.

One issue though (as pointed out by Shamus in the original post) is that you could end up with dozens of these.

So when faking it you kind of have to choose a plane to focus on (plane distance = blur amount).

“the pixels on the wall are about 10 cm wide. So, you either spend more pixels (memory) making the far-away shadow look less blocky”

Actually, “doing the lamp thing” here the more distant cast shadow is more blurry so detail wise it’s the same as a close shadow (which has sharper edges).

I was elaborating for Abnaxis, Shamus’ explanation of old-school shadows, not your proposed method. :)

But what good does using more pixels to store the texture do, unless you are doing some other processing (like blurring or alpha blending like Roger said) to your shadow texture? Otherwise, all the blocky edges will be in the same place, you’re just using more pixels to cover that 10cm in the same shade, aren’t you?

They’ll be smaller blocks. So, yeah, up close in the 10 cm area, it’s already got small enough blocks, and you’re wasting memory. But the farther shadows become less blocky, and more smooth.

Just wait until you have to start thinking about the temporal stability/aliasing issues! This point happens immediately after you’ve dealt with “crawling” shadows caused by a lack of depth precision. You sit back, finally satisfied that you’ve made a nice visual effect… And then immediately notice huge blocks of shadow flickering on and off in the background. (That’s why the shadows in Skyrim only move “on the hour”, and look terrible while doing so.)

Yeah the Skyrim shadows are weird, especially since they seem to be blended/morphed when they change too(!).

I guess one way that would be better (as I mentioned in past comments) is to up the quality when the player is still and then cheat when they move.

For example Skyrim could have added high rate shadows when standing still (the camera that is),

and when the camera/player is moving, the faster they move the faster/rougher shadow updates can be,

if done right it could even give a sense of greater traveling speed,

it also gives the nice effect of the longer the player “travels” the more time seems to pass,

while just standing still time moves slower.
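One way to read that suggestion, as a minimal sketch: pick the shadow quality and the size of each sun-position jump from the current camera speed. The specific numbers (2048 down to 512 for the map size, 1 to 60 game minutes per jump, a top speed of 10) and the function names are assumptions for illustration only, not how Skyrim or any other engine does it.

```python
def lerp(a, b, t):
    """Linear interpolation from a (t = 0) to b (t = 1)."""
    return a + (b - a) * t


def shadow_settings(camera_speed, top_speed=10.0):
    """Return (map_size, sun_step_minutes) for the current camera speed.

    Standing still gives a large shadow map and tiny sun steps (smooth);
    moving fast gives a small map and big, rough jumps.
    """
    t = min(max(camera_speed / top_speed, 0.0), 1.0)
    map_size = int(round(lerp(2048, 512, t)))
    sun_step_minutes = lerp(1.0, 60.0, t)
    return map_size, sun_step_minutes


for speed in (0.0, 5.0, 10.0):
    print(speed, shadow_settings(speed))
```

Clamping `t` means sprinting past the assumed top speed just stays at the roughest setting rather than producing nonsense values.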

On shadows I forgot to mention that the closer an object is to (let’s say) the ground or a wall, the higher the opacity of the shadow; the further away, the lower the opacity. The edge of the shadow changes as well: the farther away the object casting the shadow, the bigger the blurred edge becomes.
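As a minimal sketch of that rule, assuming a plain linear falloff (real penumbras depend on the size of the light, so this is a fake, not physics). The function name and the default limits are made up for the example.

```python
def shadow_look(caster_distance, max_distance=5.0, max_opacity=0.8, max_blur=8.0):
    """Return (opacity, blur_radius) for a caster `caster_distance`
    away from the surface its shadow falls on."""
    t = min(max(caster_distance / max_distance, 0.0), 1.0)
    opacity = max_opacity * (1.0 - t)   # close caster: dark shadow
    blur_radius = max_blur * t          # far caster: wide soft edge
    return opacity, blur_radius


for d in (0.0, 2.5, 5.0):
    print(d, shadow_look(d))
```

The same falloff doubles as the fade-out for long shadows from small objects: past `max_distance` the opacity reaches zero and the shadow can simply be skipped.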

Code reuse

I do reuse code a lot.

For example I have an archive folder with several include files.

These includes have a version number in their filename.

When I use an include I copy it to a project’s include folder.

If I end up editing the include for some reason then those changes are applied to the include in the archive folder and the version number in the filename is increased.

At first the version numbers climb rapidly, but after a while only the minor version number changes, and in some cases a file may remain unchanged for a year or more.

Why the version in the filename you might ask…

Well, if I make changes then it’s better that the compile breaks so I don’t end up using the wrong include file (which may have changed how things work or changed the error codes returned from a function, etc.).

It also gives me a simple versioning scheme without having to use a version control system.

And it allows me to keep the include file with the project, that way I won’t ruin all projects if I delete/change files in the archived include files folder.

So what kind of include files are these? Well, anything from an internet download include (handy for fetching files from the net to check for version/updates) to things like reading/writing to the Windows registry and getting default paths. They also keep things consistent from Windows 2000 up to Windows 8.1, as various things do change in the APIs, so my includes have stuff that deals with that (so the main program does not have to have special code added to deal with it).

The primary benefit though is that of iteration: each improvement will benefit not just the current project but future ones, and should an older project need to be recompiled then a few tweaks and it can take advantage of the improvements too with minimal time needed.

I try to maintain the version such that it is:

Example_1.3.inc

With “Example_” being the include name and “.inc” the include file extension.

1.3 is the version with 1 being major and 3 being minor.

If only the minor version changes then the include is “call compatible” and any changes done are such that the include can be used by other projects that use the version 1 include also.

If the major version has changed then the changes are such that any projects using the include would probably need some tweaks to make everything compatible again.
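A small sketch of how that naming scheme could be checked automatically. The helper names and the sample archive listing are hypothetical; only the `Name_major.minor.inc` pattern comes from the description above.

```python
import re

# Name_major.minor.inc, e.g. Example_1.3.inc
INCLUDE_NAME = re.compile(r"^(?P<name>.+)_(?P<major>\d+)\.(?P<minor>\d+)\.inc$")


def parse_include(filename):
    """Split an include filename into (name, major, minor)."""
    m = INCLUDE_NAME.match(filename)
    if m is None:
        raise ValueError("not a versioned include: " + filename)
    return m["name"], int(m["major"]), int(m["minor"])


def newest_compatible(current, archive):
    """Pick the newest archived include that is call compatible with
    `current`: same name, same major version, higher minor version."""
    name, major, minor = parse_include(current)
    best_minor, best_file = minor, current
    for candidate in archive:
        try:
            c_name, c_major, c_minor = parse_include(candidate)
        except ValueError:
            continue  # ignore files that do not follow the scheme
        if c_name == name and c_major == major and c_minor > best_minor:
            best_minor, best_file = c_minor, candidate
    return best_file


archive = ["Example_1.4.inc", "Example_1.5.inc", "Example_2.0.inc", "Registry_1.9.inc"]
print(newest_compatible("Example_1.3.inc", archive))
```

Here the version 2 file is skipped because a major bump means the project would probably need tweaks before it could use it.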

Now all this is manual. It could be automated, and for large projects with multiple coders it probably should be.

But for smaller projects or solo projects this works very well.

The main point and benefit of doing this is for the code re-factoring / iteration: over time the code in the includes gets better and tighter, any loops get insanely tight and efficient, and you may even end up throwing some assembler in some smaller loops if you can.

There is one downside though, feature bloat, it is very easy to feel like you can add/improve things.

But if you stick to a less is more mentality it should remain pretty tight hopefully.

Each include either has only one function / procedure in it, or several related ones (like various Windows registry related functions) in an include called Registry_x.x.inc for example.

Over time this archive of includes will grow and you will soon realize they have become your coder toolbelt, or personal programmers library if one may call it that.

I also have a folder called just “misc” that has a bunch of one off standalone code examples which I sometimes go to if I forget how I did something or should do something, it’s messy but acts as a nice treasure chest. Basically it is just a bunch of test snippets, code I wanted to keep and ideas that just remained an idea.

That stuff sometimes ends up in a project or in the archived include files if good enough.

I do something similar. Except instead of include files I make static libraries out of them. And I don’t name them for versioning, I have git repos of each library, tagged with revision strings.

I use semantic versioning for the revision strings: http://semver.org/ It basically follows your scheme: x.y.z are major, minor and patch versions. Patches don’t change the interface of the library (improvements to code, etc). Minor versions add functionality, or deprecate parts of the interface. Major versions break compatibility by removing parts of the interface. So if you use version 1.0.0, you can change to 1.0.1 or 1.1.0 without worrying. It’s only when moving to 2.0.0 that you need to see what’s changed.
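The "change without worrying" rule can be sketched in a few lines. This is a simplified check that only handles plain `x.y.z` strings; it ignores the pre-release and build metadata parts of the semver spec, and the special treatment of 0.x versions. The function names are my own.

```python
def parse_semver(text):
    """Turn 'x.y.z' into a (major, minor, patch) tuple of ints."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def safe_upgrade(current, candidate):
    """True when `candidate` is newer than `current` and shares its
    major version, so the interface should not have been broken."""
    cur = parse_semver(current)
    new = parse_semver(candidate)
    return new[0] == cur[0] and new > cur


print(safe_upgrade("1.0.0", "1.0.1"))
print(safe_upgrade("1.0.0", "1.1.0"))
print(safe_upgrade("1.0.0", "2.0.0"))
```

Comparing the tuples directly works because Python orders them element by element, which matches major, then minor, then patch precedence.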

I think that so long as you keep libraries small and focused, and are careful about designing your interfaces, rewrites are not very traumatic. It’s unlikely that when redoing a piece of gui code your collision system will need an overhaul (unless you went and coupled everything very tightly, which is why I separate everything into libs). And you can get up to speed on any new projects by grabbing the pieces you need.

Your theory about code reuse does make some sense if you’re working on your own, self-contained, smallish-scale projects (and even then, as Roger Hågensen above me has mentioned, you’ll probably want to have your set of tools that you carry between projects).

But this is a pretty uncommon use case. As soon as you have a team, you’ll want to have an infrastructure that everyone can rely on. As your projects get bigger, you don’t want to have to rewrite every little detail every single time. And you definitely don’t want to have to write the basics for every single project, e.g., if you’re making a chat app you’re not gonna start with the TCP/IP stack because it’s already been competently implemented by other people.

THAT is why code reuse is regarded as the end-all-be-all of software engineering. Because without it, coding just isn’t scalable to any degree.

Yup, the culmination of code reuse and iteration (or at least it should be) is code libraries and middleware.

Sadly there are a lot of bloated libraries and middleware out there.

Not to mention libraries which have a non-uniform, unintuitive, etc, API. ^^;

I’m pretty green when it comes to shadows, but one thought that occurred to me is that one of the easier ways, and one which will (or should) look fine with stereoscopic 3D (like the Oculus Rift), is polygonal shadows.

The benefit of polygonal shadows is that the shadow is “physical” and in the world, so even if the camera changes position the shadow does not, and when rendering two views this is what you want.

A few shadow techniques break down with stereoscopic 3D, giving shadow in only one eye, or there is a shadow mismatch (as it is calculated differently for each camera/view).

A simple shadow based on projection from the light source should not change with camera position and should look completely fine in stereoscopic 3D.
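A minimal sketch of that kind of projection, assuming a point light and a flat ground plane at y = 0 (both simplifications of mine). Each vertex of the caster is pushed along the ray from the light until it hits the ground. Note that the camera never appears in the math, which is why both eyes would see the same shadow.

```python
def project_to_ground(light, vertex):
    """Project `vertex` onto the plane y = 0 along the ray from `light`.

    Both arguments are (x, y, z) tuples; the light must be higher than
    the vertex or the ray never reaches the ground.
    """
    lx, ly, lz = light
    vx, vy, vz = vertex
    if ly <= vy:
        raise ValueError("light must be above the vertex")
    t = ly / (ly - vy)  # how far along the ray the ground is hit
    return (lx + t * (vx - lx), 0.0, lz + t * (vz - lz))


light = (0.0, 10.0, 0.0)
# One triangle of the caster, floating above the ground.
triangle = [(1.0, 5.0, 0.0), (2.0, 5.0, 0.0), (1.0, 5.0, 1.0)]
shadow = [project_to_ground(light, v) for v in triangle]
print(shadow)
```

The resulting flat triangle can be drawn dark and semi-transparent just above the ground. Walls and uneven terrain would each need their own plane or a different technique.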

Another thought on shadows.

Since making a polygonal shadow of an object could eat a lot of cycles, while the detail it could give is welcome, one could also use a stand-in object.

This would be an invisible dummy object (a square, a circle, whatever) and the polygonal shadow would be created from that, but the size and angle would be based on the object rendered. With a blur/edge alpha/whatever this should (I hope) give OK but cheap (computationally) shadows.

Another thing to keep in mind is movement speed, the faster the player or camera moves the less detail you need, and when standing completely still you usually can afford to do more “expensive” shadows.

Although if you can pre-compute (at level load?) the polygonal 2D shadow of an object then you could re-use that.

Depending on your use you could even prepare various angles and store those: a left/right shadow, a top-down shadow and a front/back shadow.

Anyway, I’ll shut up now.

All of the methods of casting shadows in realtime appear to only allow for point light sources.

In the real world, there are few point sources of light.

Yeah, and if they are bright enough to light anything, they are also bright enough to blind you if you look at them.