Perlin Noise is a technique for quickly generating a metric crapload of really interesting pseudo-randomness. “Interesting” in that it forms nice organic patterns instead of pure random noise. “Pseudo Random” in that you can give it the same input and get the same output. “Crapload” means that you can make a final data set thousands of times larger than the noise you start with.

Note that in the context of this project I’m going to discuss Perlin in terms of 2D images, but I’m using it in 3D. It’s just easier to show you what we’re doing in 2D.

We begin with a basic image of really random noise, which I will depict as a 2D greyscale image. The more random the better. We want areas of light, dark, and medium brightness. We want it to be really diverse overall, but have small local clusters of bright or darkness. We don’t want large areas to be homogeneous, and we don’t want the small areas just just be a scatter of white and black pixels. We can accomplish this a lot of ways. I could churn out a bunch of values in a random number generator, for example. Or, we can just open up a new image in your Photoshop of choice, crank up the noise filter on a blank image, and hit save.

|

Awesome, right?

No. Obviously pure noise is boring.

To make Perlin noise…

Okay. According to Chris Serson below, this is not Perlin noise. This is… It’s complicated. The point is, when I Google’d for Perlin noise I ran into multiple pages that made the same error I made here. To avoid perpetuating this mis-naming of noise systems, I’m adding this note here.

I will say that it is hard to find a proper implementation of either Perlin Noise or the (sometimes preferred) Simplex noise. Most of the stuff I find is:

- Impenetrable jargon.

- Pages of indecipherable, Greek-laden maths.

- Example images of what the system can do, provided you can figure it out.

- A history of all the noise systems Ken Perlin has made, how he used them, and how people keep mis-attributing other noise systems to him.

So… not much in the way of code out there. In the end, what I have here works well enough for my purposes. If I need something more robust I can go on the quest for the One True Noise Algorithm at another time. Let’s just get on with this.

…we have to sample this image at different resolutions. Massive resolutions. Bigger than we would want to bother keeping in memory, really. What we do is take this noise and kind of zoom in on it. For example, here are the four pixels in the upper-left of our noise image.

|

Blending between these four values, we can come up with values in between. If it asks for the value 20% of the way across and 80% of the way down, we get this:

|

To be clear: We’re not calculating ALL of these values. It only creates the one pixel that was requested. I’m just showing you how it arrives at that value.

The neat thing is, I already had 90% of the code needed for Perlin Noise. For those of you reading through Project Frontier, check out MathInterpolateQuad (). It has everything you need for doing the above.

The terrain generator is feeding us coordinates. We’re dividing those values by a thousand, so that it would require a thousand samples (a thousand meters of scenery) to cover the gradient from one edge of this four-pixel square to the other. Of course, if we stopped here we would have some really boring, super-flat scenery.

So we take another sample. Instead of 1/1,000, this one is taken at 1/500. Then we take another sample at 1/250, and another at 1/125. Each level of noise is at twice the frequency of the one before. Then we add all of these samples together, giving them all equal weight. We average them.

The upshot of all of this is that we get this large pattern of interesting noise.

|

This might not look terribly useful, but we can use this to generate interesting topography. Obviously we could use this to make hills where brighter = taller. But we can also use it to generate underground scenery. Let’s say we set a threshold. Everything above a certain brightness will be hollow, and everything below that threshold will be solid rock.

|

Which gives us a system of caves. If we set the threshold higher, then instead of large caves we’ll get little pockets, like Swiss Cheese.

|

If we take a narrow range of values above one point and below another, then we get a bunch of passages, all twisty-like.

|

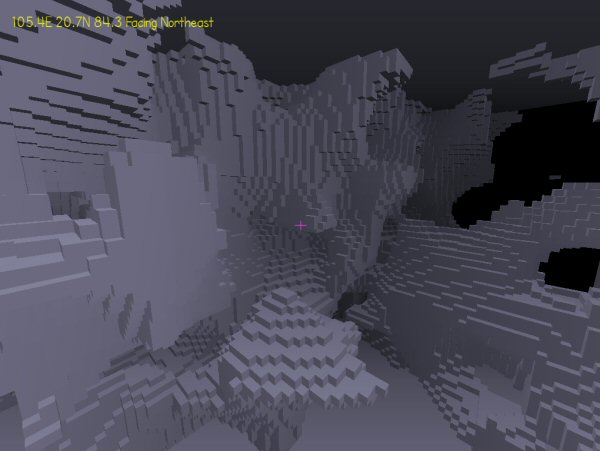

So let’s take this noise and feed it into our cube-world and see how it looks:

|

This is just raw Perlin output. I’m not doing anything fancy with it at this point. Eventually it might be worthwhile to combine different sets of noise. (Perhaps one to make hills, and another to bore tunnels in the hills.) Or to set up different ways of deciding what’s solid and what’s empty. (Mess with the thresholds.) Maybe I’ll stretch noise along one axis to make tall or long caves. Or flatten out the bottoms to make Star-Trek style caves with lumpy walls and floors level enough for championship billiards. (A classic trope. Actually, is it a trope? I’ve never seen it officially listed.)

|

One of the things I wanted for this project was to experiment with noise like this, and see what other sorts of shapes and places one can make.

|

So now we have a foundation for making scenery. I’m sure we’ll come back to this when it’s time to work on the output.

Fable II

The plot of this game isn't just dumb, it's actively hostile to the player. This game hates you and thinks you are stupid.

Mass Effect 3 Ending Deconstruction

Did you dislike the ending to the Mass Effect trilogy? Here's my list of where it failed logically, thematically, and tonally.

Skylines of the Future

Cities: Skylines is bound to have a sequel sooner or later. Where can this series go next, and what changes would I like to see?

Trashing the Heap

What does it mean when a program crashes, and why does it happen?

The Biggest Game Ever

How did this niche racing game make a gameworld so massive, and why is that a big deal?

T w e n t y S i d e d

T w e n t y S i d e d

[little nerdgasm]

Towel please!

significant chunks of that went right over my head….

but the results are weirdly pretty.

I wonder if there’s any way to do this project without it looking incredibly similar to minecraft…

Not before you write shaders that make the lighting and texturing look different. Before that, any block-based game will look like Minecraft.

Unrelated: I do not understand the explanation on the creation of Perlin noise. I understand its properties and how to use it, but the sampling stuff? Really unclear.

Still, any block-based 3D game is going to remind people of Minecraft, in the same way every 4X game reminds people of Master of Orion. Or every space combat sim of Elite. Or every MOBA of Defense of the Ancients. Or every pop-up shooter of Gears of War. Or every FPS-RPG of Mass Effect. Or… You get the idea.

I was getting a little unsure of your comparisons until you got to DotA…

In my mind, Civilization is the 4X everyone thinks of, and Wing Commander or Freespace is the space combat sim.

Googling Elite (which I hadn’t even heard of) and Master of Orion (wow that’s old) gives me the distinct impression that you’re pulling from a… deeper pool of games than I am.

My point was that Minecraft is a genre-defining game – as in, it might not be the first to have certain mechanics or design decisions, but is the first to gain enough popularity to ‘inspire’ several projects* – so it is completely natural that anything using even its superficial elements will remind many of it.

* And yes, I know, I know, Mass Effect was followed only by Alpha Protocol so it doesn’t really count.

What can I say, I used to read a lot of articles about videogames history – and now I am scarred for life.

Beat or equal minecraft at what it does, and someday all similar games will remind people of your game….just like your reference pool is younger than the OP’s

Minecraft had a LOT of hype to draw from though. It was a phenomenon. It’s very tough to get something like that without being really novel (at least in the experience of the common gamer/user), and it’s what helped make minecraft such a powerhouse later on.

Beating mass effect in its game by comparison, is a lot easier, in the sense that it’s more controllable; a better game can become the new marker for subsequent games. Being a better blockish game than minecraft would ‘just’ make you “that awesome minecraftian game”.

This all is not to say minecraft isn’t awesome or doesn’t deserve what it got (hype and all), but it’s something that needs the luck of seven gods to equal, let alone match.

Basically, he’s saying you generate random noise at different resolutions, smooth each one out and then stick them on top of one another.

So if you start with 256×256, you then get the top left quarter (128×128), stretch it out to the original resolution and average out all the missing pixels so you it’s kind of blurry. You now have two 256×256 noise textures, one sharp, one less so. You then repeat that with the top 64×64 of the original, expanding and interpolating and so on with 32×32, 16×16, 8×8, 4×4 all the way down to 2×2.

So then you have 8 256×256 textures, each blurrier than the last, you then average out each point on the image to create a single texture that’s an amalgamation of the others, and looks like the one in the post.

Assuming I understand correctly.

Yes, you can.

In 2d its pretty simple. Instead of using blocks, you use each pixel as the height of a point on a surface. Shamus used this method for his terrain in Project Frontier, just with a different image as the basis instead of a “perlin” based image.

In 3d the principle is the same – you use the information as a connected system of points instead of individual blocks. With the method Shamus is currently using (where he sets a threshold and ends up with the black and white blotch images) I think you could do it by setting anywhere where a black pixel edge meets a white pixel edge as a point, and connecting that point to any adjacent points.

After that, you have the trouble of figuring out which part of the shape is the inside and which is the outside (which is important if you want to be efficient and not render the side you cant see)…

First off, I just want to say I’ve been reading your blog for a few years now and I have a ton of respect for you. Your work has influenced my own research.

Having said that, I hate to burst your bubble, but you haven’t actually made Perlin Noise here. Perlin Noise is a form of Gradient Noise, while what you’re actually describing is Value Noise.

Ken Perlin posted a talk online which may interest you. On slide 15, he begins to describe the actual algorithm used to produce Perlin Noise. I would also recommend checking out the DirectX SDK as it has implementations of all 3 of his Noise algorithms (Perlin Noise, Enhanced Perlin Noise, and Simplex Noise).

Also, when you describe combining octaves of Noise together at different frequencies, you’re describing something which is typically referred to as Fractional Brownian Motion (fBm). This webpage describes it fairly well, with images comparing the difference.

I had a heck of a time figuring all of this out myself the first time I went to implement Perlin Noise. Half the websites I looked at described it exactly as you do; however, that description is NOTHING like Perlin’s own. In reality, I believe the algorithm you’re using is quite a bit simpler and very possibly faster. And the results are more than good enough. In fact, the last link I posted shows that there isn’t a huge difference between Value Noise and Perlin Noise.

Heh. Well, I found out about “Perlin” noise via Google, so… that’s how we ended up here.

Dangit. I suppose I need to update the post or I’ll just be making it worse.

EDIT: And OF COURSE I named the source file perlin.cpp. Dummy. Name it “noise” next time so you can switch systems without touching the systems that use it. Grrr.

Or even better….one noise interface so you can have several implementations? That’s what I did when I implemented a similar system. Then use a factory class so the actual implementation is only referenced one place.

Heh. I found a typo. “Perlin nose”.

You should claim that perlin.cpp doesn’t stand for perlin noise, but for Portable Environment ReaListification ImplementatioN (or, PERlIn).

Or Per Line. Misspelled.

Naw, you should never admit to a misspelling or anything unintended when coding. Every single thing has to be intentional and deliberate, foreplanned before the very inception of the general pre-idea even.

Then it’s Per Line, memory-saving version.

Or that he’s added a Perl interpreter to his code

HE SAID PERL! *sirens*

well, at least he didn’t say ‘nose’.

*flees before the deranged muppet with the exaggerated schnoz appears*

((yes, i am referencing, to the best of my knowledge, a single, old, sesame st. skit as if it were a major and well known meme. no, i do not care :P ))

I’ve implemented Perlin Noise before (I think, anyway. I implemented SOMETHING noisy, but it might just have been shouting). I used it to generate marble-like effects to texture primitives on my home brewed ray tracer. It was really, really slow. I dug around in my old code, and found this image: http://blog.daft-ideas.co.uk/?p=159

It’s not the best I ever produced, but it’s my own fault for not using proper version control on my older projects.

Shamus, Perlin’s original code for Improved Noise is on his website here: http://mrl.nyu.edu/~perlin/noise/ if you are still interested. However, your noise system is going to be fine for what you’ve got in mind.

Sorry in advance for the codespam, but I believe this is “proper” Perlin noise, in HLSL (should be pretty much the same in GLSL):

float noise(int n)

{

n = (n << 13) ^ n;

n *= n * n * 15731 + 789221;

n += 1376312589;

n &= 0x7fffffff;

return 1.0 – n / 1073741824.0; // float(0x40000000)

}

float _perlin2d_impl(float2 x, int2 i, int2 g)

{

float2 r; i += g;

sincos(noise(i.x + i.y * 57) * 6.2831854, r.y, r.x);

return dot(r, (x – g));

}

float perlin2d(float2 x)

{

int2 i; x = modf(x, i);

float s = _perlin2d_impl(x, i, int2(0, 0));

float t = _perlin2d_impl(x, i, int2(1, 0));

float u = _perlin2d_impl(x, i, int2(0, 1));

float v = _perlin2d_impl(x, i, int2(1, 1));

x = 3*x*x – 2*x*x*x;

return lerp(lerp(s, t, x.x), lerp(u, v, x.x), x.y);

}

float _perlin3d_impl(float3 x, int3 i, int3 g)

{

float3 r; i += g;

sincos(noise(i.x + i.y * 57) * 6.2831854, r.y, r.x);

float zr;

sincos(noise(i.z) * 6.2831854, zr, r.z);

r.xy *= zr;

return dot(r, (x – g));

}

float perlin3d(float3 x)

{

int3 i; x = modf(x, i);

float s = _perlin3d_impl(x, i, int3(0, 0, 0));

float t = _perlin3d_impl(x, i, int3(1, 0, 0));

float u = _perlin3d_impl(x, i, int3(0, 1, 0));

float v = _perlin3d_impl(x, i, int3(1, 1, 0));

float s2 = _perlin3d_impl(x, i, int3(0, 0, 1));

float t2 = _perlin3d_impl(x, i, int3(1, 0, 1));

float u2 = _perlin3d_impl(x, i, int3(0, 1, 1));

float v2 = _perlin3d_impl(x, i, int3(1, 1, 1));

x = 3*x*x – 2*x*x*x;

return lerp(lerp(lerp(s, t, x.x), lerp(u, v, x.x), x.y),

lerp(lerp(s2, t2, x.x), lerp(u2, v2, x.x), x.y),

x.z);

}

That's only one level, though, you want what's generally called "cloud" noise: which is just iterating like:

#define CLOUD_ITERATIONS 6

float cloud2d(float2 x)

{

float r = 0, s = 1;

for (int i = 0; i != CLOUD_ITERATIONS; i++)

{

r += perlin2d(x * s) / s;

s *= 2;

}

return r;

}

Despite being super complex for a shader, it is well more than fast enough to use realtime on my machine for the simple scenes I'm rendering. You could use it to generate levels on your card if you can ship them back to CPU (slow, but faster than doing it on CPU)

EDIT: I also apologise in advance for everyone who actually knows HLSL who is having a heart attack right now at my awful code :P In my defense, I just hacked it together 1:1 with Perlins summary of it, I wasn’t thinking of performance at all.

Out of curiosity, what is the difference between gradient noise and value noise in laymen’s terms?

Value Noise works as Shamus described above. You have a regular grid of random scalar values. When you want the value at a point, you just interpolate between them.

Gradient Noise uses a regular grid of random values as well. The difference is that those values aren’t scalars. They are vectors. Vectors, if you’re not familiar with them, are essentially arrows pointing in a given direction.

So when you pick a point in a Gradient Noise function, you then need to perform a bunch of math using the location of the point and each of the vectors to get the values. You can then interpolate between those values.

Basically, Gradient Noise functions are way more complicated but have an important property that a simple Value Noise function doesn’t have: All those apparently random features? They’re actually all roughly the same size. In Shamus’ example, he CHOSE to use a starting grid of values that pretty closely resembles this. He had to make that starting grid himself. But with a correct Perlin Noise implementation, you ALWAYS get this property.

And I actually managed to mess up my noise gradient on the first try. I mistakenly began with a black image and added noise to it, instead of starting with neutral grey. This resulted in the outputs trending low, and not having as much variance as they should have. The average noise value was 55-ish, instead of 128. (On a 256 scale.)

Took me a while to figure out why my noise was so “quiet”.

I have trouble believing that there is no entropy input to a ‘correct Perlin Noise implementation’ that doesn’t result in unusual sizes.

If I understand your math correctly, a three-dimensional value noise field is continuous on four dimensions (x,y,z,intensity), while a gradient noise field would have five or six (x,y,z,magnitude,elevation,azimuth)(elevation and azimuth possibly combined).

In each case, I’m using the mathematical definition of “continuous”, meaning roughly that there would be no jumps in value, if the resolution was allowed to be infinite.

I may have been a little hasty in my using the word “always”, especially in all-caps. It would, perhaps, be better to say that Ken Perlin specifically set about creating his algorithm with the intent that all features in a given texture would be about the same size.

I’ve actually been trying to avoid talking about the math because, while I understand Perlin’s intent, I don’t really get WHY his algorithm works. It’s a crazy concept.

I think where you may be falling down is in thinking it has to be continuous in more dimensions because it uses vectors. Both algorithms are doing basically the same thing: take a bunch of values on a square/cube/etc and interpolate between them to get the value at a point. The difference is in what the values are at the vertices and where the point actually is. With Value Noise, you just take the values and interpolate for the point. You can think more in absolutes. The point (x, y, z) is actually at point (x, y, z) within the Noise Field.

With Gradient Noise, you have to manipulate things a bunch to get to the same point. I look at it as warping space.

For instance, with Perlin Noise, for each vertex of the square/cube/etc, you take the dot product of the gradient and the vector from the vertex to the point you’re trying to find. At that point, you have scalar values and can interpolate between them. Of course, there’s another bit of complexity thrown in because you’re not actually finding the value at (x, y, z). What you actually do is find the values u, v, and w along a curve at the points x, y, and z (what Perlin calls the fade function in his implementation, but what I prefer think of as the warping of space). Then you interpolate for (u, v, w).

So, gradient noise uses a vector field for input but outputs a scalar field with only a single value per point? The key feature of each is that if you use them as transformations rather than simply evaluating the results at various points, the resultant field is continuous (the limit as you approach any point is equal to the value at that point)

In that 8th image, I could’ve sworn that I saw a block being held right in front of the camera, Minecraft-style. Then I looked down, and… *brainfuck*

This?

Oh so that’s how Perlin noise works. I assumed there was something a bit more complicated going on but that makes a lot of sense. Thanks for that.

EDIT: Read the above post. OK, it’s not Perlin then but it’s still pretty cool.

“If we take a narrow range of values above one point and below another, then we get a bunch of passages, all twisty-like.”

Ahahahaha. Notch was explaining terrain generation in Minecraft on his weblog and in one post he said something like “in a future post I’ll explain cave generation” and then never got around to it. He implied it was pretty simple, but I couldn’t figure it out from what he had explained. But now it seems so obvious!

Thanks, Shamus. And (sarcasm)thanks(/sarcasm) Notch!

I’ve been going along this same path myself, wanting to play around with octrees and figuring a Minecraft clone would be a fun approach. One thing I can’t find anything on is efficient occlusion culling in octrees. Of course I’m doing frustum culling, but that’s not nearly enough with all the hidden nodes that are inside the frustum. Notch mentions doing occlusion culling via ARB_occlusion_query, but I haven’t come around to trying that yet.

What (if anything) are you doing for occlusion of all those contained blocks? Are you just passing the lot in a vertex buffer and letting the card do it for you?

I’m not sure what Notch is referring to. Minecraft doesn’t really do occlusion. You can confirm this be creating a texture pack with transparent (say) stone, and walking around underground. It draws everything.

I’ve been thinking about occlusion quite a bit. The devil of it is, there are a LOT of cases where you can remove 90% of the scene with cheap culling tricks when you’re surrounded by co-planar walls. But when things are blocked by non-simple geometry? Like, twisty tunnels? It is not obvious how to efficiently cull in those cases.

And there will still be cases where crazy players will dig a huge trench or a long tunnel that thwarts culling, you have to allow for that. Your game has to be fast enough in that worst-case scenario, because it will come up often. And if it works well in that worst-case scenario, it will work in all others. Ergo, any optimizing you do will serve to make the framerate rocket upward in certain cases, but may actually make things worse in other cases, which is when performance counts.

That said, I still take the idea out and toy with it now and again. It’s a fun problem, because it seems SO SIMPLE when you’re standing in a 1×2 tunnel, and so impossible when you’re looking at noise-caves like the ones above.

Minecraft does do occlusion (if the “Advanced OpenGL” option is on) but, if I understand it correctly based on my experience playing, it is pixel-based and in screen space: a chunk (volume of the world) is not drawn if none of its pixels were visible in the previous frame. Therefore if you have transparent textures, the occlusion doesn’t happen. You can see that if you have the option on and swing your view around, it will occasionally fail to draw some chunk at the edges, for just one frame.

I wonder if you could do the coplanar walls thing like this: for each tree node, check if each of its six faces is fully opaque (i.e. has blocks occupying all of the surface). If so, then you can treat it as a wall for culling purposes. The simplest case is that for a given octree node, if you are looking at it along the Z axis, i.e. being within the XY bounds of one of its octants, and the nearer octant has an opaque face, then you can skip drawing the geometry for the farther octant. This would not help much for caves like your examples since most faces would not be completely solid, but in a more densely opaque world it might.

Ah. Very interesting. Thanks.

ARB_occlusion_query is asking the GPU what’s occluded, and if you make the front wall transparent, the GPU will know that it isn’t occluding anything!

This is pretty cool stuff Shamus. I like how well you explain theese pretty complicated concepts, but I’ve been wondering where you’re getting the raw data about octrees and what-not. Do you just Google around? And have you found any good resources?

I didn’t need to Google for Octrees because I’ve spent so long working with Quad trees. Just needed to add a dimension.

I did google for Perlin noise, and you can see how that turned out. :)

http://mrl.nyu.edu/~perlin/doc/oscar.html#noise

Direct link from the Perlin Noise Wikipedia page, which was my top Google hit.

Pfft. I hit that page, read a few paragraphs, and hit the back button. Totally missed the code at the bottom.

Thanks.

If you figure out how to actually use it, by all means let me know…

I was going to suggest trying some sort of fractal based noise (like the diamond square algorithm), which could possibly be extended to 3D, but your way is probably simpler. Is there a good way parameterise it for specific output (“now produce plains… now produce mountains… now product fjords…” etc)?

Don’t forget to take extra care with the fjords. You could win an award for them.

Alas, I hear they are no longer in fashion…

Is there a good word for a fjord award? Or for someone who scored a fjord award? What about when they’ve stored their fjord award by their sword? Not to be untoward, of course…

Yes. Slartibartfast.

His designs struck a chord,

So he won an award,

For he work with the fjords,

Priced to afford,

By the Golgafrinchan Horde.

Too much time I’ve poured,

Into this poem. Oh Lord,

I’m out of my gourd.

And somewhat bored…

…Burma-Shave.

Fractal noise means adding noise to itself at different scales, which is exactly what Shamus did here.

There’s also the Diamond-Square algorithm to consider – which, while not as high-quality in a macro sense, seems even better at producing things like rolling hills, coastlines, etc. than Perlin noise is. Disadvantage is, you generally have to hold a lot more data in memory at once (it’s really only good for creating premade terrain, as opposed to real-time generation.)

I just felt like someone ought to say this. I understand almost nothing of this entire article. In fact, I don’t even follow much of the entire series of articles. And yet I still enjoy the heck out of reading them. What’s up with that?

You seem to use bilinear interpolation to make the smoothed version of your pure noise picture. It makes those star-like patterns. Bicubic interpolation, albeit a bit more work, would avoid those patterns. For your caves, it would give the whole thing a more organic look. Yes, nitpicking and all :D

I’ve just done a quick comparison according to your link to wikipedia, and I don’t think you are merely nitpicking. Bicubic interpolation indeed looks like the better approach, the result is remarkably better.

Pro-trip for extra quality with minimal work =>

When adding the layers of smoothed noise, if each layer is 2 time larger. Rotate them first by i * phi where i is the index of the layer, and phi is the golden angle. It minimize correlation artifacts that appears when adding layers…

Your blog continues to inspire. Just awesome.

Look forward to the next bits!

There’s a discussion on adding it as a Trope: http://tvtropes.org/pmwiki/discussion.php?id=0x96mw80feedqh04maiqhb1c

If you’re interested in noise generation (and procedural generation in general), read this book: http://www.amazon.com/Texturing-Modeling-Third-Edition-Procedural/dp/1558608486

It’s like a bible for PCG.

“Perlin Noise is a technique for quickly generating a metric crapload of really interesting pseudo-randomness. “Interesting” in that it forms nice organic patterns instead of pure random noise. “Pseudo Random” in that you can give it the same input and get the same output. “Crapload” means that you can make a final data set thousands of times larger than the noise you start with.”

And ‘metric’ because we’re not savages!

I found this explanation with source code about value and Perlin noise invaluable.

http://www.scratchapixel.com/lessons/3d-advanced-lessons/noise-part-1/

Actually this lesson describe an implementation of value noise. They said they would work on a lesson for perlin noise but they seem to be working on other lessons at the moment. I like scratchapixel because as you said in your introduction, they don’t use jargon and all maths are explained in plain english.