In a recent post I mentioned that I leave my PC on all the time. In response to that, Dreadjaws said:

I confess to being entirely ignorant about this subject. Some people swear to me that there’s no benefit in shutting down the PC every day, while others say there is. At work the machines are turned on 24/7, but at home I tend to shut my PC down when going to bed. To be fair, the primary reason I do this is that the PC is in my bedroom and the bright lights and occasional fan noise annoy me when I try to go to sleep, but I would really like to know what’s the deal.

Doesn’t it cost more to keep the PC on all the time? Or is the act of booting it somehow more costly? What are the real benefits for each?

I’ve been wondering this for years.

Back in the 80s, it was objectively better to shut down for the night. The PC couldn’t shut down those energy-sucking CRT screens, which were a big part of the energy drain. Certain systems might be smart enough to blank the screen, but that just means the monitor was working very hard to display nothing. If you looked at a supposedly “black” screen in a dark room you could tell it was still on. That electron gun was still firing, still being guided by rapidly-shifting magnetic fields. The hard drives kept spinning[1], the CPU kept twiddling its thumbs, and the machine used nearly the same amount of power as when it was in regular use.

Over the decades we’ve replaced the wasteful CRT with LCD panels. We’ve added sleep and hibernate modes to devices so they can enter a low-usage state. On top of that, a lot of components need less power overall. At some point it’s possible we crossed a threshold to where it’s better to just leave the machine on.

Machines burn a lot of power when booting up and shutting down. Rumor has it that shutting down apps on your phone can make your battery usage worse because the per-app startup and shutdown is more expensive than the idle. It’s not unreasonable to think that perhaps the same might be true on a desktop machine where loading a program involves spinning a physical disk at 7,200 RPM and moving the physical head around faster than the human eye can see.

I’m not sure why it takes Windows fifteen seconds to shut down, but it does seem to get the machinery spinning. Likewise, starting up is a big deal that puts more stress on the machine than you see when the machine is in regular use. And don’t forget to include the cost of starting all those programs again. If it takes another minute of spinning drives to open up Adobe BloatWare 7, Microsoft MemHog 2016, and your 15-tab web browser session before you can begin work, then all of that is also part of the overhead of shutting down. It’s entirely possible that a full cycle shutdown at night and startup in the morning will burn more power than if you just let the device sleep overnight.

I suppose the numbers might depend on usage time. I begin using my computer the moment I wake up and I keep using it until I go to bed. I do this every day. Even in best-case conditions where I get a full 8 hours of sleep in a row, we’re comparing a shutdown + boot cycle against 8 hours of sleep mode. On the other hand, maybe you only use your machine for work. It only needs to be on from 9 to 5 on weekdays. Maybe the longer intervals of downtime tip the scales and make it more efficient to turn the machine off.

But before we get too excited about measuring electricity usage, let’s not forget the ravages of entropy. Those shutdown + boot cycles get the physical parts moving around, which increases wear and tear on the hardware. Cars have a similar trade-off. Idling when you’re not using the car is a waste of fuel, but starting an engine is really hard on it. People come up with these vague rules like, “If you’re going to drive again in less than five minutes, then it’s better to leave the engine running.” Of course, that “five minutes” figure is an arbitrary round number and the true “break even point” for shutdown probably depends a lot on how big the engine is, how old it is, and how sophisticated replacement parts are. How much energy goes into a replacement starter motor? The energy required to replace a modern starter is going to be astronomical compared to the gas burned while sitting idle for a minute or two. Certainly it would be bad to turn off the engine for ten seconds, and leaving it running for an hour would be a huge waste, but good luck finding the crossover point.

So What’s Best?

On one hand, maybe you should leave the machine on rather than pushing the device through a daily power cycle that wears out the equipment more quickly.

On the other hand, most hard drives outlive their usefulness. A majority of drives end up in the trash not because they failed, but because they’re obsolete. If a drive is likely to operate for 12 years but it’ll be obsolete in 5, then the extra wear and tear doesn’t matter. Even if the extra boot cycles cut its life expectancy in half, it’ll still last long enough to be retired before it dies.

On the other, other hand, we’ve hit a plateau in terms of hardware and our machines are turning over more slowly than ever before. My current computer turned 5 this year and it’s still running modern games like a champ[2]. The point is that we’re no longer throwing these things away every two years. You may indeed still be using that new hard drive in a decade, and maybe that extra wear and tear matters.

On the other, other, other hand, waiting for a boot-up each morning is a waste of your time. A desktop PC burns anywhere from 75W to 200W, depending on what you’re doing with it. In sleep mode, the power usage goes down to ~5W. Maybe sleep is more efficient than off, or maybe off is better, but either way we’re only talking about a couple of watts[3]. Sure, maybe you’re willing to sacrifice a couple of minutes of your day to save some tiny handful of watt-hours. But if that’s the case, then save up those minutes until the end of the week and spend them washing clothes by hand or hanging your laundry up to dry instead of using a machine. You’ll save about a hundred times more than the power savings you’ll get from optimizing your PC power policy.

On the other, other, other, OTHER hand… who cares? It’s easy for us nerds to get distracted by trivial problems and waste time optimizing things that don’t matter. The fact that there’s no clear answer between shutting down and leaving on means the overall difference is probably very small. All of this is a drop in the bucket compared to the really big stuff. If you’re worried your on / off policy might be wasting some absurdly tiny bit of power along the way, just turn off your air conditioning for one day in the middle of summer. Sure, it’ll be miserable around the house that day, but you’ll save enough power to offset your slightly wasteful PC usage for several years.

On the othermost hand… I’m still curious where the crossover point might be. Is it worth shutting down for eight hours? Four hours? One hour? I don’t think the answer will alter my behavior, but I’d really like to know anyway.

Footnotes:

[1] How many people remember the days when you had to park a hard drive?

[2] My graphics card is getting a bit long in the tooth, but I can’t afford a new one in this market.

[3] Your PC still draws about 1W even when it’s off, so it can power itself on when you push the button.

The Best of 2013

My picks for what was important, awesome, or worth talking about in 2013.

Pixel City Dev Blog

An attempt to make a good looking cityscape with nothing but simple tricks and a few rectangles of light.

This is Why We Can’t Have Short Criticism

Here's how this site grew from short essays to novel-length quasi-analytical retrospectives.

The Truth About Piracy

What are publishers doing to fight piracy and why is it all wrong?

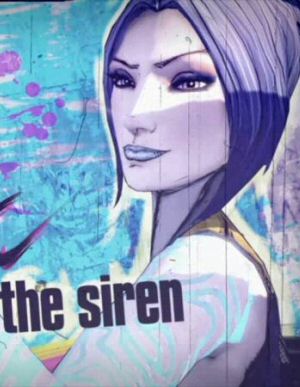

Borderlands Series

A look at the main Borderlands games. What works, what doesn't, and where the series can go from here.

Twenty Sided


One thing to keep in mind is how your electric grid is set up. Many power plants take an extended time to start, so they’re left running constantly. But power can’t be stored to any real degree, so if nothing uses power it gets wasted. Therefore, a small increase in draw when a lot of people are using power (say, at 8 am as every office turns on the lights and coffee machines) matters more than a larger increase in usage when no one is using power (2 am). This is why “Free Nights” deals are so common: the power is actually free.

Of course, the real answer is that personal choices have always been a distraction from the large-scale social and political movements necessary to truly reduce energy consumption. If you actually want to save power, call your city councilman and yell at him to add more buses to their routes.

“But power can’t be stored to any real degree”

Interestingly, Elon Musk built a giant battery in Australia (with his own two hands!) that connects to the power grid and can respond to rapid changes in demand almost instantly. It can’t power the grid for very long, but it’s more than enough time for the power stations to catch up. However, the part I worry about is how ludicrously expensive lithium is at that scale, and how much maintenance will cost.

Have a quick read if you like.

Yeah, that was written at 4 AM and I didn’t want to get into the details of where they are now, but there have been recent advancements in batteries that have me hopeful. Personally, I like the British solution of pumping water uphill when they have a surplus and then letting it run downhill to spin a hydro generator when they have sudden excessive draw. Granted, their needs are fairly unique, but it’s a relatively cheap solution to a nightmarish technical problem.

That’s not just a British solution; it’s used all over Europe (except maybe in the Netherlands, for lack of hills, and in Norway, because the majority of Norwegian electricity is generated by hydroelectric plants (lots of mountains, lots of rain, and glaciers, too), so they just shut off the turbines when demand is low).

…and I’d be surprised, actually, if it wasn’t used in at least some parts of the US, too.

It is used in the US too.

And Japan has an extremely well developed system of that sort.

Thank you for the interesting article. Not a topic which attracts much attention outside specialist forums.

Please note that most modern chipsets and systems would use way less than the 75 W during normal desktop use. For example, I’m running a ROG Strix B350-F with a 2700X and a GTX 1060, allowing both to undervolt when not in use.

So sitting at a web browser typing this comment, my wattmeter is currently (lol) reporting ~32 W. Keep in mind this same system draws several times that at load. Also, I have to use third-party tools to undervolt the GPU; otherwise the stock Nvidia drivers keep the GPU at 100% power if I have more than one monitor.

This is a far cry from the days when core parking was punishing on performance; modern chipsets are way more aggressive with power saving than even the previous generation.

Don’t powerful gaming PCs still use a lot of power when crunching all the polygon-numbers? I’m pretty sure I’ve got a couple gamer co-workers whose power supplies are around 600W – 1000W.

Most power supplies these days are oversized by a good deal, just to be safe, and to get a PC to maximum power intake, you need to load all processing units in the CPU (all cores, all floating point units, all integer units, all SIMD units…), while reading/writing to RAM, while the GPU is pulling all registers, while all drives are reading/writing like champs and so on and so forth … not something you’re likely to encounter often, if at all, because even in really taxing situations, most components will be waiting for whatever the current bottleneck is.

The peak power usage of a gaming PC is indeed higher than that of a non-gaming PC (e.g. one that doesn’t even have a dedicated video card, just uses on-board graphics), but power usage in low-load scenarios (web browsing, idleness) is basically the same, because the CPU and GPU will just dynamically adjust.

Also something to consider here is that while video cards get more powerful each generation, their energy demands either stay the same or even go down from generation to generation.

For example, comparing the official specs, a GTX 780 is specified for a power usage of up to 250W at full load, while a GTX 1080 uses up to 180W at full load. Which also means it needs a less powerful and thus less noisy cooler (in fact my 1080 is the least noisy card I’ve had in a decade).

Another point to consider is when you are playing a game that doesn’t tax the system to the limit, where a weaker card running at full load may consume more power than a stronger card running at just a fraction of its full load. Plus, I am sure there is less wear on the stronger card in that case as well.

Something you barely mentioned there, too, is the use of a watt meter. They’re not very expensive and if you want to know how much your computer (or your fridge!) is using, just plug it into the wall and plug the device into it. My computer uses about 200-250W running Prime95 and OCCT’s GPU stress test at the same time; it uses about 60W (IIRC) if it’s just sitting at the Windows desktop. Doing day-to-day stuff that isn’t gaming it usually doesn’t go over 100W.

I think it uses around 2W when asleep, so I almost never turn it off (even though it’s in the bedroom and I can see the lights blinking; I’ve just gotten used to it and it doesn’t cause me any trouble getting to sleep.)

These days you can get cumulative plug-in energy meters pretty cheap, so you really could leave the thing plugged in to the meter overnight and compare the watt hours it measures against those when you shut down and start up.

I’m a little frustrated at the number of questions you raised without answering them. I, too, want to know, but now you’ve got me wondering with no more answers than when I started. Curses!

Also, I try to always shut down at the end of the day, even though my boot time exceeds 3 mins (I’m really due for a format).

It just seems like shutting down is part of doing the job RIGHT, you know?

Maybe it’s a holdover from my first laptop and its pitiful battery life.

A quick calculation will give a ball-park estimate. Let’s assume Shamus’ 5W for low-power mode is over-generous, and is 25W instead.[1] This puts our savings at 24W * 8 hours of power-off * 7 days per week. That works out to about 1350Wh saved per week.

Now consider a small, energy-efficient vehicle, the Smart Fortwo, which has an 89 horsepower engine. According to the conversion charts I found, that works out to about 66000W. Let’s assume that the person driving this car also lives relatively close to work, and only drives five minutes per day, five days per week. That works out to about 27000Wh per week.

Upshot: this is probably a best-case scenario, and the vehicle is 20 times worse! If you’re worried about the carbon[3], then bike, carpool, or take the bus to work. If you’re worried about the precise amount of pennies saved or lost, I’ll leave it as an exercise for the reader, to extend these calculations.[2] :D

[1] Don’t worry, even with this generous extra savings, I’ll show that it’s not worth worrying about.

[2] Hint: You’re probably still better off with an alternative transportation method.

[3] No further discussion needed; this is probably close enough to the no-politics rule already.
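The ball-park comparison above can be rechecked in a few lines of Python. This is a sketch that reuses that comment’s own assumptions (25 W asleep, 1 W off, 8 hours a night every night, an 89 hp engine for five minutes a day, five days a week); the only outside number is the standard 745.7 W per mechanical horsepower, and the function names are just for illustration. The car figure assumes full rated power the whole time, which the replies below point out is a big overestimate, so the `load` parameter lets you scale it down.

```python
# Weekly energy: PC switched off overnight vs. a short car commute.
# All inputs are the assumptions from the comment above.
HP_TO_W = 745.7  # watts per mechanical horsepower


def pc_weekly_savings_wh(sleep_w=25.0, off_w=1.0, hours_off=8.0, days=7):
    """Watt-hours saved per week by switching the PC off instead of sleeping it."""
    return (sleep_w - off_w) * hours_off * days


def car_weekly_energy_wh(horsepower=89.0, minutes_per_day=5.0, days=5, load=1.0):
    """Watt-hours per week of driving; load=1.0 means full rated power the whole time."""
    return horsepower * HP_TO_W * load * (minutes_per_day / 60.0) * days


pc = pc_weekly_savings_wh()
car = car_weekly_energy_wh()
print(f"PC savings:  {pc:,.0f} Wh/week")
print(f"Car commute: {car:,.0f} Wh/week")
print(f"Ratio:       {car / pc:.1f}x")
```

With these defaults the ratio comes out a little over 20, matching the comment. Passing `load=0.1` (the “maybe a tenth” guess from a later reply) shrinks it to roughly 2x.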

1: That’s a 5-minute acceleration race to work? My (Diesel) car has 90hp, but I worked out that during my commutes, I use less than 10% of that on average. An electric car could be even better since it will recuperate some part of the car’s kinetic energy rather than turning it into heat, like conventional brakes do. Also, my car is significantly larger than a Smart fortwo. But really, comparing sleeping PCs to electric cars is not the best comparison if you ask me.

2: In Britain, 1.35kWh costs about 30 US cents. In Germany it’s 46 cents.

“You do not have permission to edit this comment”? I wasn’t even over the time limit!

2: In Britain, 1.35kWh costs about 30 US cents. In Germany it’s 46 cents, which works out to $25 per year. Not an incredible amount of money, but if you think about TVs, routers, switches, radios, and (potentially several) PCs never going below standby, that can add up.

According to eVie a few posts down, 1 kWh costs 12 US cents in the US, so you’d end up with just $8.76 per year. A lot less, but I’d argue that no number is too small for a real nerd to argue over :)
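Here is a small sketch of where these yearly figures come from. It assumes the 25 W sleep figure from the earlier estimate (treating “off” as saving all 25 W), 8 hours a night, and 365 nights a year, which reproduces the $8.76 US number exactly. The British and German per-kWh prices are derived from the weekly costs quoted above. None of the prices are verified here; they are just the numbers from this thread.

```python
# Yearly cost of sleeping a PC overnight instead of switching it off.
# Assumptions: 25 W saved, 8 hours per night, every night of the year.

def yearly_kwh(watts_saved=25.0, hours_per_night=8.0, nights=365):
    """Energy (kWh) per year that switching off would save."""
    return watts_saved * hours_per_night * nights / 1000.0


# USD per kWh as quoted in this thread. Britain and Germany are derived from
# "1.35 kWh costs about 30 / 46 US cents".
PRICES = {
    "US": 0.12,
    "Britain": 0.30 / 1.35,
    "Germany": 0.46 / 1.35,
}

kwh = yearly_kwh()
for place, usd_per_kwh in PRICES.items():
    print(f"{place:8s} ${kwh * usd_per_kwh:6.2f} per year")
```

Using 24 W (25 W asleep minus the ~1 W a PC draws while off) instead would trim each figure by about 4%.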

(You could also argue that that’s a bit cheap, which would explain why the US puts so much less emphasis on energy-efficient stuff than other places)

I forgot that figure is maximum available power for the vehicle, not what’s used during constant speed, or average for a commute. I can’t seem to find any figures for that, however, so I’ll have to ball-park it at maybe…a tenth? That’s what it feels like when I’m driving my vehicle to work, vs maxed-out acceleration. Although I suppose I could use money spent on gasoline, vs money spent on electricity. Where I live in Canada, electricity is $0.11 per kWh; that’d work out to about $0.15 per week on electricity saved. My truck (larger than a Smart-car, but smaller than others) uses about $60 every month or two, and I basically only drive to and from work (an 8-minute commute each way – crap, I forgot to double the commute time in my example, too…). So that works out to at least $8 per week. Still a lot more than the computer, and I imagine a small car would be about $2-$4 per week, given what my friends use for gas. So I’m still not totally wrong! :)

No time to look up which car it was, but I remember an efficient electric car using about 13kWh/100km (that’s 62 miles for anyone still stuck on imperial units…)

So if you have a 10 km commute (6.2 miles), you’d use 1.3kWh one way, which happens to be about the same as you save in E.T.’s example from turning the computer off overnight for a week.
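A quick sketch of that commute arithmetic, assuming the roughly 13 kWh/100 km consumption recalled above (the commenter’s memory, not a verified spec) and a 10 km trip. The only other number is the exact definition of a mile, 1.609344 km. Note that a daily round trip doubles the one-way figure, so it comes to about twice the ~1.35 kWh per week estimated for the PC.

```python
# Energy per trip for an electric car at a given consumption.
KM_PER_MILE = 1.609344  # exact by definition


def trip_kwh(distance_km, kwh_per_100km=13.0):
    """Energy used for a trip of distance_km at kwh_per_100km."""
    return distance_km * kwh_per_100km / 100.0


one_way = trip_kwh(10.0)
print(f"10 km ({10.0 / KM_PER_MILE:.1f} mi) one way: {one_way:.2f} kWh")
print(f"Round trip: {2 * one_way:.2f} kWh")
```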

==> not a lot, but not nothing either. And I’d still argue that there’s no benefit in terms of wear on the hardware, with the potential exception of hard drives maybe, but additional wear would still be tiny compared to regular operations.

My car (when I drive it, efficiency-obsessed as I am) uses 3.9 l/100km (which works out to 60 miles per US gallon), which means $0.64 for a 10km commute (I suppose that’d be way cheaper in Canada…), although over such short distances the engine would just barely reach operating temperature and be less efficient… really, in that case, I’d much rather cycle. Anyway, this is actually the moment where I realize that energy costs for electric cars are now lower than fuel costs for combustion engines, even at household energy prices! (at least in Western/Central Europe)
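The litres-per-100-km to miles-per-gallon conversion is easy to get backwards, so here is a sketch of it using the exact definitions of the US gallon (3.785411784 L) and the mile (1.609344 km). The fuel price isn’t stated in the comment; the $0.64 figure implies roughly $1.64 per litre, but treat that as an inference rather than a quoted price.

```python
# Convert fuel consumption between metric and US units, and size a trip.
LITRES_PER_US_GALLON = 3.785411784  # exact by definition
KM_PER_MILE = 1.609344              # exact by definition


def l_per_100km_to_us_mpg(l_per_100km):
    """Litres per 100 km -> miles per US gallon (the two are inversely related)."""
    km_per_litre = 100.0 / l_per_100km
    return km_per_litre * LITRES_PER_US_GALLON / KM_PER_MILE


def trip_litres(distance_km, l_per_100km):
    """Fuel burned over distance_km at a constant consumption."""
    return distance_km * l_per_100km / 100.0


print(f"{l_per_100km_to_us_mpg(3.9):.1f} mpg")
print(f"{trip_litres(10.0, 3.9):.2f} L for 10 km")
```

The handy shortcut hiding in there is mpg ≈ 235.2 / (l/100km).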

Hard drives will spin down anyway after a while of inactivity, so leaving the pc on at night doesn’t really do them any favours.

Although I think you can mess with the S.M.A.R.T. settings to keep them spinning indefinitely.

Shamus, watts are already a measure of power. If you want to measure STUFF per hour wasted, that STUFF would be Joules (or calories, if you want to get old-school). And if something draws 5 W of power, it draws 5 Joules per second.

It’s just that one Joule is such a minuscule amount of energy that even burning thousands of them isn’t a big deal. In fact, you yourself burn thousands of them every day to stay alive, through a much less efficient method of energy conversion.

So, as a logical conclusion: If you stop living, you save lots of energy.

A more practical unit of energy is the kilowatt-hour, which is the energy consumed by leaving a 1 kilowatt device running for one hour. The price of a kWh of electricity is about $0.12 on average in the USA.

If your computer uses 5 W of power while in sleep mode for 8 hours, then that’s equivalent to using 120 W for 20 minutes (40 Wh either way). So as long as booting your computer takes less than 20 minutes (which seems like a long time) and doesn’t draw an additional 120 W compared to running normally during that time (which again seems highly unlikely), it’s certainly better to just switch it off. You can shift those numbers around (10 minutes at 240 W is the same energy as 20 minutes at 120 W), but again, I don’t think there’s any realistic way in which booting your computer is more energy-intensive than leaving it asleep for 8 hours.
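The same break-even reasoning can be written as a couple of small functions. This is a sketch that assumes the boot draws a constant `extra_boot_w` above normal use. The optional `off_w` accounts for the roughly 1 W a PC draws while “off” (footnote [3] of the post), which the comment leaves out. Rearranging the same equation gives the crossover Shamus asked about: how many hours asleep it takes before switching off wins, for a given boot overhead. The 10 Wh overhead in the last line is a made-up illustrative number, not a measurement.

```python
# Sleep-vs-off break-even, following the comment's reasoning.
# Assumption: booting draws a constant extra_boot_w above normal use.

def breakeven_minutes(sleep_w, hours_asleep, extra_boot_w, off_w=0.0):
    """Longest boot (in minutes) at extra_boot_w that still beats sleeping."""
    saved_wh = (sleep_w - off_w) * hours_asleep
    return saved_wh * 60.0 / extra_boot_w


def crossover_hours(boot_extra_wh, sleep_w, off_w=0.0):
    """Hours asleep beyond which switching off (and paying boot_extra_wh) saves energy."""
    return boot_extra_wh / (sleep_w - off_w)


print(breakeven_minutes(5.0, 8.0, 120.0))             # the comment's 5 W / 120 W case
print(breakeven_minutes(5.0, 8.0, 240.0))             # same energy at double the draw
print(breakeven_minutes(5.0, 8.0, 120.0, off_w=1.0))  # counting ~1 W drawn while "off"
print(crossover_hours(10.0, 5.0, off_w=1.0))          # hypothetical 10 Wh boot overhead
```

These print 20.0, 10.0, 16.0 and 2.5. A real answer would need a measured boot overhead from a plug-in energy meter, as suggested elsewhere in the thread.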

Wear and tear on components is not, to my knowledge, particularly impacted by the process of starting up. If your computer is going to break from being booted up, then it’s going to break from heavy use first.

Certainly — in the summer. In the heating season, a wasteful power expense is just a funny looking heater. All winter long, incandescent bulbs and sleeping CPUs just offset the work of the furnace.

Is the furnace more efficient? Of course! But it means that instead of costing 12/200 ¢ to leave the computer on for an hour, it costs that less the cost to operate the furnace for a few microseconds.
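The “12/200 ¢” figure is a 5 W sleeping PC at 12 ¢/kWh: 0.005 kWh per hour × 12 ¢ = 0.06 ¢. The sketch below shows that arithmetic plus the heating-season offset. It assumes every watt the PC draws ends up as heat inside the house. The `furnace_cost_ratio` parameter is an illustrative simplification that ignores furnace efficiency: it is the furnace’s cost per kWh of heat divided by the electricity price, with 0.2 standing in for the “about 1/5” gas price mentioned further down the thread.

```python
# What a sleeping PC costs per hour, and how much a furnace offsets in winter.
# Assumption: all of the PC's power ends up as heat inside the house.

def cents_per_hour(watts, cents_per_kwh=12.0):
    """Electricity cost of a constant load for one hour."""
    return watts / 1000.0 * cents_per_kwh


def net_cents_per_hour(watts, cents_per_kwh=12.0, furnace_cost_ratio=0.0):
    """Cost after subtracting the furnace heat the PC replaces.

    furnace_cost_ratio: furnace cost per kWh of heat / electricity price.
    0.0 when no heating is needed (summer), 1.0 for electric resistance
    heating, ~0.2 for gas at about 1/5 the price (efficiency ignored).
    """
    return cents_per_hour(watts, cents_per_kwh) * (1.0 - furnace_cost_ratio)


print(cents_per_hour(5.0))                              # about 0.06 cents (12/200)
print(net_cents_per_hour(5.0, furnace_cost_ratio=0.2))  # heating with cheaper gas
print(net_cents_per_hour(5.0, furnace_cost_ratio=1.0))  # electric heating: a wash
```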

In a modern, fairly well insulated house (with a heat-recovery ventilation system of some sort), the only heat sources in the apartment I live in are cooking on or in the stove or microwave, the water heater/shower/hot water use, and the computer, which is on all day (although the CPU cores are “idling” at a lower power state/clock). The air coming out of it is fairly warm and heats up the room the computer is in quite well. The light bulbs are all LEDs, so they each probably generate less heat than a candle.

Modern PCs are quite energy efficient. When the PC is idling, the majority of the heat is from the power supply. When working, the CPU makes the most heat, and when gaming, the GPU makes the most heat.

It is only during the coldest parts of the year that an extra mineral oil heater on wheels is used for extra warmth. The rest of the year (roughly 300 days in total), a simple wall-mounted electric heater with a thermostat heats the entire apartment, with the assistance of an all-year-round heater in the bathroom (which is ancient and should probably be replaced; it just turns electricity into heat: set it at 300 W and it’ll pull that and dump it as heat, so I’m not sure there are efficiency gains to be had from a new one).

There are some decorative LED lights on the balcony. They used to be on a timer, but some quick math showed that the outlet timer probably used as much power as it saved, given how little energy the LED lights use, so the timer was removed.

So while a PC may not help to heat up an apartment and certainly not a house, a home office or small (kids?) bedroom can be kept at a comfy temp through most of the year thanks to a medium/large desktop PC.

“Is the furnace more efficient? Of course!”

Not necessarily. Can the furnace do computing?

It would be fun to do actual math on this but I’m just gonna make a blanket statement and say that a desktop PC strikes a nice balance between heat and computing.

Mobile phones generate so little heat (compared to their computing power) that they provide no heat benefits.

If you game a lot or do lots of work during the day and the PC draws, say, 300 W on average, this would actually equal a heater set to 300 W. The power drawn is turned into heat regardless of what device/tech it is.

A properly built PC also has the benefit that it’s designed to get rid of the heat (from the CPU and GPU) and out of the case as quickly as possible, so in a sense it’s very similar to a space heater with a fan in it.

So the wattage slash heat is not really wasted.

Around here, most people heat with natural gas, and it costs about 1/5 of electricity, per kWh — so the benefit of heat coming off an electrical device is pretty small compared to how much heat you could get by burning gas.

We had the central heating fail last winter and had to use an electric heater for almost a week. That left a _very_ visible mark on our utility bill.

FYI, the body of an adult human requires about 8-10 million (!) Joules per day.
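That figure checks out if you assume a typical intake of 2,000 to 2,400 dietary Calories (kcal) a day and the standard 4,184 J per kcal. The sketch below also turns it into a steady power draw for comparison with the PC numbers in this thread. The 5 W sleeping PC is Shamus’s figure.

```python
# Daily energy of a human body vs. a PC, in joules.
J_PER_KCAL = 4184.0             # one dietary Calorie (kcal) in joules
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s


def kcal_to_megajoules(kcal):
    return kcal * J_PER_KCAL / 1e6


def watts_to_megajoules_per_day(watts):
    return watts * SECONDS_PER_DAY / 1e6


print(f"2000 kcal diet:   {kcal_to_megajoules(2000):.1f} MJ/day")
print(f"PC asleep at 5 W: {watts_to_megajoules_per_day(5):.2f} MJ/day")
print(f"Body as a steady load: {2000 * J_PER_KCAL / SECONDS_PER_DAY:.0f} W")
```

In other words, a person runs at roughly 100 W around the clock, which is more than a sleeping PC and in the same range as an idling desktop.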

Small note: watts are already a measurement of energy use per unit time (1 watt = 1 joule per second, or 1 watt-hour per hour), so “watts per hour” would technically be a unit of how quickly power usage is changing – an acceleration rather than a speed.

Uhm, it’s (joules/sec) * hours, which would just be joules (but in a more convenient format), not (joules/sec)/sec (which would indeed be a measure of how fast the rate of energy use is changing).

This is pedantic nitpicking (we all know what you were saying) but watts is a rate. An amount of energy is “watt-hours” (or watt-seconds, or watt-years, or kilowatt-fortnights… you get the idea), which is the amount of energy used in one hour at a rate of one watt. Watts per hour would mean the rate at which power use is accelerating.

Edit: Wow, it seems that all the pedantic nitpickers wake up at 8 AM. Sorry to be one more piled on.

Okay, that’s three corrections in seven minutes. That’s gonna go on all day and drive me crazy.

I’m going to edit the mistake rather than read several of these an hour all day.

Heh, sorry, took me long enough to check my facts that I didn’t realise it had already been said.

Should have known I’d be beaten to it… a unit error on the internet is like some sort of bat-signal, but for nerds.

Oh no! Has gratuitously pointing out every small mistake in your article become the new “First!” of this site?

I’m feeling very conflicted about this now.

To be fair, I pretty much brought it on myself.

I KNEW it felt wrong to type “watts per hour” but rather than looking it up I just plowed forward. That’s a dangerous game on the internet.

Depends, really. For example, while kilowatt is the “standard”, 0.3kW/hr is the same as 300watt/hour.

Also, if pointing out mistakes is the new first, then that is a welcome first IMO as having mistakes corrected makes reading the post/article more enjoyable for the next (new) reader of it.

I think you might have misunderstood us here. This and your other post seem to indicate that you think “kilowatt hour” stands for 1kW/1h, when it really stands for 1kW*1h. You basically state how much energy you used up (1kWh) by saying how fast you drained energy from the system (1000W), and then for how long you did that (1h).

That’s why Lazlo called “watts per hour” an “acceleration”: if you graph total energy spent over some time interval, you will get an increasing (as in: sloping upwards) curve similar to a graph of total distance travelled over some time interval. In the first scenario, the slope of the graph is the power used at that particular time, so 1 watt would be a slope of 1 J/s (or one Wh/h), analogous to how speed is the slope in the second diagram.

And then watts per hour would be the curvature of the first graph (or the slope of the power usage over time graph), just as acceleration is the curvature of the distance over time (or the slope of the speed over time) graph.

Or, TL;DR: watts per hour would be the second derivative of energy with respect to time, just as (m/s)/s is the second derivative of distance. Instead, energy is measured in watt-seconds (1W * 1s) or kilowatt-hours (1000 W*1h), since a watt is already a rate of energy.

https://xkcd.com/386/

^ pretty much

“Watts per hour would mean the rate at which power use is accelerating”

What?

It’s consumption or constant draw, not acceleration. (Actually this is not correct either: kilowatt hours are how many thousands of watts are “consumed” on average during that hour, assuming an hourly or finer measurement granularity. If the granularity is every 2 hours, then the kW/hr is the average over 2 hrs, for example.)

If it was “accelerating” (speeding up) as you call it, then at 300watt this would mean the draw would go from 0 to 300 to 600 to 1200 to … etc.

I’ve never heard of this before; if that is happening, then that is a very odd power-of-two thermal runaway situation.

A quick Googling for “watt” and “acceleration” provided no results, so not sure where you got that from.

I think you mixed up heat released (normally stated in wattage) and power drawn (normally stated in watts) with energy/power consumed and billed (normally stated in kilowatt per hour).

If you have two 1000watt heaters on that each draw 1000w and you leave them on for 1 hour, the power meter should have shown a power consumption of 2kW/hr.

If you have two 500watt heaters on that each draw 500w and you leave them on for 2 hours, the power meter should have shown a power consumption of 2kW/hr.

Now I may be using the “/” wrong here, as it might be stated as kWHr or similar for consumption and kW/Hr for the current drawn (erm, currently). I don’t have a power meter currently to look at and see how it displays the two figures (draw, and total).

Acceleration is the wrong word/term to use. I’m sure there is a correct one, but I’ve got no clue. Delta? Over/Under? Actually “under” would be wrong too, as you don’t have to use 1kWh/Hr; that’s 1 kilowatt hour per hour (aka rate), not to be confused with 1 kilowatt hour. You are allowed to use less power. And I don’t think anyone thinks of it as a rate (other than “rate of cost” shown on their electricity bill, maybe).

If your bill says you used 15000kWHr this year, then you can divide that by 365.25 and divide that result by 24 to get how many kW/Hr (kilowatt per hour) you use on average (1.711kW or 1711watt in this example).
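The division in this comment is right, and the result is an average power. Dividing energy (kWh) by time (hours) gives kilowatts, so in standard SI usage what the comment writes as “kW/Hr” is simply kW. Here is a sketch using the comment’s 15,000 kWh example and a 365.25-day year:

```python
# Average power from an annual energy total: energy (kWh) / time (h) = power (kW).
HOURS_PER_YEAR = 365.25 * 24  # 8,766 h


def average_kw(kwh_per_year):
    """Constant power that would use kwh_per_year over one year."""
    return kwh_per_year / HOURS_PER_YEAR


avg = average_kw(15000)
print(f"{avg:.3f} kW average ({avg * 1000:.0f} W)")
```

Going the other way, multiplying a power by a time gives energy, which is why the unit is written kW·h (kilowatt-hour) and not kW/h.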

Only reason for kWh and kWh/hr etc. is to make the numbers “smaller/shorter”.

15000kWh sounds less dramatic than 15000000watt.

With new digital meters the power companies can get granularity down to the second if they really wanted, but the kilo per hour will probably remain as the number unit.

They should have called it something else, though, IMO. I’m able to confuse myself sometimes. If it had been called “1 Power Unit” instead of “1 kilowatt hour”, then it would have been much saner to say “1 kilowatt hour per hour” and similar.

Watts are already a unit per time. Watts per hour are different, and watt-hours are different again. Nobody says “1 kilowatt hour per hour” – the “saner” thing to say, as you put it, would be to just say “watts”.

“Who remembers parking hard disks?” I DO! :D

That aside, I’m basically with Dreadjaws on this. I put my computer into sleep mode if I’m going to be away from it for more than an hour, but I shut down the computer completely at night because I’m a sensitive sleeper and those tiny lights on the motherboard and the soft whirr of the fans are enough to keep me awake. I need a room that’s completely dark and silent to sleep.

I didn’t know this was ever a thing that humans did manually! Although I suppose it makes sense, if the algorithm to know when to spin down / move the heads out of the way was too slow for computers at the time, or for some other reason. ^^;

>> “Back in the 80s, it was objectively better to shut down for the night. The PC couldn’t shut down those energy-sucking CRT screens, which was a big part of the energy drain.”

Assuming the monitor was a separate device you could always shut it down overnight while leaving the computer itself on. That’s what I used to do for a long time. In fact, I still do it even though I have an LCD now.

Now that we have LED backlights, a sleeping LCD uses basically no energy (measured by the entirely scientific method of feeling how hot it is). The mercury bulb backlit LCDs are a different matter, though.

One place I worked was addicted to motivational screensavers; at one point they even had one encouraging workers to turn off their monitor! I wrote to IT with some back-of-the-envelope maths to prove that constantly running a screensaver instead of letting the monitors sleep basically used the same amount of energy as you’d save by turning them off overnight.

They didn’t write back.

I know it will ruin your hand pattern of paragraphs but the truly geeky way to follow “on the other hand” is “on the gripping hand”.

Yeah, that’s the usual phrase for a third hand that trumps the first two. But I do also like “othermost” for the last in a longer series of hands.

Shamus uses the phrase himself; I expect he didn’t on this occasion because he was ramping up to five other hands.

Best to be vague if you’re going past three. You really don’t want to know what those other hands are doing.

I used to keep the PC on 24/7 back in the day, when the internet was so slow it was always in the middle of a download, and I could take a shower before Windows finished booting up.

Nowadays, with SSDs and Windows’ hybrid shutdown/hibernation thing, coming back from a cold boot is so fast that it doesn’t even register as an inconvenience. And yes, the dark and quiet at night is nice.

I just turn it off overnight because it feels like the logical thing to do. I’m not using it, and it has no other reason to be on, therefore it should be off.

And, like you say, it’s one of those trivial things that you could optimise forever for very little gain. I suspect the single most effective thing you can actually do is to vote for politicians who will address climate change properly. (I’ll leave that thought there though, I don’t want to draw this comment section into a political argument…)

Don’t underestimate climate-focused purchasing habits. At the end of the day, what motivates businesses most is the bottom line.

You can actually measure this directly – grab one of these: https://amzn.to/2AyrmHb

I just ordered one and will report back. My system is a 2011 build.

I shut down my PC when I’m not using it. But my use case is very light at the moment. My PC is from 2010, though it’s been renovated a lot: new RAM, motherboard, and graphics card (though that’s mostly due to power problems with the old mobo).

Can it run modern games? Mostly. But that’s not the point. It’s a studio/DVD-ripping machine, and most of my current games are played on console, so the relative lack of CPU power doesn’t come into the picture.

Compared to on/off power usage? I have a laptop from 2013 that I keep in permanent sleep mode. It’s less power hungry than the PC and my day-to-day go-to for computer usage.

This: Those shutdown + boot cycles get the physical parts moving around, which increases wear and tear on the hardware.

I heard from a friend who’s a professional computer nerd that the shutdown/boot causes enough wear and tear on the hard drive that shutting down at night is a bad idea. This was the 80s, a time when I and every other regular computer user I knew had lost a hard drive (and all the data on it — serious motivation to keep up with our backups) at least a couple of times, whether it’d actually crashed or just quit one day. So the idea of minimizing said wear and tear seemed like a good candidate for Primary Factor To Consider. The power usage never really came up as an issue, even back when it was much more of one than it is now.

Hard drives are a lot more reliable nowadays, so, like you, I don’t know how much of a factor this is anymore. But I got into the don’t-shut-down habit a long time ago, and will probably stick with it until I get a really good reason not to. I don’t think I’ve ever actually shut down the laptop I’m working on in the… three or four? years I’ve had it. I reboot periodically, when it starts looking at me crosseyed, but other than that it’s always on.

Angie

I don’t think power consumption was ever the primary motive; I remember it being because if you didn’t reboot periodically you’d start having performance issues or bluescreen. Operating systems in general were worse at freeing up memory automatically and a lot of programs didn’t explicitly release memory back to the OS, so you could eventually end up with a whole bunch of memory assigned to programs that weren’t using it.

Still happens these days, but it’s rarer and takes much longer to become an issue; modern memory management is better at telling whether a piece of memory is actually in use as opposed to linked via circular references to memory that can’t be reached from outside of the cycle. Plus it pretty much doesn’t affect the operating system itself, so you just need to restart a problem program rather than the computer.

Indeed, the problems used to be that the OS itself was ‘leaking’ memory – essentially, it can’t prove it’s not being used, so has to keep the content around.

It doesn’t matter too much when it’s only applications doing that, but older OSs (especially Windows) would leak their own memory…

These days that’s not much of a problem.

On Windows, the memory management is really good and pretty much* no matter what you do, when the application exits (or is killed), all the memory is released to the wild unless another application is still using it, in which case it’ll be released when that one ends.

Linux memory management isn’t quite as good, it’s quite easy to ‘leak’ shared memory used for inter-process communication as Linux doesn’t clean it up when they’ve both exited – it remains both reserved and with the last lot of content. I’m told that this is in case a future instance wants the data back.

* Windows applications can still leak ‘handles’; the one you’ll notice is files getting locked by an application, making them impossible to delete until you reboot.
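The shared-memory behaviour described above (a segment outliving the processes that used it) is easy to see with Python’s `multiprocessing.shared_memory` module (Python 3.8+). This is a minimal sketch assuming a Linux/POSIX system; the segment name is a made-up example. Closing every handle does not free a named segment: it stays put, contents and all, until something calls `unlink()` (which wraps `shm_unlink()` on POSIX). On Windows the result would differ, since there the segment is freed once the last handle is closed and `unlink()` does nothing. Python’s own resource tracker may also tidy up segments at interpreter exit, so the sketch unlinks explicitly.

```python
from multiprocessing import shared_memory


def segment_outlives_handles(name: str) -> bytes:
    """Write to a named segment, close every handle, then reattach by name (POSIX)."""
    # "Writer": create a 16-byte named segment and put some data in it.
    writer = shared_memory.SharedMemory(name=name, create=True, size=16)
    writer.buf[:5] = b"hello"
    writer.close()  # unmaps it for this handle only; the segment itself survives

    # No handles are open now, yet the segment and its content are still there.
    reader = shared_memory.SharedMemory(name=name)
    data = bytes(reader.buf[:5])
    reader.close()

    # Only an explicit unlink actually removes it.
    reader.unlink()
    return data


print(segment_outlives_handles("hypothetical_ipc_demo"))  # b'hello' on Linux
```

If the `unlink()` call were left out, creating a segment with the same name again would fail with `FileExistsError` until the next reboot, which is the “leak” the comment describes.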

The one I actually noticed was the Windows audio device isolation driver leaking threads (it would start some new threads when a program started making sounds, then fail to release the threads… ever). 15.5 thousand threads later, this one driver was using a steady 20% of my CPU’s capacity just cycling through now-useless threads to see if any of them was actually doing anything (they obviously weren’t), and causing noticeable delays on a human timescale (significant framerate drops in games, and if I started a video the audio would be out of sync by about a second). Looking online, the earliest reports I found of this bug were from before Windows 7; the machine I noticed it on is running Windows 10.
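The leak pattern described above (a new thread whenever a program starts making sounds, never released) can be reproduced in miniature. This is a toy sketch in Python; `LeakySoundSession` and its methods are hypothetical stand-ins, not the actual Windows audio driver’s code. Each “sound” starts a worker that waits for a stop signal nobody sends, so the threads pile up until something signals and joins them.

```python
import threading


class LeakySoundSession:
    """Hypothetical stand-in for the buggy driver: one worker thread per sound."""

    def __init__(self) -> None:
        self._stop = threading.Event()  # the buggy path never sets this
        self.workers: list[threading.Thread] = []

    def on_sound_started(self) -> None:
        # Start a worker that blocks until it is told to stop...
        worker = threading.Thread(target=self._stop.wait, daemon=True)
        worker.start()
        self.workers.append(worker)
        # ...but nothing signals or joins it when the sound ends: that is the leak.

    def release_all(self) -> None:
        # The fix: signal every worker, then join it so the thread really exits.
        self._stop.set()
        for worker in self.workers:
            worker.join()
        self.workers.clear()


session = LeakySoundSession()
for _ in range(100):
    session.on_sound_started()
alive = sum(w.is_alive() for w in session.workers)
print(alive, "threads still alive after 100 short sounds")
session.release_all()
```

A real driver would more likely have each worker exit when its own sound ends; the single shared `Event` just keeps the sketch short.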

Ha.

Haha.

HAHAHAHAHAHAHAHAHAHAHA…!

Haaah.

It’s 241 tabs, actually. They’re like the growth rings on a tree!

(I’m a monster, I know.)

I’m at 33 tabs right now… In this window. I have 4 browser windows open, some with more tabs, a few with fewer.

That said, I have an extension that unloads tabs after a few hours of not looking at them. The extension doesn’t help startup, though, which is one of the many reasons why rebooting my PC as little as possible is somewhat important to me.

While I’m on my pedantic streak: Do you realize you just claimed that you had four numbers such that one of them was smaller than (at least) two of the others and at the same time larger than (at least) two of the others?

Can’t you just set the browser to only load the active tab on start up? That’s how I have Pale Moon configured, and I figure it’s the same with Firefox and probably an option with Chrome.

I’ve only got 5 tabs…

I go down to four when I close my browser, but I also regularly go up to many more while in use: currently I have six, but I expect I’ll get an additional sixteen when I get to checking YouTube.

I don’t know which browser you’re using, but I think in most of them, just having tons of tabs open, will consume CPU cycles, even if the window is minimized — so those will increase consumption, not just for loading/saving but also for just keeping them open.

Well, it’s really easy. Typically there would be no difference in consumption for accessories (monitors, sound system, etc.) between an idle PC and a shut-down PC, as next to nobody turns them off when the PC shuts down. Everyone just leaves them on with their built-in auto-sleep timeout. So we can focus only on the magic box under your table.

So let’s run some rough numbers. The PC cannot exceed the limits of your power supply. Typically people have 500-750 Watt PSUs. I have a 750 in my case, so I’ll continue using that number. Typical PSUs operate at around 80% efficiency, which would turn 750 Watts of output into some 940 Watts drawn from the wall (I have a more efficient one, but let’s ignore that). That’s the worst-case scenario, where the PC eats as much power as it can get (which is never the case, and the safety circuits would shut your PC down, but let’s ignore that). Let’s also ignore that with an SSD your system boots within a minute, and stretch that into a 4-minute chore with a good old HDD. Let’s assume shutting the PC down draws as much power but is a bit faster: give it a minute. And let’s assume you use your PC once a day, so that’s one boot-up and one shutdown. That’s 5 minutes of this power intake a day. What does that amount to in a year? Electricity is typically priced per kWh:

(5/60) [≈ 0.0833 hours = 5 minutes] x 940 [watts] x 365 = 28,592 Watt hours ≈ 28.6 kWh

In my country that would cost about 5 dollars.

Add the price of leaving your two monitors sleepy 16 hours a day. 5 Watts seems to be the agreed middle ground:

2 [monitors] x 5 [watts] x 16 [hours] x 365 = 58,400 Watt hours = 58.4 kWh

That’s about twice as much, so roughly 10 more dollars.

Now, in addition, what if I leave my PC idle 16 hours a day? Idle, not sleepy, not hibernated. Reportedly systems similar to mine consume some 140 Watts in idle.

140 [watts] x 16 [hours] x 365 = 817,600 Watt hours = 817.6 kWh

That’s almost 130 dollars in my country.

How long would it take me to burn through 130 bucks in “gaming mode”? My system should draw some 500 Watts under AAA gaming pressure, and my two monitors should use 38 Watts each, so 576 Watts in total.

817,600 Wh / 576 W ≈ 1,419 hours. With 3 hours of playtime a day, that’s about 473 days, so more than a year.

And now I’m hoping I made no mistakes.
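One way to double-check arithmetic like this is to script it. This is a sketch, not a measurement: the wattages and hours are the comment’s own estimates, and the price of $0.16/kWh is an assumption back-derived from the “28.6 kWh ≈ 5 dollars” figure, not a quoted tariff.

```python
def kwh_per_year(watts: float, hours_per_day: float, days: int = 365) -> float:
    """Energy for a constant draw: W x h gives Wh; divide by 1000 for kWh."""
    return watts * hours_per_day * days / 1000


PRICE_PER_KWH = 0.16  # assumption: roughly what "28.6 kWh ~ $5" implies

scenarios = {
    "boot + shutdown": kwh_per_year(940, 5 / 60),  # 5 min/day at worst-case wall draw
    "monitors asleep": kwh_per_year(2 * 5, 16),    # two monitors at 5 W, 16 h/day
    "PC idle": kwh_per_year(140, 16),              # idle (not asleep), 16 h/day
}
for label, energy in scenarios.items():
    print(f"{label:16} {energy:6.1f} kWh/year  ~${energy * PRICE_PER_KWH:.0f}")

# Hours of gaming (500 W PC + two 38 W monitors) that burn a year's worth of idling.
gaming_hours = scenarios["PC idle"] * 1000 / (500 + 2 * 38)
print(f"{gaming_hours:.0f} h of gaming = {gaming_hours / 3:.0f} days at 3 h/day")
```

Plugging in wattages read off a plug-in power meter, as suggested further down, would make the comparison far more reliable than PSU ratings.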

Well, you’d better measure your computer’s power usage, because it may be well below what you think.

I have a reasonably beasty gaming PC (i5 4590, HDD + SSD, GTX 1070) and an old 19″ LCD screen.

I recorded it for a while and got those results:

– 65-100W when idling or desktop use

– 150W on average when gaming

– max spike recorded during 2 weeks was at 250W

A funny thing is that at the GRUB menu (OS selection and parameters) it was drawing more power (~95 W) than while Linux was loading from the HDD (~80 W).

Maybe I should redo that a couple of times to be sure. I thought it was because no advanced power management driver was loaded.

You could buy a power-measurer that you plug your power-brick / power-bar into, and save calculations. You’d be able to leave it overnight, or during a gaming session, and get a real Wh measurement. :)

I think it’s worth considering the halfway state in this argument: hibernate mode (for Windows PCs). I use a laptop all day on battery in the field, so I’m very conscious of my power usage patterns (so as to minimize downtime). While a full shutdown/restart is costly in terms of time/battery power, going into hibernate mode for an hour or two is often very beneficial and uses noticeably less power than sleeping for an equivalent period of time. To my understanding, hibernate mode is essentially a full shutdown minus the logging out and closing of windows/active apps (with RAM copied to the HDD). FWIW, my laptop uses a spinning HDD (5200 RPM) with a small SSD for cache (16 GB, hybrid drive).

My tablet is the opposite. It uses nothing when completely off and powered back on. (Takes forever though.) If left in hibernate it will lose 10% battery a day.

Same here; I started doing it a year or three ago. Either way, that was long before Windows 10 started trying to reopen, on boot, all the apps that were open at shutdown. Except it doesn’t restore them in the state you left them ‘so you can pick up where you left off’; it just opens them afresh to a login screen, a blank document or whatever. That’s literally the worst of both worlds: nobody asked for it, it can’t be disabled, and it was (and still is) made totally unnecessary by the already existing sleep and hibernate functions. I always worry about power outages when it’s sleeping for long stretches, and if I really wanted to save that much more power I’d chuck it all in the ocean.

I shut down my laptop when I’m moving it, to make as sure as possible that the hard disk isn’t operating at the time the system is most prone to accidental physical bumps. Power consumption never entered the equation, though I’m quite aggressive about turning off unused lights.

Ugh, spinning disks! Get with the times! :P

When I first got my current comp, it ran Win 8 and wouldn’t reliably restart/boot, so I turned it off as little as possible. Once I upgraded to 10, that went away (though Lenovo told me not to, I decided I hated 8 enough to try 10), but I’m still in that habit. Plus, it’s a laptop that lives on my bed and if I’m awake and at home (which is 2/3 of my day at least) I’m on it. I figure we waste more energy via the ancient windows (ancient for Atlanta, meaning late 60s, my city is weird) than my PC does, so eh…

To crush your enemies . . . no? We’re not doing that?

“What is it that a man may call the greatest things in life?” – “Hot water, good dentishtry and shoft lavatory paper.” — Cohen the Barbarian in conversation with Discworld nomads (Terry Pratchett, The Light Fantastic)

Honestly, it’s even funnier in video…

https://www.youtube.com/watch?v=aYHFMuvCsr0

I don’t know about this.

Since I’ve been on W10 with an SSD (skipped W8), my boot-ups are so fast that I turn it on, go get something to drink, and by the time I come back it’s not only done booting, it’s already gone back to sleep. (It’s on an ‘if no key is pressed 15 seconds after booting, go to sleep’ thing; I don’t know why, but it’s not inconvenient enough for me to arse around trying to change it.)

But I also have a very clean startup – self-built computer so no OEM bloat, only Steam and malware protection load at start.

As for AC – yeah, because of how hot it is where I live the difference between a month of using AC and a month of not using it (and I still use it sparingly) is over a hundred dollars, around 150 at peak.

Oh, my God, I got mentioned in Shamus’ blog! I feel like a celebrity! Please, no autographs. OK, OK, I’ll give a few, but it’ll be $50 each. I have games I’ll never play to buy.

Kidding aside, I’m glad for this discussion. While it’s true what you say, that whatever we choose is minimal gain, it’s still something that piques my curiosity.

I’ll take 10!

One thing I’d forgotten about previously: I also used to leave my old computer on overnight because shutting it down meant the noisy fans I listened to all day would stop, leaving dead silence, which was far worse to sleep in than just leaving the thing running; it’s too different. The new computer is much quieter, but it still makes some noise, and now I’ve got an air purifier churning at all hours anyway, so it’s just the convenience.

“I’m not sure why it takes Windows fifteen seconds to shut down”

I’m not an expert on Windows internals, but I’m gonna guess: committing registry changes to disk, writing user changes to disk, waiting for programs to quit,*1 updating and closing system logs, and, if a small patch was downloaded in the background, preparing it so the other half of the process can be done after bootup.*2 A few maintenance tasks are probably done then too (to avoid slowing down the bootup any more). I’m sure Windows does a ton more besides.

Also, if you have hibernation enabled, this adds a lot to the shutdown, since the contents of system memory are saved to disk (in the hibernation file, hiberfil.sys, on the C drive); that takes quite a few seconds if the HDD or SSD has slow sequential writes.

Even if you don’t use hibernation, if fast startup (“fast boot”) is enabled, the memory of the kernel and key drivers is saved to disk; this takes a few seconds.

*1 Windows sends a shutdown warning to all programs and waits for an acknowledgement (a program can, for example, “refuse” and prevent shutdown). Programs should also save their settings etc. at this point and close open files/stop writing. Later it sends the actual shutdown signal; programs that do not quit are given 5 seconds to close before Windows forcibly terminates the process.

*2 If you have ever seen “Update and Shutdown” or “Update and restart” this means a patch has been half-installed.
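The two-phase sequence in footnote *1 can be modelled in a few lines. This is a simplified sketch, not how Windows implements it: the `App` fields and `shut_down` function are hypothetical, time is simulated rather than measured, and the 5-second grace period is the figure given in the comment. In real Win32 programs the two phases roughly correspond to the `WM_QUERYENDSESSION` and `WM_ENDSESSION` window messages.

```python
from dataclasses import dataclass

GRACE_SECONDS = 5.0  # grace period quoted in the comment


@dataclass
class App:
    name: str
    allows_shutdown: bool = True  # answer to the "may I shut down?" warning
    seconds_to_exit: float = 0.5  # simulated time to save settings and quit


def shut_down(apps: list[App]) -> dict:
    # Phase 1: warn every program and collect answers; any refusal cancels shutdown.
    refusing = [a.name for a in apps if not a.allows_shutdown]
    if refusing:
        return {"aborted": True, "blocked_by": refusing, "exited": [], "killed": []}
    # Phase 2: send the real shutdown signal; stragglers past the grace period are killed.
    exited = [a.name for a in apps if a.seconds_to_exit <= GRACE_SECONDS]
    killed = [a.name for a in apps if a.seconds_to_exit > GRACE_SECONDS]
    return {"aborted": False, "blocked_by": [], "exited": exited, "killed": killed}


print(shut_down([App("editor"), App("hung_game", seconds_to_exit=30)]))
print(shut_down([App("editor", allows_shutdown=False), App("browser")]))
```

Even this toy version shows why shutdown time depends on the slowest program rather than on Windows itself: the second phase cannot finish until every app has either exited or run out its grace period.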

I think you got it right with the idea of trying to offset your computer usage by doing other things that save noticeable power. I live in the UK so air conditioning isn’t a thing, but heating and clothes dryers are, and they use far more energy than my PC does.

My PC is set to go to sleep after being unused for two hours. It appears to go into hibernation – I can unplug it and when switched back on it doesn’t notice.

Years ago I bought an outlet power meter to answer this sort of question once and for all. The only answer I actually got was that the power usage of my machine varied hugely while sitting idle, and across multiple startups.

That was a different machine in a different country with different outlets, and I didn’t hold on to the numbers I collected, so I can’t give specific examples or repeat the experiment… For what it’s worth, I power my machine off most nights. Not to save power, but to hunt down all the horrible resident memory-suckers that modern Windows is prone to. My Linux machine at work I only turned off on weekends.

None of this relates to my real question, which is: is it a good idea to turn a computer off at the wall? Or unplug it, I guess, for Americans who can’t switch it off at the wall. ’Cos that was my parents’ policy all through my growing up, and it drove me mad, mainly because having to lean down behind the desk every time was annoying, but I never knew enough to give them a less selfish reason why it was bad.

As far as I know they’re still doing it. I hear about non-solid state drives losing data if unpowered for long enough, possibly other things, but I guess I am still not an expert enough to convince them.

All hard drives are meant to store data without power, solid-state or not. The only loss of data would be if it was unplugged without the computer saving everything from memory (temporary / dies with power off), to disk. Like if somebody unplugs the computer without doing the “shutdown” thing from the operating system.

Hooo, where do I start?

First, disclaimer: We’re all nerds here, and that should be justification enough to make anything worth discussing.

Second: I’m an engineer and an efficiency fetishist. Minimizing energy consumption is an obsession for me, and I’m not holding back here.

Third: No human can be perfect, and no opinion consistent. Like: My PC is chewing through electricity while I write this, and I could save energy by not doing so. But my obsession must be spread.

Almost all my electronic devices have a real on/off button which physically disconnects power. But I don’t always use those, because mains extensions with switches are so much more convenient. Also, in Britain all wall sockets have switches. What isn’t in use is turned off. Any single device wouldn’t be a significant drain, but all those TVs, monitors, computers, microwaves, switches and stuff do add up. That’s especially true for PCs, since even very efficient power supplies are quite inefficient at low power.

To me it’s just second nature that things which I don’t need should not be running. The main point of inconvenience is that if I have tons of stuff open, I may need to save and later open it all again. That’s a major drain on time, and I’m liable to forget something. But that’s very easily dealt with by sending the computer into hibernation rather than turning it off, which saves the current state to disk and restores it later. That’s way faster than booting fresh (even without re-starting all those programs).

The argument that switching a computer off would wear the components out quicker … actually, I struggle to understand how that would happen. The hard drives are powered down when not in use anyway, which means they must be designed to deal with that (case in point: I haven’t had a hard drive failure in three or so years, and the only drive to fail on me in the last 7 was one I got used; it had come out of an office PC that had been running 24/7 for a few years). The CPU, mainboard, fans… I don’t see how they would have a problem with being turned off and on again.

I used to use sleep a lot on my PCs but had way too many instances where they’d just turn on from someone touching the table and inadvertently wiggling the mouse. So the only scenario where I use it now is at work, when I think I might have to log in to that machine remotely later.

And back to the original question of what saves more energy: if your sleeping PC uses about 5 watts and you sleep for 8 h, it’ll use 40 Wh overnight. If the machine draws 100 W while going into hibernation and waking up from it (no high CPU/GPU load, just moving data between RAM and HDD/SSD), then unless those two transitions take more than 24 minutes in total (12 minutes each way; mine does it in much less than 1 minute), you are in fact saving energy by turning it off.
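The break-even point can be checked in a couple of lines. This is a sketch assuming, as the comment does, that the hibernated machine draws nothing in between and that both transitions run at a flat 100 W; real machines draw a little in hibernation and vary during transitions.

```python
def break_even_minutes(sleep_watts: float, hours_off: float, transition_watts: float) -> float:
    """Total hibernate-plus-resume time at which hibernating costs as much as sleeping.

    Assumes the hibernated machine draws 0 W in between (a simplification).
    """
    sleep_energy_wh = sleep_watts * hours_off  # e.g. 5 W x 8 h = 40 Wh
    # Wh / W = hours; multiplying by 60 first keeps whole-number inputs exact.
    return sleep_energy_wh * 60 / transition_watts


print(break_even_minutes(5, 8, 100))  # 24.0 minutes in total, i.e. 12 each way
```

Halving the transition power doubles the break-even time, so the conclusion only gets stronger for an efficient machine.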

Wait, so almost all of Britain has the light switch connected to particular electrical outlets thing? Here that’s mostly reserved for rooms without ceiling lights (or that were built without them) so you could plug a lamp into one and turn it on and off via the wall. Our living room’s that way (4 plug outlets with 2 plugs apiece, 2 light switches controlling one plug outlet apiece, the other two are American normal) and I inevitably manage to use the one outlet with the switch off and spend a while trying to figure out what bulb’s burned out on the Christmas/fairy lights before I realize it.

That’s certainly not the case; you can wire in individual sockets to a light switch but I wouldn’t call it common.

I think what you’re reacting to is this line:

In this case, the switch is actually physically located next to the outlet, and each outlet has its own switch (so you can individually control which outlets are supplying power and which are not). The main issue is when you’ve plugged something in and then buried the outlet behind some furniture; getting to the switch can be tricky.

MrPro answered this one for me, but just to add a little to it:

1: There’s still regular light switches and wires coming out of the ceiling for lamps — although some of them are internally wired up in the craziest ways.

2: All regular wall sockets have an integrated switch, so if you plug a 4-way extension cable into the wall and hook up your PC, monitor, stereo and whatever other gadgets you use to that extension cable, then when the devices aren’t in use you simply switch off the socket directly, and all the devices use exactly zero standby power. We do this even with our microwave, which would otherwise spend 99.9% of its life displaying a blinking clock which nobody bothers to set.

3: The reason for the switches on the power sockets is kind-of historical: The wiring in British houses is a bit funny (at least if you’re from the continent), in that there’s only one single ring feeding all power sockets, and so it’s a safety feature that you can turn sockets off individually. There’s a video by Tom Scott where he explains that better than I can. On the continent, you’d have several power circuits, ideally one per room for the power sockets, and one for just the ceiling lights, and you can switch each one off separately at the fuse box — that doesn’t work in Britain.

Those power socket switches are one of the very few features of British domestic technology which I actually appreciate. Otherwise, I pretty much disagree with Tom Scott on whether British plugs are good — I think they’re a particular solution to an unnecessarily complicated scenario.

Regarding the car thing, I’m afraid Shamus’ information is outdated:

https://www.edf.org/attention-drivers-turn-your-idling-engines

https://www.edmunds.com/car-reviews/features/do-stop-start-systems-really-save-fuel.html

Around here, almost any self-respecting car comes with a start/stop system these days, and yes, it saves fuel. Quite a bit, actually, and definitely also for pauses of ten seconds or less.

Of course, the starter motors on such cars are designed for the increased usage they’re seeing. So doing this if your starter motor is not designed for it may indeed be expensive (or it may not, but I’m not the expert on that).

Oh, and idling isn’t really good for an engine, either:

https://mechanics.stackexchange.com/questions/1772/is-idling-bad-for-your-engine

==> Even below ten seconds, turning the engine off does save fuel, and it’s not bad for your engine, either.

I’m now curious about what sort of wear a “warm” boot (restart) does vs. a “cold” boot (power up).

I can’t shake the habit of powering my PC off just because I still play too many older games that have egregious memory leaks and other things that can require a restart to clean up. So I just turn the whole thing off at the end of the day so that I don’t have to remember to restart it. It takes my PC almost exactly as long to wake up from sleep as it does to boot the thing, anyway.

You can kind of test that by bending a piece of tin back and forth. Tin being the dominant metal after silicon for electronic circuits. The warm to cold and cold to warm condition can be simulated by bending tin in the same spot and unbending it repeatedly. Eventually it breaks itself due to metal fatigue. While that’s much more extreme than what power flowing does, it does serve as a way to test which conditions result in the least fatigue.

I don’t think there’s a significant amount of wear on your computer unless you either have some serious high-performance stuff that operates at really high temperatures or if you plan to use the machine for several decades, and cycle to full load and back to zero frequently.

I know there are some high performance compute clusters which keep their nodes on 100% load all the time (e.g. by repeatedly computing Pi or a bunch of prime numbers just to keep them busy), and I suspect it’s for this reason — but most of them don’t do that anymore, so I don’t think that modern components are actually that sensitive to temperature change.

… but even then, your PC will have almost exactly the same temperature when turned off as in sleep mode. If you wanted to keep temperature changes to a minimum, you’d need to keep it under full load all the time, and that would actually eat a lot of power. (I guess you could turn off all the fans and then carefully adjust the load so everything stays warm but doesn’t overheat, but nobody does that, and that’s another piece of evidence that temperature changes aren’t really a problem.)

==> Really, the only difference between powering down and going into power saving (“sleep”) mode is that powering down (and up) includes a short burst of activity, which is nonetheless a lot less intense than starting or playing a modern game or doing serious calculations.

If you’re in a building that uses electric resistance heat, then any power used by your computer is used there instead of by the heater, for zero net use.

This is one area where you’re going to find a lot of bad information, because a lot of dumb assumptions are made, even by those who know what they are talking about. However, you can use a meter like a “Kill A Watt” to measure the power draw with a fairly good degree of accuracy (in general electrical meters are accurate within 0.05% of actual value).

Some say you need to super saturate the system to get max draw, but in reality there are two rules at play for that: series and parallel power. To find voltage in series, sum all elements along a path. To find voltage in parallel, take the highest element along the branches. Reverse those rules for amperage. This gets very muddy with computer circuits, where you have massive swaths that are mutually exclusive. It would probably take weeks of mapping a board to get a good schematic going to do your calculations.

However, the whole deal of startup power is only really applicable if all the capacitors in the system have been drained. That is not likely to be the case. Any good capacitor can hold onto a significant portion of its charge for a week or two without even being attached to anything. That’s why electronics guys use anything conductive to short the contacts and effectively discharge the capacitors.

Even if you have incredibly low power draw, there is also the problem of harmonics. Circuitry is getting better every day about not muddying up the harmonics. When you return power to the company via dumping power into the “common ground”, they recycle it by cleaning up any unexpected harmonics as best they can. You are billed for this under the term “recovery charge” on your electric bill (some areas don’t bill but I have yet to see such an area in the US). To view this you need a spectrum analyzer that can perform a FFT on the common ground of your house, which is an expensive piece of equipment to be attaching to mains power.

*Slow clap*

Well done, the perfect amount of true facts mixed in with blatant lies. I almost started talking earnestly about power factor in response to the misscussion of voltage and amperage.

I don’t care about the power usage at all, but I am still somewhat concerned about what’s better for the lifespan of the computer. Is turning it on and off once a day really worse for the hardware? (I mean, I have a laptop, so maybe the point is moot).

My (slightly uneducated) guess: sleep will keep some transistors and capacitors occupied; it’ll keep charging/discharging them periodically. So those components will keep degrading while your machine sleeps. That degradation is significantly less than it would be during full load (when the interior of your PC case is warmer, some parts significantly so). I’d expect it to be pretty much insignificant, but it’s not zero.

Degradation during high load and high temperature is _way_ higher than at low load, and the same applies to things like corrosion (if, for example, there’s some impurity on a contact and it slowly corrodes, that will speed up if it’s hot) and vibration (high-frequency capacitors can make these super high-pitched noises under load; that’s because they’re vibrating).

In that respect, it’d probably be the best thing for a long life to just never put your PC on full load :)

One thing that may help is improved cooling during high-load phases. That said, IIRC the effect of temperature is very, very non-linear: for each component there’s a range where it can effectively last forever, some range where even tiny increases in temperature are a problem, and of course some range in between those two.

So, assuming that the default set-up for most PCs will have components operate at a temperature where they’ll have a decent life expectancy, my best guess to maximize your machine’s life is to set up cooling so that components stay somewhat below the default maximum temperature at peak load. Removing the peaks in the temperature history will help much more than anything else.

…maybe also make sure the ambient air isn’t too humid? (Or salty! Humid salty air is crazy good for corrosion!)

Oookay, so first step is to stop living in the tropics. Good to know.

I did not pay attention and put my old C64 in my parents’ attic back when I moved out — which at the time was not very well insulated. Despite being in a very moderate climate, it still heated up like crazy in sunlight and almost matched exterior temperature in cold winter nights (we did get snow). That was just for two years or so, but when I dug the computer out earlier this year, it wouldn’t work … Pretty sure that those two years are what made the difference.

Also, I should probably have put some of those moisture-absorbing thingies in there and sealed it up good.

I have a fun counterintuitive example:

Dishwashers are good for the environment.

Washing one load by hand requires about two to four basins of hot water. A modern dishwasher uses 3-5 litres of water (about a gallon for you units-impaired folks). That is quite literally 10 to 20 times less, and since heating water is basically 99% of the energy usage of a dishwasher, this also saves energy. And it also saves you half an hour of your valuable time.

I am pretty sure that dishwashers save money over their run-time (apart from giving you about 30-50 hours of leisure time per year).

As for computers: spinning disks? SSDs are now big, cheap and fast. I replaced all disks for non-backup usage last year, and I am not looking back. Totally worth spending a few hundred on.

The dishwasher thing is true. There have been extensive trials, and humans find it very difficult to impossible to match a dishwasher in terms of water and detergent use, even if they’re trying hard.

Most people who aren’t trying hard will use way more water and detergent than a dishwasher; even more so if they’re in a hurry and annoyed that they need to do the dishes by hand.

The only thing I don’t like about dishwashers is that their detergent is much more aggressive than the one you’d use to wash things by hand, but the small amount used pretty much makes up for that in terms of environmental impact. So the only real downside is having to be careful about what you put in. I still do the good wine glasses (and non-stick frying pans) by hand, because some of the cheaper ones look pretty bad after a few years.

Other downsides to a dishwasher:

* Takes up space in your kitchen.

* Can flood and ruin your flooring.

* Requires maintenance.

So, while it’s clearly worth it, it’s not all up-side.

That’s certainly not false, although we’ve had one for 12 years now and it has never required maintenance so far. The one flood we did have came from the washing machine, and not from the machine itself but from the connection to the water supply, which was a bit odd in that flat; the kitchen floor dealt with it pretty well, though.

Not sure if we’ve saved enough water in the meantime to make up for the purchase costs, but in terms of time saved (and spent either doing other things around the house or just having more spare time), it absolutely paid for itself, many times over!

…but of course, if you live in particularly cramped conditions (or in some crazy expensive area where eating out every day is cheaper than paying for the space to have a kitchen), or if for whatever logistical reason it would be a pain to integrate a dishwasher into your kitchen, I’m absolutely not saying that you must get one. I just think that the circumstances where that applies are much narrower than most people believe.

…it would certainly also depend on how comfortable you are with doing the dishes. I hate it almost as much as my wife does, and just doing the stuff that doesn’t go in the dishwasher is enough to remind me of that fact.

Also, downsides of doing dishes by hand:

* My back and neck don’t like the posture

* The skin on my hands doesn’t like it — my wife even gets rashes

* Uses more water and detergent

* Splashes water all over the place. Kitchens are made for that, but with regular wooden worktops, the area around the sink is where they start to deteriorate first. Marble worktops cost more than dishwashers.

* Handling your dishes and glasses by hand a lot increases risk of breakage

On the SSD front: it’s super nice to have the quick boot (and hibernation recovery) times afforded by SSDs, but whether it’s “worth it” entirely depends on your budget and how much storage you need.

I’ve moved the operating system to an SSD, but bulk storage is still on (multiple) hard drives. If you have multiple terabytes of stuff, SSDs are still rather expensive.

They’re quickly catching up in terms of price, though. I wouldn’t be surprised if SSDs become larger and cheaper than hard drives sometime in 5-10 years.

I still need to finish reading, but had to stop at the video card subnote. The video card market has already leveled out a lot, since GPU mining basically crashed. Prices for new cards are back below MSRP, and you can find sales on new last-gen cards that hadn’t flown off the shelves yet.

Ah, finally found somebody mentioning this right at the bottom of the comments. :P

Shamus, the market started to drop out about a month or two after you posted the article you linked. There are various reasons, mainly that bitcoin had a hard crash, which itself had several causes, like a flood in China knocking out something like a third of all mining machines in the world and child porn being found “on” the blockchain. But the takeaway is that GPU prices are back to normal, and have been for half a year. I can google up a 1080 Ti right now and find one for $719.99, just over the MSRP of $699.99.

You actually have a link in that old article: “The GeForce GTX 1070 launched with a suggested price of $380. A year and a half later and you might expect the price to have dropped a little, but instead it’s at an insane $1,000!” If you follow that link now, you’ll see the price is currently $459.99. That’s a good chunk above MSRP, but not insane, and you can shop around for a different model or buy used (which I wouldn’t really suggest, given that there are decent odds the used market is saturated with overclocked GPUs that have been overworked in mining rigs, but I’ve been told that I’m overly paranoid about that).

Of course, there’s the matter of Nvidia’s new 20xx series being blatant, horrible money-grabbing scams. Don’t look at those at all. It’s just some proprietary tech for shaders bolted onto very little real improvement over the 10xx series, and then marked up by 120 to 300 freaking dollars, depending on the model. A couple of years down the line, if that, AMD and Intel will have worked out the tech themselves and implemented it on their own cards. There is definitely no benefit in being an early adopter at these prices.

I think it’s pretty obvious that shutting it off for 6+ hours is much, much better for power usage.

Let’s say you use 250W (startup is RAM- and HDD-heavy, NOT really GPU or CPU) for 5 minutes. Compared to 6 hours, that’s 1/72 of the time (5/(6*60) = 1/72), so to get the average power usage spread across the full 6 hours, you multiply by 1/72. That brings us out to an average of about 3.5W throughout the whole six hours if you turn it off. Say it takes 500W for 10 minutes to start up: that’s 1/36 of the time, or still only about 14W averaged over the 6-hour period.
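A minimal Python sketch of that averaging, for anyone who wants to plug in their own numbers. The boot wattages and durations are the hypothetical figures from the comment, not measurements, and the function name is made up for illustration.

```python
def average_boot_power(boot_watts: float, boot_minutes: float, off_hours: float) -> float:
    """Spread the energy of one boot across an off period; returns average watts."""
    boot_energy_wh = boot_watts * boot_minutes / 60  # watt-hours consumed while booting
    return boot_energy_wh / off_hours  # average draw in watts over the off period

# Hypothetical scenarios from the comment: 250 W for 5 min and 500 W for 10 min, 6 h off
print(round(average_boot_power(250, 5, 6), 1))   # 3.5
print(round(average_boot_power(500, 10, 6), 1))  # 13.9
```

Compare the result with your machine’s measured idle or sleep draw, not with a single component, to see which option wins.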

Meanwhile, http://www.buildcomputers.net/power-consumption-of-pc-components.html says idle power draw ranges from 5-10W for low-end GPUs to 39-53W for high-end GPUs.

Now the real question is whether you let your computer go to sleep (as in sleep mode), and what its draw goes down to then. I think that’s probably reasonable. Idle on standby (monitors off), openhardwaremonitor says my CPU package uses about 8.5W on its own. It doesn’t show my GPU power level, but I bet it’s at least 10W; it’s not a small GPU.

My stuff is all napkin math. But for me shutting it off for 12+ hours at a time definitely seems worth it.

That “absurdly small” amount of energy might be comparable to the similarly small amount burnt by TVs and monitors left on standby. But in total, the millions of cases of this waste a significant amount of energy (and emit a significant amount of pollution) worldwide. So every little helps :)

But of course only if you don’t have a reason to choose either way, and therefore might as well choose the less wasteful path.

Maybe the time thinking about it / calculating things will consume more energy than the difference!

I would often save money by walking instead of taking the bus and at some point it occurred to me that as a result I probably had to buy more food to make up the energy used in walking! Haha.

I actually measured my machine’s power consumption just a couple of days before this post went up. The (abridged) results are thus:

* All devices plugged-in but turned off: ~0.04 A

* Everything turned on and sitting idle with an empty desktop: ~0.4-0.5 A

* Heavy AAA gaming (GTA V): ~0.8-1.1 A

During boot the power consumption varied widely and rapidly, going at times as low as 0.3 A and as high as 0.85 A.

While none of this would be what I’d call “scientific”, I think it does lead to a simple conclusion: Turn off your damn computer. Even having the system completely idle with the monitor turned off resulted in ten times higher power consumption than keeping it off. On the other hand, barely 30 seconds of video gaming would offset whatever power you’d “save” by not booting up.
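To turn those amp readings into watts, multiply by the wall voltage. A rough Python sketch, assuming 230 V (the commenter later gives 220-230 V) and a power factor of about 1, which is an assumption: a meter reading amps alone gives apparent power, not real power. Where a range was given, the midpoint is used; the names are hypothetical.

```python
WALL_VOLTAGE = 230  # volts; assumed from the commenter's later 220-230 V figure

# Measured currents in amps (midpoints where the comment gave a range)
readings_amps = {
    "off (plugged in)": 0.04,
    "idle desktop": 0.45,
    "gaming (GTA V)": 0.95,
}

def approx_watts(amps: float, volts: float = WALL_VOLTAGE, power_factor: float = 1.0) -> float:
    """Approximate real power draw; assumes power_factor ~1 unless told otherwise."""
    return amps * volts * power_factor

for state, amps in readings_amps.items():
    print(f"{state}: ~{approx_watts(amps):.0f} W")

# How many times more the idle machine draws than the switched-off one
print(f"idle / off ratio: {readings_amps['idle desktop'] / readings_amps['off (plugged in)']:.1f}x")
```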

But an even stronger conclusion is that none of this actually matters. I am actually shocked by how little power modern electronics apparently consume. Even when pushing my system to its limit, I just barely managed to have it scrape the 1 amp mark.

Even running it at 100% benchmark load 24/7 for an entire month would cost me about as much as 2.5 cups of coffee (which says something about either the low cost of electricity or the high cost of coffee where I live).
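A quick Python sketch of the monthly arithmetic. The 230 V wall voltage is taken from later in the thread, and the tariff is a placeholder because the comment doesn’t give one; swap in your own price per kWh.

```python
def monthly_cost(watts: float, price_per_kwh: float, hours: float = 24 * 30) -> float:
    """Cost of running a constant load for a month (30 days by default)."""
    kwh = watts * hours / 1000  # energy in kilowatt-hours
    return kwh * price_per_kwh

full_load_watts = 1.1 * 230  # ~253 W: peak current reading at an assumed 230 V
PRICE_PER_KWH = 0.10  # hypothetical tariff, not from the comment

print(f"{full_load_watts * 24 * 30 / 1000:.0f} kWh per month")
print(f"cost: ~{monthly_cost(full_load_watts, PRICE_PER_KWH):.2f}")
```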

So, are the power supply requirements way over-specced, then? 1.1A at… what’s the wall voltage where you live? Anyway, it can’t be more than 240V, so that’s 264W. Your power supply is probably rated at, what, 600W?

Also, what’s the power draw with the computer in “sleep” mode?

Wall voltage is around 220-230 V. So yes, either I have my Electrics 101 totally wrong, or my PC draws ~250 W at most, making these big chunky 600W power supplies superfluous.

It is worth noting that my graphics card is a miniature (only one fan) GTX 960, which is a notoriously economical little thing. Also, I assume those 600W power ratings have something to do with all those unused SATA cables for people who apparently have a dozen hard drives.

The nominal power supply ratings can be a bit theoretical. You have a 12V and a 5V output, possibly more than one of each, and each circuit has its own maximum output. 600W is what they can deliver in combination, and although that’s usually less than the sum of every single output at its own maximum, you’d need a rather particular power draw pattern to ever get there. That said: I’ve always bought the lowest-power supply with decent efficiency I could get, which was usually 350 to 400W, and it’s never not been plenty. But then, I try to keep both CPU and graphics card TDP at or below 100W (individually, not in combination). If you go all-in on (computational) performance, power requirements can escalate quickly, because when you prioritize performance, efficiency necessarily comes down.

One thing of note: while oversized power supplies are not that much more expensive than smaller ones, power supplies typically reach peak efficiency around half load and fall off at light loads, so even a Platinum (or whatever the labels are these days) unit will be rather inefficient when operating at a small fraction of its capacity. Better to get a mere Gold one that’s closer to the actual power requirement. That will also reduce consumption at idle or in sleep, because in low-power states the conversion losses can easily be above 50% of total power intake.
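The relationship between efficiency and wall draw is simple division: wall power = DC load / efficiency, and the share lost as heat is 1 − efficiency. A small Python sketch; the efficiency values below are hypothetical and for illustration only, not from any datasheet or certification table.

```python
def wall_draw(dc_load_watts: float, efficiency: float) -> float:
    """AC power pulled from the wall for a given DC load and PSU efficiency (0-1)."""
    return dc_load_watts / efficiency

def loss_share(efficiency: float) -> float:
    """Fraction of the wall intake lost as heat inside the PSU."""
    return 1 - efficiency

# Hypothetical (load, efficiency) pairs: heavy load, light idle load, near-sleep load
for load, eff in [(300, 0.92), (60, 0.85), (5, 0.45)]:
    print(f"{load:>4} W DC at {eff:.0%} efficiency -> "
          f"{wall_draw(load, eff):.1f} W from the wall, {loss_share(eff):.0%} lost")
```

Losses "above 50% of total power intake" simply means the supply is running below 50% efficiency at that load.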

Another thing you can do is balance out (and then some) whatever harm generating the electricity to power your computer does, by having all those extra bleepy bloops do something good with microprocessing.

I use World Community Grid.

https://www.worldcommunitygrid.org/index.jsp

There are probably others.