Working with shaders is a little strange. They’re programs that run on your graphics card[1]. The pathway between your CPU and the GPU is a bit of a choke point. These two devices can only communicate so fast, and they need to share a monumental amount of data. In an ideal world, you would shove EVERYTHING over to the GPU. Then each frame you would just specify where the camera is, it would crunch all the numbers for you, and in return you’d get the completed image to show to the player. That’s not really feasible outside of the most rudimentary example programs, but we do want to get as close to that idea as possible.

The GPU is very, very good at crunching 3D spatial values. It’s better than your CPU in the same way that a Formula 1 car is better at Formula 1 racing than a dusty pickup truck[2]. It sacrifices flexibility to excel at one very specific task. You can’t run Microsoft Office or a web browser on your GPU. It literally doesn’t have the ability to perform that sort of processing. But the one thing it is good at, it’s really good at. This creates an interesting challenge for us. Any job we want to give to the GPU, we have to first translate into the sort of job a GPU can do.

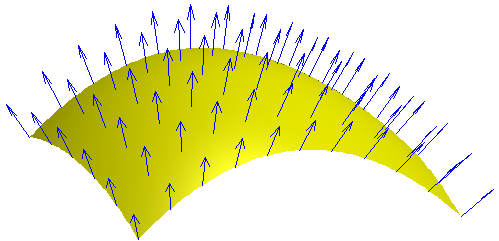

This first one is easy. We just need to calculate some surface normals.


Normals are the vectors that point directly away from a given surface. In a perfect sphere, they would all point directly away from the center. On a nice flat tabletop, they all point directly up. On a complex surface it’s… complex. I suppose one way to think of it is imagine throwing a superball at whatever surface you’re interested in. Ignoring gravity (maybe you snuck your superball onto the international space station?) if the ball bounces back along exactly the same vector you threw it, then it traveled along the normal of the point of impact. Also you should probably stop throwing things in here.

We want to offload this normal-calculating business to the GPU, but we have limited ways of communicating with it, and even more limited ways of getting data from it. We can only give it polygons and textures, and the only thing it can do is draw pixels on the screen.

So what we do is we make a new shader. This one will also take the heightmap as input, but instead of drawing terrain, it will draw us the normal map we want. This drawing is done between frames, where the user can’t see it happen. We draw a plain square to the screen, and we give the shader the heightmap to work with.


For any given point A, we sample its four neighbors: To the west and east we have X1 and X2, and to the north and south we have Z1 and Z2. Our goal is to find the normal for A, but we only care about the position of these neighbors. Within the gameworld, this grouping would look so:


Remember that we’re not actually drawing these polygons right now. We’re just pulling values out of the heightmap, figuring out where the polygons would be, and using these numbers to calculate the value we want.

We use these values to generate a couple of vectors. They’re not the vectors we want, but they’re close. If we subtract Z1 from Z2, we get a vector that points from one to the other. Likewise, if we subtract X1 from X2, we get the vector that points from one of them to the other. Optional bonus info for go-getters: We have to normalize these two vectors. That just means you divide them by their own length. The resulting vector still points in exactly the same direction, but it’s now exactly 1 unit long, regardless of how far apart the original two points were.


So now we have two vectors, which together form a plane. The normal we want is perpendicular to this plane. Time to calculate the cross product. In code, it looks like this:

(For clarity and absolute precision, we’ve named our two red and blue input vectors potato and eisenhower, respectively.)

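```c
/* Minimal vector type, assumed here so the snippet compiles. */
typedef struct { float x, y, z; } vector;

/* Returns a vector perpendicular to both inputs. As written this is
   potato x eisenhower; swap the arguments to flip the result. */
vector CrossProduct (vector eisenhower, vector potato)
{
  vector A;

  A.x = potato.y * eisenhower.z - potato.z * eisenhower.y;
  A.y = potato.z * eisenhower.x - potato.x * eisenhower.z;
  A.z = potato.x * eisenhower.y - potato.y * eisenhower.x;
  return A;
}
```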

If you’re more of a pencil-and-paper type mathematician, then it looks like this:

eisenhower × potato = ||eisenhower|| ||potato|| sin θ n

In this case the “×” symbol means “cross product” and not “multiply”. The rest of it is more mysterious to me. I’m never one for labor-intensive tasks like doing arithmetic or finding clean scratch paper.

Anyway, when you take the cross product of two vectors like this, the result is a new vector that is perpendicular to both. You might notice that there are actually two such answers to this problem. Remember all that left hand / right hand crap we had to worry about last time? This is one of those situations where it matters. In one coord system, your vector will point out of the surface, and in the other it will point into it. That’s fine either way, as long as you remember which way everything is facing. Or, you know, just flip the vector[3] if it’s backwards from what you want.
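To make the "two answers" point concrete, here is a small C sketch showing that swapping the argument order of a cross product gives the opposite vector. The `vec3` type and the `cross` and `negate` helpers are illustrative names, and the textbook argument order is assumed.

```c
/* Hypothetical 3-component vector type, assumed for this sketch. */
typedef struct { float x, y, z; } vec3;

/* Textbook cross product a x b. */
vec3 cross(vec3 a, vec3 b)
{
    vec3 r;
    r.x = a.y * b.z - a.z * b.y;
    r.y = a.z * b.x - a.x * b.z;
    r.z = a.x * b.y - a.y * b.x;
    return r;
}

/* Flip a vector: multiply every component by negative one. */
vec3 negate(vec3 v)
{
    vec3 r = { -v.x, -v.y, -v.z };
    return r;
}
```

Taking x and z as the two horizontal axes and y as up, `cross(x_axis, z_axis)` comes out as (0, -1, 0), pointing into a flat floor, while `cross(z_axis, x_axis)` is (0, 1, 0), pointing out of it. Negating either one gives the other.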

The result:


So now we have the surface normal and a new problem. We can still only write color values. As far as the GPU is concerned, we’re drawing pixels on the screen so the user can look at them. We can write four values: red, green, blue, and alpha. These values are each stored in a single byte[4] and that’s all we have to work with.

Inside of the shader program we’re using real numbers like 0.2 and -0.67114. But as they’re written to the screen they’re clipped into the zero to one range. That’s quite a bit of damage, but then there’s another round of damage when it’s stored in a single byte, throwing away a lot of our precision. The smallest non-zero value we can store is something like 0.00390625 and the next unique value we can store is 0.0078125. Values between these two will get rounded to one or the other[5]. But! We also have some things working in our favor. We want to store the X, Y, and Z values of this vector, and we have red, green, and blue color channels to work with. So that’s handy.
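The two rounds of damage can be imitated on the CPU with a short C sketch. The function names are invented for this example, and it assumes the common convention of scaling by 255, so its step size is 1/255 rather than the 1/256 quoted above. The point about lost precision is the same either way.

```c
/* Clamp a value into the 0..1 range, then squeeze it into one byte.
   Scaling by 255 is an assumption of this sketch. */
unsigned char to_byte(float v)
{
    if (v < 0.0f) v = 0.0f;   /* negative values are clipped to zero */
    if (v > 1.0f) v = 1.0f;   /* values above one are clipped to one */
    return (unsigned char)(v * 255.0f + 0.5f);   /* round to nearest step */
}

/* Turn the stored byte back into a 0..1 value. */
float from_byte(unsigned char b)
{
    return (float)b / 255.0f;
}
```

Here `to_byte(-0.67114f)` is simply 0, and 0.004 and 0.005 both land on the same byte value, so the difference between them is gone once the pixel is written.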

Normalized vector values fall between -1 and +1, but you might recall from the adventure that was the previous paragraph that color values must be between 0 and +1. So what we do is this: divide by two, add 0.5, and stuff XYZ into RGB. This gives us values centered around 0.5 instead of 0.0. That’s fine as long as we remember to undo this step later when we extract the normal from the texture map for use. This process of turning spatial values into color values is pretty common when you’re dealing with shaders, and makes for some strange images. Here are some normal maps I found on Google:
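The divide-by-two-and-add-0.5 step, along with its undo, might look something like this in C. The `vec3` type and both function names are made up for illustration. Inside a real shader this would be a line or two of shader code instead.

```c
/* Hypothetical 3-component type, used here for both normals and colors. */
typedef struct { float x, y, z; } vec3;

/* Map each component from the -1..+1 range into 0..1 so XYZ fits in RGB. */
vec3 normal_to_color(vec3 n)
{
    vec3 c = { n.x * 0.5f + 0.5f, n.y * 0.5f + 0.5f, n.z * 0.5f + 0.5f };
    return c;
}

/* Undo the step above: map 0..1 color values back to -1..+1. */
vec3 color_to_normal(vec3 c)
{
    vec3 n = { c.x * 2.0f - 1.0f, c.y * 2.0f - 1.0f, c.z * 2.0f - 1.0f };
    return n;
}
```

A normal pointing straight up, (0, 1, 0), turns into the color (0.5, 1.0, 0.5), and running that color back through `color_to_normal` returns the original vector.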


We’re creating something along these lines as we process our heightmap. When we’re done, we have an image like one of the above (except showing the contour of our hills instead of brick or whatever that thing in the middle is) sitting on screen[6]. Now we tell OpenGL to grab a spatula and scrape those pixels off the screen[7], then stuff them in a texture map.

You might notice this is exactly the sort of situation we’re trying to avoid. We pull that completed image from GPU to CPU, then turn it into a texture map and send it back. This is an area where apparently consoles have an edge. On some consoles, graphics memory and CPU memory all share the same pool, so you can just copy the pixels directly to where you want them. On the PC, we’re doing at least 2 copy operations. There may be more happening deep down in the driver layers that we don’t even know about. This became an issue in RAGE, where they needed to constantly shuffle texture data around to do megatexturing and the much-slower Xbox 360 could actually out-perform the PC at certain tasks.

So now we take our flat-shaded terrain…


…and apply our normal map:


For the record: The world tiles, so as you travel you’ll eventually wrap around and pass the same scenery again and again. That odd seam on the right side of the image is where this wrapping happens. This is caused by using a heightmap with edges that don’t match up. This will be fixed later.

Next time we’ll be doing something a lot more complex with our shaders.

Footnotes:

[1] I don’t like to bog you down with terminology, but we’re going to need to use SOME. From here on, your computer is the CPU and the graphics card is the GPU.

[2] No quasi-technical explanation is complete without a Terrible Car Analogy.

[3] Multiply by negative one.

[4] You can make textures that use more or less bytes per color value, but since we’re drawing to the screen we still have to match what the display supports.

[5] 0.00390625 because that’s 1/256, because 256 is the number of distinct values you can store in a single byte.

[6] The user doesn’t see it because we’re drawing on the back buffer, which I explained in this post.

[7] Some mobile implementations allow the use of a butterknife instead.


T w e n t y S i d e d

Why the copy back into main memory if the GPU is the consumer of the normal map?

To my knowledge, this is the only way to get the data into a texture map. I’ve seen some stuff about setting render targets and it’s possible there’s a way to render directly into a texture, but I haven’t investigated yet.

You can certainly attach a texture to a framebuffer (glFramebufferTexture2D) so that it renders onto the texture. I did it here to blur and threshold the result of the first draw. The intermediate step never leaves the GPU.

Oh my gosh, that was effortless.

This was something I was going to investigate later in the project. I just assumed it was going to be a complete pain. But it only took me 5 lines of code. (And then I got to delete about a dozen.)

Thanks.

Yeah, render-to-texture is amazingly useful for stuff like this.

Or for multipass rendering, actually, as long as the texture is about the size of the screen. Or for per-fragment alpha. Or for probably a bunch of other things. :-)

Render to texture is the great big trick behind pretty much every modern graphics effect.

Shadows? Render to texture (normally render the depth buffer to a depth texture)

HDR lighting? Render to a /floating point/ texture (normally half-precision), downsample a bunch of times, then “tonemap” the result

Or the greatest trick of all, deferred rendering: Render everything to a texture (Seriously). Rather than doing all of your complex shading on your objects (which may be occluded), render everything to a texture and then do everything fancy “in post” by ping-ponging between a pair of buffers

—

Incidentally, DirectX 10/OpenGL 3 (I think. Might be 11/4) added a feature called “stream out” which lets you “render to vertex buffer” (Basically, the fragment shader stage gets cut out of the pipeline and instead the results of the previous stages get shoved straight into a buffer)

And also, if you want to get fancy you might consider using the tessellation shader stages (GL4/DX11 added them; there are two, a “control shader” and an “evaluation shader”) to make the terrain more precise when you’re close to it (and vice versa)

You can prep the formula for calculating the normal at a point on a surface in the surface shader and pass it to the vertex shader somehow. That should save time compared to making a new normal map.

Unfortunately, I was never very good at the shader interchange thing.

So far, this is a bit like the Terrain Project with more detail. Not that I’m complaining though, quite the opposite. The Terrain Project was what brought me here years ago, and I’m more than happy to see you’re doing something similar again. Can’t wait for the next post!

I’m not really familiar with them, but couldn’t you do the normal calculation in the vertex shader directly on the Heightmap?

From my limited experience, that’s not a vertex shader operation. Unless you’ve already got the normal for the vertex, you need to know the location of other vertices in 3D space. Vertex shader doesn’t have that.

You can work out normals for a heightmap in the vertex shader, and in fact if you’re doing geometry clipmaps or similar it’s much more straightforward. All you need to know is how big the texels of your heightmap are in world space and do the texture lookups to get the four points Shamus used to describe vectors potato and eisenhower; then you know both the change in elevation and the horizontal length for both vectors and have enough information to do your cross product.

While this does involve more texture lookups in the vertex shader, it means that you can save on video memory vs. the intermediate texture approach, and you can use the other channels of the heightmap to contain more interesting aspects of your terrain; like how grassy it is or how many badgers there are per square yard.

Back when I was a wee sophomore in college I was doing some GPU programming for a CS professor. I was fairly okay at programming even then, but attempting to figure out and work around the limitations of this stuff just had me alternately flabbergasted and frustrated. All the resources on the net are technical and full of jargon, and don’t explain exactly what Shamus does – that yes, the GPU is incredibly good at crunching numbers, but only under very small definitions of “crunching” and “numbers”. And this article isn’t even strictly about GPU programming. I’d love to see what Shamus could come up with if he wrote an article specifically dedicated to the topic.

Also, I never knew why normal maps were colored like that. I thought it was just a method of visualization, but it makes a ton more sense when you put it like this.

I thought the same thing too. Geez, I love this site. :3

RE Normal Maps: I don’t remember how long ago I figured it out, but yeah, it’s just a different way of holding information. Images are actually VERY useful for that, since each pixel represents 3-4 bytes of information in a format that we’re very, VERY good at dealing with these days. My engine even stores all pixel data as 4-byte RGBA values, meaning I have an entire 8 bits of every pixel spare. I ended up using it to store a copy of the heightmap.(floating point height values are still better, though)

For the record, in English that equation is “magnitude of eisenhower times magnitude of potato, times the sine of the angle between eisenhower & potato, times a unit vector that points in the direction of the normal.” In other words, that equation actually has nothing to do with what you’re talking about, because it only exists to help you find the magnitude of the cross product, then multiply it by its direction. What you’re actually trying to find is the direction.

In pen and paper math terms, the easiest way I know to remember it is [a,b,c] X [d,e,f] is the determinant of matrix:

$$\begin{vmatrix} x & y & z \\ a & b & c \\ d & e & f \end{vmatrix}$$

x,y,z are arbitrary variables, that tell you what component you’re talking about. So the x-term in your answer is the x component, the y term is the y component, the z term is the z component. Math people will usually call them i,j,k because of convention, but I’m trying to be clearer…

Also, to keep people from looking up too many equations, the easy way to do determinants is to multiply the diagonals top-left to bottom-right and add the products, then multiply top-right to bottom-left and subtract. Here’s a good place with pictures, because it’s hard to say in words.

In my example, doing the determinant gets you xbf+ycd+zae-xce-yaf-zbd, corresponding to cross product [bf-ce,cd-af,ae-bd].

I always liked doing cross products this way better, because then I don’t have to memorize which terms go where in what component

The important point to insist on here is that we have this cross-product which is a bit funny-looking, sure, but really easy to compute. And then the formula tells you that what you get out of it is a normal vector whose size is perfectly well understood.

Pretty handy… This is more info out of a simple formula than what anyone has any right to expect…

This might be pedantic, but wouldn’t it be better to do the cross product first and then normalize the result, instead of the other way around? For one thing, if you normalize first your result won’t be a unit vector unless the starting vectors were perpendicular (the sin(theta) term in the math-people equation), and I thought you needed a unit vector? From what I vaguely remember from my brief experience, OpenGL won’t puke too bad if you don’t give it normalized vectors, but still…

For another thing, normalizing last requires fewer multiplications and square-roots. Now, I’m used to working with tiny little micro-controllers, where bits used to store numbers are limited and accuracy and processing time are important (so you want to minimize operations), so I might be making a big deal out of nothing, but I would think that extra magnitude calc would add up at around the hundred-billionth normal you’re calculating, even if it only amounts to .001 second every tenth frame, or something.

Plus, it cuts a line of code

I guess normalizing first would be helpful if the two vectors have drastically different magnitudes.

Yes indeed. Overnormalization doesn’t hurt anything (except performance) but you really only need to do it once.

Yep. Normalize as few times as possible, because square root operations are expensive. I’ve been doing all these calculations on the CPU, which means I have to carefully budget time and normalizing too many times will bog down my image utilities to an unusable degree.

He’s using a GPU which has vast processing capability(for appropriate processing tasks), but as one of his stated goals is to be able to render at 120Hz with no margin for error, it really is ideal to cut corners wherever possible.

Unless of course, the extra normalizations are not the bottleneck. Don’t optimize without profiling! :)

I always hate when people recite this mantra without actually looking at the situation.

Needless operation can be bad, but when it simplifies the code and makes it more readable at the same time (like saving your normalization for last), there is absolutely no reason not to optimize, regardless of where the bottlenecks are.

Mathematician here. The formula you gave there isn’t actually useful in this case, since the “n” there stands for the normal vector (which is what you’re trying to calculate).

Also, ||v|| means the norm of v (a.k.a. “length”), so if the vectors are normalized it’ll always equal 1.

The truth is that unless you want to start throwing around fancy words like “distribution” and “anti-commutativity”, the best way to describe the formula for the normal is exactly the one you used in your code.

Also, most mathematicians I know prefer whiteboards to scratch paper.

Whiteboards are the best. At my last job, they actually had a small meeting room that had whiteboard COMPLETELY covering all four walls, floor to ceiling (except the door, obviously) – it echoed horrendously, but it was really awesome for working out problems.

Whiteboards are *awful*. The markers run out of ink ridiculously quickly, smell horrible, and don’t erase well with the ancient dirty erasers commonly provided–and trying to get the ink off one’s hands after class is a major pain too. Fortunately *some* of our classrooms still have proper chalkboards.

My experience is that, while faculty in other disciplines (who write a few words occasionally) do like the whiteboards, mathematicians (who write constantly all class period) hate hate *HATE* them.

There is definitely a way to do the normal map calculations entirely on the GPU, see anything on ShaderToy. This is far from optimal.

On the superball thing . . . the broader case is, you throw the superball at the surface. The normal is the direction halfway between the angle it goes in at and the angle it comes out at. The degenerate case is where you throw it in at the normal, and so the in, the out, and halfway between them are all the same.

OK, that’s irrelevant, I know. I don’t even know why I felt I had to say it.

How are you actually generating the mesh of the terrain? It’s been a few years since I did any shader work but as far as I can remember you couldn’t spawn new verts in a shader. Are you sending a flat mesh to the vertex shader and just setting the height there or is actually spawning vertices in shader something that’s now possible?

“Are you sending a flat mesh to the vertex shader and just setting the height there”

Yes.

So… why can’t the graphics card generate the mesh on the fly? I guess it’s not too bad if you are just transferring the mesh into GPU memory once, and then messing with it in-situ.

Still though, for something computationally trivial like a regular hex grid, it seems like it would be more efficient to produce in the GPU than transfer it over.

Could do it in a geometry shader, perhaps: feed in the two triangles that cover the entire surface, plus the heightmap, and have it output a point for each vertex that it thinks it needs, as well as the normals and whatnot for each of them.

But it would depend on how many vertices your card’s geometry shader support allows you to output per input vertex; not having looked at the spec, this limit might be *far* too low to let this work. And there is no geometry shader support in webgl yet (or… there wasn’t a year ago), so I never tried anything like that there, either…

Since you only need to generate the mesh once and not for every drawn frame, the efficiency is essentially irrelevant.

You didn’t mention it so I was wondering: Are you calculating the normal map every frame, or just when you load the texture? In fact, are you deforming your mesh every frame as well?

It seems to me that it should be possible to cache both but your post made it seem like that’s not what you’re doing. It’s not like height or normals are going to change between frames unless you make some kind of destructible terrain.

The normal map, rendered to texture, can be pretty trivially cached if it is not so already.

The deformed mesh is more difficult, and requires the use of a transform feedback buffer. Since moving the vectors via heightmap is ridiculously fast, there’s little benefit to run-and-transform once. (That also makes it more difficult to only render *part* of the mesh).

I love programming but I hate reading code. These posts are pretty much perfect for me.

I have actually experimented with EXACTLY THIS(well… not on the GPU, but the heightmap->normal map->lightmap sequence). I would like to offer a small piece of advice or two.

1: sample points: my heightmap->normal map function has 3 levels of detail, corresponding to 3 levels of point sampling: “Up and Right,”(level 0) where my Ike and Potato vectors are (X,Y)->(X,Y+1) and (X,Y)->(X+1,Y), “Cardinal,” (level 1)where I average normals from the positive “Up and Right” with their negative “Down and Left” counterparts, and “8 Directions,” (level 2) which should be obvious at this point. As far as I can tell, “Up and Right” is actually the industry standard. I’ve found virtually no benefit from using detail levels 1 or 2, and they each take 2x as long as the previous level to calculate.

Short version: you should be able to cut your processing time in half by making your Ike and Potato vectors just (X,Y)->(X,Y+1) and (X,Y)->(X+1,Y) instead of using (X,Y-1)->(X,Y+1) and (X-1,Y)->(X+1,Y) like you appear to be doing. Not huge on a GPU, since I know you’re already taking only a fraction of a percent of your time budget, but given one of your stated goals is RAW SPEED! it’s probably advisable.

2: Normal resolution: Last year I listened to Carmack’s keynote, and voiced hearty agreement when he said that normals should be stored in 32-bit floating point instead of 8-bit integer values like they currently are. Then I actually tried it. The difference between the two is actually not visible to the human eye when rendered to a lightmap, with luminance differences of 1 to 2 out of your 256 possible brightness values.

There is something you might want to look at, though. I found when storing heightmaps as 8-bit values, you can end up with some significant lighting artifacts which are not present when storing heightmaps as 32-bit values.

Finally, for when you get to shadow casting, I mentioned in a comment on this post that I’ve made a small improvement to your EBTPIIS algorithm, and I’d be honored if you wanted to use it. Let me know and I’ll E-mail you the source code.(written in D, but the algorithm should be easy enough.)

About your point 1, I don’t see how (X,Y)->(X,Y+1) and (X,Y)->(X+1,Y) would be faster than (X,Y-1)->(X,Y+1) and (X-1,Y)->(X+1,Y). In either case you’re doing two subtractions and a cross product. And the second one should be more correct.

The first method references 3 vertex locations in memory: Reference, Ike, and Potato. The second references 5 vertex locations in memory: Reference, Ike, InverseIke, Potato, and InversePotato. And if Reference is on the edge of a sharp cliff, you could actually get an incorrect value.

[edit]

I wonder if it could reduce some of the aliasing I mentioned in the second paragraph of item 2, though…

Having a slightly larger memory footprint doesn’t affect speed appreciably.

In your example, normal is:

Normal = CrossProd( Center - XNext, Center - YNext );

In Shamus’, it would be:

Normal = CrossProd( XPrev - XNext, YPrev - YNext );

You don’t actually need to know the details of the one you are standing on, so it’s only 4 vectors vs 3. As for the “correctness”, your method would provide different results depending on how you traverse. If you tiled the heightmap and had an uneven number of columns, you’d have two different normal maps, or ugly seams at the borders.

…Traversal order wouldn’t affect my system any more(or less) than Shamus’s.

Neither would edges. (Though I’m confident my resizing algorithms would take care of the aliasing.)

Dunno if you are still checking in the thread here, but what you are talking about is the difference between a forward difference and a central difference

You’re going to be giving up accuracy in the calculation so you can reduce the number of points in the individual calculations by 1 (as mentioned above, you only need 4 values from memory to do the central difference method). I believe you, that you weren’t able to see the difference in your experiments, but it’s important to note that the difference in accuracy will become significantly more pronounced at different mesh-densities. So, while you might be able to process more vertices in the same amount of time with your method, you might be able to reduce the number of polygons substantially with Shamus’s, without sacrificing quality.
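The accuracy gap between the two schemes shows up even in one dimension. This is a minimal C sketch with made-up function names, assuming evenly spaced height samples in a plain array.

```c
/* Slope at sample i using the sample itself and the next one.
   Requires i + 1 to be a valid index. */
float forward_difference(const float *h, int i, float spacing)
{
    return (h[i + 1] - h[i]) / spacing;
}

/* Slope at sample i using the samples on either side of it.
   Requires i - 1 and i + 1 to be valid indices. */
float central_difference(const float *h, int i, float spacing)
{
    return (h[i + 1] - h[i - 1]) / (2.0f * spacing);
}
```

For heights 0, 1, 4, 9, 16 (a curve following x squared, one unit apart), the true slope at the middle sample is 4. The central difference gives exactly 4 and the forward difference gives 5. The central version reads two samples and skips the center entirely.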

On a whim I decided to check back here to see if there was anything else on this thread.

I’ve actually been thinking about it, and this morning I altered my normal calculation function so that Detail 1 is now Shamus’s method, pushing the old Detail 1 and 2 to 2 and 3 respectively. I’m looking forward to testing it out. Depending on the results, I may rewrite the rest of the function completely.

Specifically, I’m wondering if using Shamus’s method will eliminate the artifacts caused by using 8-bit heightmaps over 32-bit. The nature of 8-bit maps means that you can’t ever have an altitude change of less than 45 degrees between two adjacent pixels. Averaging normals across 4 to 8 helps a little bit, but not enough to justify the time delay. The minimum altitude change across two pixels, however, is 22.5 degrees, which is equivalent to using a 9-bit heightmap in my previous system. The price is a potential loss of detail, but I’ve learned single-pixel details in textures are pretty much worthless anyway.

Why calculate the normal map at all? Why not pass the height map to the fragment shader and calculate the normals there? Same effect for a quarter of the memory (assuming 8-bit height map), and you don’t calculate normals for points which don’t appear on screen.

Though, on the other hand, using normal maps would make it easy to add small-scale fake bumps via a second normal map in the fragment shader, which would be harder to do with height maps.